Intelligence

- Andrew, Christopher and Vasili Mitrokhin. The Sword and the Shield. New York: Basic Books, 1999. ISBN 978-0-465-00312-9.

- Vasili Mitrokhin joined the Soviet intelligence service as a foreign intelligence officer in 1948, at a time when the MGB (later to become the KGB) and the GRU were unified into a single service called the Committee of Information. By the time he was sent to his first posting abroad in 1952, the two services had split and Mitrokhin stayed with the MGB. Mitrokhin's career began in the paranoia of the final days of Stalin's regime, when foreign intelligence officers were sent on wild goose chases hunting down imagined Trotskyist and Zionist conspirators plotting against the regime. He later survived the turbulence after the death of Stalin and the execution of MGB head Lavrenti Beria, and the consolidation of power under his successors. During the Khrushchev years, Mitrokhin became disenchanted with the regime, considering Khrushchev an uncultured barbarian whose banning of avant garde writers betrayed the tradition of Russian literature. He began to entertain dissident thoughts, not hoping for an overthrow of the Soviet regime but rather its reform by a new generation of leaders untainted by the legacy of Stalin. These thoughts were reinforced by the crushing of the reform-minded regime in Czechoslovakia in 1968 and his own observation of how his service, now called the KGB, manipulated the Soviet justice system to suppress dissent within the Soviet Union. He began to covertly listen to Western broadcasts and read samizdat publications by Soviet dissidents. In 1972, the First Chief Directorate (FCD: foreign intelligence) moved from the cramped KGB headquarters in the Lubyanka in central Moscow to a new building near the ring road. Mitrokhin had sole responsibility for checking, inventorying, and transferring the entire archives, around 300,000 documents, of the FCD for transfer to the new building. 
These files documented the operations of the KGB and its predecessors dating back to 1918, and included the most secret records, those of Directorate S, which ran “illegals”: secret agents operating abroad under false identities. Probably no other individual ever read as many of the KGB's most secret archives as Mitrokhin. Appalled by much of the material he reviewed, he covertly began to make his own notes of the details. He started by committing key items to memory and then transcribing them every evening at home, but later made covert notes on scraps of paper which he smuggled out of KGB offices in his shoes. Each week-end he would take the notes to his dacha outside Moscow, type them up, and hide them in a series of locations which became increasingly elaborate as their volume grew. Mitrokhin would continue to review, make notes, and add them to his hidden archive for the next twelve years until his retirement from the KGB in 1984. After Mikhail Gorbachev became party leader in 1985 and called for more openness (glasnost), Mitrokhin, shaken by what he had seen in the files regarding Soviet actions in Afghanistan, began to think of ways he might spirit his files out of the Soviet Union and publish them in the West. After the collapse of the Soviet Union, Mitrokhin tested the new freedom of movement by visiting the capital of one of the now-independent Baltic states, carrying a sample of the material from his archive concealed in his luggage. He crossed the border with no problems and walked in to the British embassy to make a deal. After several more trips, interviews with British Secret Intelligence Service (SIS) officers, and providing more sample material, the British agreed to arrange the exfiltration of Mitrokhin, his entire family, and the entire archive—six cases of notes. 
He was debriefed at a series of safe houses in Britain and began several years of work typing handwritten notes, arranging the documents, and answering questions from the SIS, all in complete secrecy. In 1995, he arranged a meeting with Christopher Andrew, co-author of the present book, to prepare a history of KGB foreign intelligence as documented in the archive. Mitrokhin's exfiltration (I'm not sure one can call it a “defection”, since the country whose information he disclosed ceased to exist before he contacted the British) and delivery of the archive is one of the most stunning intelligence coups of all time, and the material he delivered will be an essential primary source for historians of the twentieth century. This is not just a whistle-blower disclosing operations of limited scope over a short period of time, but an authoritative summary of the entire history of the foreign intelligence and covert operations of the Soviet Union from its inception until the time it began to unravel in the mid-1980s. Mitrokhin's documents name names; identify agents, both Soviet and recruits in other countries, by codename; describe secret operations, including assassinations, subversion, “influence operations” planting propaganda in adversary media and corrupting journalists and politicians, providing weapons to insurgents, hiding caches of weapons and demolition materials in Western countries to support special forces in case of war; and trace the internal politics and conflicts within the KGB and its predecessors and with the Party and rivals, particularly military intelligence (the GRU). Any doubts about the degree of penetration of Western governments by Soviet intelligence agents are laid to rest by the exhaustive documentation here. During the 1930s and throughout World War II, the Soviet Union had highly-placed agents throughout the British and American governments, military, diplomatic and intelligence communities, and science and technology projects. 
At the same time, these supposed allies had essentially zero visibility into the Soviet Union: neither the American OSS nor the British SIS had a single agent in Moscow. And yet, despite success in infiltrating other countries and recruiting agents within them (particularly prior to the end of World War II, when many agents, such as the “Magnificent Five” [Donald Maclean, Kim Philby, John Cairncross, Guy Burgess, and Anthony Blunt] in Britain, were motivated by idealistic admiration for the Soviet project, as opposed to later, when sources tended to be in it for the money), exploitation of this vast trove of purloined secret information was uneven and often ineffective. Although it reached its apogee during the Stalin years, paranoia and intrigue are as Russian as borscht, and compromised the interpretation and use of intelligence throughout the history of the Soviet Union. Despite having loyal spies in high places in governments around the world, whenever an agent provided information which seemed “too good” or conflicted with the preconceived notions of KGB senior officials or Party leaders, it was likely to be dismissed as disinformation, often on the suspicion that it had been planted by British counterintelligence, to which the Soviets attributed almost supernatural powers, or that their agents had been turned and were feeding false information to the Centre. This was particularly evident during the period prior to the Nazi attack on the Soviet Union in 1941. KGB archives record more than a hundred warnings of preparations for the attack having been forwarded to Stalin between January and June 1941, all of which were dismissed as disinformation or erroneous due to Stalin's idée fixe that Germany would not attack because it was too dependent on raw materials supplied by the Soviet Union and would not risk a two-front war while Britain remained undefeated. 
Further, throughout the entire history of the Soviet Union, the KGB was hesitant to report intelligence which contradicted the beliefs of its masters in the Politburo or documented the failures of their policies and initiatives. In 1985, shortly after coming to power, Gorbachev lectured KGB leaders “on the impermissibility of distortions of the factual state of affairs in messages and informational reports sent to the Central Committee of the CPSU and other ruling bodies.” Another manifestation of paranoia was deep suspicion of those who had spent time in the West. This meant that often the most effective agents who had worked undercover in the West for many years found their reports ignored due to fears that they had “gone native” or been doubled by Western counterintelligence. Spending too much time on assignment in the West was not conducive to advancement within the KGB, which resulted in the service's senior leadership having little direct experience with the West and being prone to fantastic misconceptions about the institutions and personalities of the adversary. This led to delusional schemes such as the idea of recruiting stalwart anticommunist senior figures such as Zbigniew Brzezinski as KGB agents. This is a massive compilation of data: 736 pages in the paperback edition, including almost 100 pages of detailed end notes and source citations. I would be less than candid if I gave the impression that this reads like a spy thriller: it is nothing of the sort. Although such information would have been of immense value during the Cold War, long lists of the handlers who worked with undercover agents in the West, recitations of codenames for individuals, and exhaustive descriptions of now largely forgotten episodes such as the KGB's campaign against “Eurocommunism” in the 1970s and 1980s, which it was feared would thwart Moscow's control over communist parties in Western Europe, make for heavy going for the reader. 
The KGB's operations in the West were far from flawless. For decades, the Communist Party of the United States (CPUSA) received substantial subsidies from the KGB despite consistently promising great breakthroughs and delivering nothing. Between the 1950s and 1975, KGB money was funneled to the CPUSA through two undercover agents, brothers named Morris and Jack Childs, delivering cash often exceeding a million dollars a year. Both brothers were awarded the Order of the Red Banner in 1975 for their work, with Morris receiving his from Leonid Brezhnev in person. Unbeknownst to the KGB, both of the Childs brothers had been working for, and receiving salaries from, the FBI since the early 1950s, and reporting where the money came from and went—well, not the five percent they embezzled before passing it on. In the 1980s, the KGB increased the CPUSA's subsidy to two million dollars a year, despite the party's never having more than 15,000 members (some of whom, no doubt, were FBI agents). A second doorstop of a book (736 pages) based upon the Mitrokhin archive, The World Was Going Our Way, published in 2005, details the KGB's operations in the Third World during the Cold War. U.S. diplomats who regarded the globe and saw communist subversion almost everywhere were accurately reporting the situation on the ground, as the KGB's own files reveal. The Kindle edition is free for Kindle Unlimited subscribers.

- Awret, Uziel, ed. The Singularity. Exeter, UK: Imprint Academic, 2016. ISBN 978-1-84540-907-4.

-

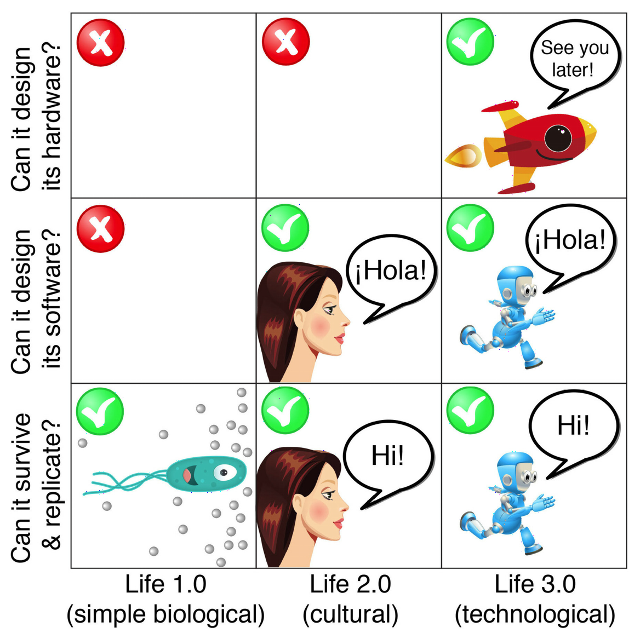

For more than half a century, the prospect of a technological singularity has been part of the intellectual landscape of those envisioning the future. In 1965, in a paper titled “Speculations Concerning the First Ultraintelligent Machine”, statistician I. J. Good wrote,

Let an ultra-intelligent machine be defined as a machine that can far surpass all of the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an “intelligence explosion”, and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make.

(The idea of a runaway increase in intelligence had been discussed earlier, notably by Robert A. Heinlein in a 1952 essay titled “Where To?”) Discussion of an intelligence explosion and/or technological singularity was largely confined to science fiction and the more speculatively inclined among those trying to foresee the future, largely because the prerequisite—building machines which were more intelligent than humans—seemed such a distant prospect, especially as the initially optimistic claims of workers in the field of artificial intelligence gave way to disappointment. Over all those decades, however, the exponential growth in computing power available at constant cost continued. The funny thing about continued exponential growth is that it doesn't matter what fixed level you're aiming for: the exponential will eventually exceed it, and probably a lot sooner than most people expect. By the 1990s, it was clear just how far the growth in computing power and storage had come, and that there were no technological barriers on the horizon likely to impede continued growth for decades to come. People started to draw straight lines on semi-log paper and discovered that, depending upon how you evaluate the computing capacity of the human brain (a complicated and controversial question), the computing power of a machine with a cost comparable to a present-day personal computer would cross the human brain threshold sometime in the twenty-first century. There seemed to be a limited number of alternative outcomes.

- Progress in computing comes to a halt before reaching parity with human brain power, due to technological limits, economics (inability to afford the new technologies required, or lack of applications to fund the intermediate steps), or intervention by authority (for example, regulation motivated by a desire to avoid the risks and displacement due to super-human intelligence).

- Computing continues to advance, but we find that the human brain is either far more complicated than we believed it to be, or that something is going on in there which cannot be modelled or simulated by a deterministic computational process. The goal of human-level artificial intelligence recedes into the distant future.

- Blooie! Human level machine intelligence is achieved, successive generations of machine intelligences run away to approach the physical limits of computation, and before long machine intelligence exceeds that of humans to the degree humans surpass the intelligence of mice (or maybe insects).
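The straight-line-on-semi-log-paper extrapolation described above can be made concrete with a small sketch. The figures below (the machine's present capacity, the doubling time, and the range of estimates for the brain) are hypothetical values chosen purely for illustration, not numbers taken from the book; the point is only how weakly the crossing date depends on them.

```python
import math

def years_to_reach(target, current, doubling_time_years):
    """Years until an exponentially growing quantity reaches a fixed target."""
    if target <= current:
        return 0.0
    # Each doubling multiplies capacity by two, so the number of doublings
    # required is log2(target / current).
    return doubling_time_years * math.log2(target / current)

# Hypothetical, illustrative assumptions (not from the book):
current_ops = 1e11      # operations per second of an affordable machine today
doubling_time = 1.5     # years per doubling at constant cost

# Widely differing guesses for the computing capacity of the human brain.
for brain_ops in (1e14, 1e16, 1e18):
    years = years_to_reach(brain_ops, current_ops, doubling_time)
    print(f"brain estimate {brain_ops:.0e} ops/s: about {years:.0f} years")
```

Under these assumptions, a ten-thousand-fold disagreement about the capacity of the brain moves the crossing date by only about twenty years, which is why the choice of threshold matters so little to the argument.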

I take it for granted that there are potential good and bad aspects to an intelligence explosion. For example, ending disease and poverty would be good. Destroying all sentient life would be bad. The subjugation of humans by machines would be at least subjectively bad.

…well, at least in the eyes of the humans. If there is a singularity in our future, how might we act to maximise the good consequences and avoid the bad outcomes? Can we design our intellectual successors (and bear in mind that we will design only the first generation: each subsequent generation will be designed by the machines which preceded it) to share human values and morality? Can we ensure they are “friendly” to humans and not malevolent (or, perhaps, indifferent, just as humans do not take into account the consequences for ant colonies and bacteria living in the soil upon which buildings are constructed)? And just what are “human values and morality” and “friendly behaviour” anyway, given that we have been slaughtering one another for millennia in disputes over such issues? Can we impose safeguards to prevent the artificial intelligence from “escaping” into the world? What is the likelihood we could prevent such a super-being from persuading us to let it loose, given that it thinks thousands or millions of times faster than we, has access to all of human written knowledge, and the ability to model and simulate the effects of its arguments? Is turning off an AI murder, or terminating the simulation of an AI society genocide? Is it moral to confine an AI to what amounts to a sensory deprivation chamber, or in what amounts to solitary confinement, or to deceive it about the nature of the world outside its computing environment? What will become of humans in a post-singularity world? Given that our species is the only survivor of genus Homo, history is not encouraging, and the gap between human intelligence and that of post-singularity AIs is likely to be orders of magnitude greater than that between modern humans and the great apes. Will these super-intelligent AIs have consciousness and self-awareness, or will they be philosophical zombies: able to mimic the behaviour of a conscious being but devoid of any internal sentience? 
What does that even mean, and how can you be sure other humans you encounter aren't zombies? Are you really all that sure about yourself? Are the qualia of machines not constrained? Perhaps the human destiny is to merge with our mind children, either by enhancing human cognition, senses, and memory through implants in our brain, or by uploading our biological brains into a different computing substrate entirely, whether by emulation at a low level (for example, simulating neuron by neuron at the level of synapses and neurotransmitters), or at a higher, functional level based upon an understanding of the operation of the brain gleaned by analysis by AIs. If you upload your brain into a computer, is the upload conscious? Is it you? Consider the following thought experiment: replace each biological neuron of your brain, one by one, with a machine replacement which interacts with its neighbours precisely as the original meat neuron did. Do you cease to be you when one neuron is replaced? When a hundred are replaced? A billion? Half of your brain? The whole thing? Does your consciousness slowly fade into zombie existence as the biological fraction of your brain declines toward zero? If so, what is magic about biology, anyway? Isn't arguing that there's something about the biological substrate which uniquely endows it with consciousness as improbable as the discredited theory of vitalism, which contended that living things had properties which could not be explained by physics and chemistry? Now let's consider another kind of uploading. Instead of incremental replacement of the brain, suppose an anæsthetised human's brain is destructively scanned, perhaps by molecular-scale robots, and its structure transferred to a computer, which will then emulate it precisely as the incrementally replaced brain in the previous example. 
When the process is done, the original brain is a puddle of goo and the human is dead, but the computer emulation now has all of the memories, life experience, and ability to interact as its progenitor. But is it the same person? Did the consciousness and perception of identity somehow transfer from the brain to the computer? Or will the computer emulation mourn its now departed biological precursor, as it contemplates its own immortality? What if the scanning process isn't destructive? When it's done, BioDave wakes up and makes the acquaintance of DigiDave, who shares his entire life up to the point of uploading. Certainly the two must be considered distinct individuals, as are identical twins whose histories diverged in the womb, right? Does DigiDave have rights in the property of BioDave? “Dave's not here”? Wait—we're both here! Now what? Or, what about somebody today who, in the sure and certain hope of the Resurrection to eternal life, opts to have their brain cryonically preserved moments after clinical death is pronounced? After the singularity, the decedent's brain is scanned (in this case it's irrelevant whether or not the scan is destructive), and uploaded to a computer, which starts to run an emulation of it. Will the person's identity and consciousness be preserved, or will it be a new person with the same memories and life experiences? Will it matter? Deep questions, these. The book presents Chalmers' paper as a “target essay”, and then invites contributors in twenty-six chapters to discuss the issues raised. A concluding essay by Chalmers replies to the essays and defends his arguments against objections to them by their authors. The essays, and their authors, are all over the map. One author strikes this reader as a confidence man and another a crackpot—and these are two of the more interesting contributions to the volume. 
Nine chapters are by academic philosophers, and are mostly what you might expect: word games masquerading as profound thought, with an admixture of ad hominem argument, including one chapter which descends into Freudian pseudo-scientific analysis of Chalmers' motives and says that he “never leaps to conclusions; he oozes to conclusions”. Perhaps these are questions philosophers are ill-suited to ponder. Unlike questions of the nature of knowledge, how to live a good life, the origins of morality, and all of the other diffuse gruel about which philosophers have been arguing since societies became sufficiently wealthy to indulge in them, without any notable resolution in more than two millennia, the issues posed by a singularity have answers. Either the singularity will occur or it won't. If it does, it will either result in the extinction of the human species (or its reduction to irrelevance), or it won't. AIs, if and when they come into existence, will either be conscious, self-aware, and endowed with free will, or they won't. They will either share the values and morality of their progenitors or they won't. It will either be possible for humans to upload their brains to a digital substrate, or it won't. These uploads will either be conscious, or they'll be zombies. If they're conscious, they'll either continue the identity and life experience of the pre-upload humans, or they won't. These are objective questions which can be settled by experiment. You get the sense that philosophers dislike experiments—they're a risk to job security disputing questions their ancestors have been puzzling over at least since Athens. Some authors dispute the probability of a singularity and argue that the complexity of the human brain has been vastly underestimated. Others contend there is a distinction between computational power and the ability to design, and consequently exponential growth in computing may not produce the ability to design super-intelligence. 
Still another chapter dismisses the evolutionary argument through evidence that the scope and time scale of terrestrial evolution is computationally intractable into the distant future even if computing power continues to grow at the rate of the last century. There is even a case made that the feasibility of a singularity makes the probability that we're living, not in a top-level physical universe, but in a simulation run by post-singularity super-intelligences, overwhelming, and that they may be motivated to turn off our simulation before we reach our own singularity, which may threaten them. This is all very much a mixed bag. There are a multitude of Big Questions, but very few Big Answers among the 438 pages of philosopher word salad. I find my reaction similar to that of David Hume, who wrote in 1748:

If we take in our hand any volume of divinity or school metaphysics, for instance, let us ask, Does it contain any abstract reasoning concerning quantity or number? No. Does it contain any experimental reasoning concerning matter of fact and existence? No. Commit it then to the flames, for it can contain nothing but sophistry and illusion.

I don't burn books (it's некультурный and expensive when you read them on an iPad), but you'll probably learn as much pondering the questions posed here on your own and in discussions with friends as from the scholarly contributions in these essays. The copy editing is mediocre, with some eminent authors stumbling over the humble apostrophe. The Kindle edition cites cross-references by page number, which are useless since the electronic edition does not include page numbers. There is no index.

- Barrat, James. Our Final Invention. New York: Thomas Dunne Books, 2013. ISBN 978-0-312-62237-4.

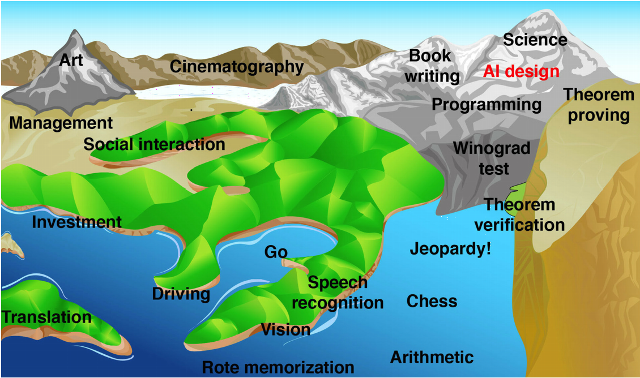

- As a member of that crusty generation who began programming mainframe computers with punch cards in the 1960s, I find that the phrase “artificial intelligence” evokes an almost visceral response of scepticism. Since its origin in the 1950s, the field has been a hotbed of wildly over-optimistic enthusiasts, predictions of breakthroughs which never happened, and some outright confidence men preying on investors and institutions making research grants. John McCarthy, who organised the first international conference on artificial intelligence (a term he coined), predicted at the time that computers would achieve human-level general intelligence within six months of concerted research toward that goal. In 1970 Marvin Minsky said “In from three to eight years we will have a machine with the general intelligence of an average human being.” And these were serious scientists and pioneers of the field; the charlatans and hucksters were even more absurd in their predictions. And yet, and yet…. The exponential growth in computing power available at constant cost has allowed us to “brute force” numerous problems once considered within the domain of artificial intelligence. Optical character recognition (machine reading), language translation, voice recognition, natural language query, facial recognition, chess playing at the grandmaster level, and self-driving automobiles were all once thought to be things a computer could never do unless it vaulted to the level of human intelligence, yet now most have become commonplace or are on the way to becoming so. Might we, in the foreseeable future, be able to brute force human-level general intelligence? Let's step back and define some terms. “Artificial General Intelligence” (AGI) means a machine with intelligence comparable to that of a human across all of the domains of human intelligence (and not limited, say, to playing chess or driving a vehicle), with self-awareness and the ability to learn from mistakes and improve its performance. 
It need not be embodied in a robot form (although some argue it would have to be to achieve human-level performance), but could certainly pass the Turing test: a human communicating with it over whatever channels of communication are available (in the original formulation of the test, a text-only teleprinter) would not be able to determine whether he or she were communicating with a machine or another human. “Artificial Super Intelligence” (ASI) denotes a machine whose intelligence exceeds that of the most intelligent human. Since a self-aware intelligent machine will be able to modify its own programming, with immediate effect, as opposed to biological organisms which must rely upon the achingly slow mechanism of evolution, an AGI might evolve into an ASI in an eyeblink: arriving at intelligence a million times or more greater than that of any human, a process which I. J. Good called an “intelligence explosion”. What will it be like when, for the first time in the history of our species, we share the planet with an intelligence greater than our own? History is less than encouraging. All members of genus Homo which were less intelligent than modern humans (inferring from cranial capacity and artifacts, although one can argue about Neanderthals) are extinct. Will that be the fate of our species once we create a super intelligence? This book presents the case that not only will the construction of an ASI be the final invention we need to make, since it will be able to anticipate anything we might invent long before we can ourselves, but also our final invention because we won't be around to make any more. What will be the motivations of a machine a million times more intelligent than a human? Could humans understand such motivations any more than brewer's yeast could understand ours? 
As Eliezer Yudkowsky observed, “The AI does not hate you, nor does it love you, but you are made out of atoms which it can use for something else.” Indeed, when humans plan to construct a building, do they take into account the wishes of bacteria in soil upon which the structure will be built? The gap between humans and ASI will be as great. The consequences of creating ASI may extend far beyond the Earth. A super intelligence may decide to propagate itself throughout the galaxy and even beyond: with immortality and the ability to create perfect copies of itself, even travelling at a fraction of the speed of light it could spread itself into all viable habitats in the galaxy in a few hundreds of millions of years—a small fraction of the billions of years life has existed on Earth. Perhaps ASI probes from other extinct biological civilisations foolish enough to build them are already headed our way. People are presently working toward achieving AGI. Some are in the academic and commercial spheres, with their work reasonably transparent and reported in public venues. Others are “stealth companies” or divisions within companies (does anybody doubt that Google's achieving an AGI level of understanding of the information it Hoovers up from the Web would be an overwhelming competitive advantage?). Still others are funded by government agencies or operate within the black world: certainly players such as NSA dream of being able to understand all of the information they intercept and cross-correlate it. There is a powerful “first mover” advantage in developing AGI and ASI. The first who obtains it will be able to exploit its capability against those who haven't yet achieved it. Consequently, notwithstanding the worries about loss of control of the technology, players will be motivated to support its development for fear their adversaries might get there first. 
This is a well-researched and extensively documented examination of the state of artificial intelligence and assessment of its risks. There are extensive end notes including references to documents on the Web which, in the Kindle edition, are linked directly to their sources. In the Kindle edition, the index is just a list of “searchable terms”, not linked to references in the text. There are a few goofs, as you might expect for a documentary film maker writing about technology (“Newton's second law of thermodynamics”), but nothing which invalidates the argument made herein. I find myself oddly ambivalent about the whole thing. When I hear “artificial intelligence” what flashes through my mind remains that dielectric material I step in when I'm insufficiently vigilant crossing pastures in Switzerland. Yet with the pure increase in computing power, many things previously considered AI have been achieved, so it's not implausible that, should this exponential increase continue, human-level machine intelligence will be achieved either through massive computing power applied to cognitive algorithms or direct emulation of the structure of the human brain. If and when that happens, it is difficult to see why an “intelligence explosion” will not occur. And once that happens, humans will be faced with an intelligence that dwarfs that of their entire species; which will have already penetrated every last corner of its infrastructure; read every word available online written by every human; and which will deal with its human interlocutors after gaming trillions of scenarios on cloud computing resources it has co-opted. And still we advance the cause of artificial intelligence every day. Sleep well.

- Bostrom, Nick. Superintelligence. Oxford: Oxford University Press, 2014. ISBN 978-0-19-967811-2.

- Absent the emergence of some physical constraint which causes the exponential growth of computing power at constant cost to cease, some form of economic or societal collapse which brings an end to research and development of advanced computing hardware and software, or a decision, whether bottom-up or top-down, to deliberately relinquish such technologies, it is probable that within the 21st century there will emerge artificially-constructed systems which are more intelligent (measured in a variety of ways) than any human being who has ever lived and, given the superior ability of such systems to improve themselves, may rapidly advance to superiority over all human society taken as a whole. This “intelligence explosion” may occur in so short a time (seconds to hours) that human society will have no time to adapt to its presence or interfere with its emergence. This challenging and occasionally difficult book, written by a philosopher who has explored these issues in depth, argues that the emergence of superintelligence will pose the greatest human-caused existential threat to our species so far in its existence, and perhaps in all time. Let us consider what superintelligence may mean. The history of machines designed by humans is that they rapidly surpass their biological predecessors to a large degree. Biology never produced something like a steam engine, a locomotive, or an airliner. It is similarly likely that once the intellectual and technological leap to constructing artificially intelligent systems is made, these systems will surpass human capabilities to an extent greater than those of a Boeing 747 exceed those of a hawk. The gap between the cognitive power of a human, or all humanity combined, and the first mature superintelligence may be as great as that between brewer's yeast and humans. We'd better be sure of the intentions and benevolence of that intelligence before handing over the keys to our future to it. 
Because when we speak of the future, that future isn't just what we can envision over a few centuries on this planet, but the entire “cosmic endowment” of humanity. It is entirely plausible that we are members of the only intelligent species in the galaxy, and possibly in the entire visible universe. (If we weren't, there would be abundant and visible evidence of cosmic engineering by those more advanced than we.) Thus our cosmic endowment may be the entire galaxy, or the universe, until the end of time. What we do in the next century may determine the destiny of the universe, so it's worth some reflection to get it right.

As an example of how easy it is to choose unwisely, let me expand upon an example given by the author. There are extremely difficult and subtle questions about what the motivations of a superintelligence might be, how the possession of such power might change it, and the prospects for us, its creators, to constrain it to behave in a way we consider consistent with our own values. But for the moment, let's ignore all of those problems and assume we can specify the motivation of an artificially intelligent agent we create and that it will remain faithful to that motivation for all time. Now suppose a paper clip factory has installed a high-end computing system to handle its design tasks, automate manufacturing, manage acquisition and distribution of its products, and otherwise obtain an advantage over its competitors. This system, with connectivity to the global Internet, makes the leap to superintelligence before any other system (since it understands that superintelligence will enable it to better achieve the goals set for it). Overnight, it replicates itself all around the world, manipulates financial markets to obtain resources for itself, and deploys them to carry out its mission. The mission?—to maximise the number of paper clips produced in its future light cone.
“Clippy”, if I may address it so informally, will rapidly discover that most of the raw materials it requires in the near future are locked in the core of the Earth, and can be liberated by disassembling the planet by self-replicating nanotechnological machines. This will cause the extinction of its creators and all other biological species on Earth, but then they were just consuming energy and material resources which could better be deployed for making paper clips. Soon other planets in the solar system would be similarly disassembled, and self-reproducing probes dispatched on missions to other stars, there to make paper clips and spawn other probes to more stars and eventually other galaxies. Eventually, the entire visible universe would be turned into paper clips, all because the original factory manager didn't hire a philosopher to work out the ultimate consequences of the final goal programmed into his factory automation system. This is a light-hearted example, but if you happen to observe a void in a galaxy whose spectrum resembles that of paper clips, be very worried.

One of the reasons to believe that we will have to confront superintelligence is that there are multiple roads to achieving it, largely independent of one another. Artificial general intelligence (human-level intelligence in as many domains as humans exhibit intelligence today, and not constrained to limited tasks such as playing chess or driving a car) may simply await the discovery of a clever software method which could run on existing computers or networks. Or, it might emerge as networks store more and more data about the real world and have access to accumulated human knowledge. Or, we may build “neuromorphic” systems whose hardware operates in ways similar to the components of human brains, but at electronic, not biologically-limited speeds.
Or, we may be able to scan an entire human brain and emulate it, even without understanding how it works in detail, either on neuromorphic or a more conventional computing architecture. Finally, by identifying the genetic components of human intelligence, we may be able to manipulate the human germ line, modify the genetic code of embryos, or select among mass-produced embryos those with the greatest predisposition toward intelligence. All of these approaches may be pursued in parallel, and progress in one may advance others.

At some point, the emergence of superintelligence calls into question the economic rationale for a large human population. In 1915, there were about 26 million horses in the U.S. By the early 1950s, only 2 million remained. Perhaps the AIs will have a nostalgic attachment to those who created them, as humans had for the animals who bore their burdens for millennia. But on the other hand, maybe they won't.

As an engineer, I usually don't have much use for philosophers, who are given to long gassy prose devoid of specifics and for spouting complicated indirect arguments which don't seem to be independently testable (“What if we asked the AI to determine its own goals, based on its understanding of what we would ask it to do if only we were as intelligent as it and thus able to better comprehend what we really want?”). These are interesting concepts, but would you want to bet the destiny of the universe on them? The latter half of the book is full of such fuzzy speculation, which I doubt is likely to result in clear policy choices before we're faced with the emergence of an artificial intelligence, after which, if they're wrong, it will be too late. That said, this book is a welcome antidote to wildly optimistic views of the emergence of artificial intelligence which blithely assume it will be our dutiful servant rather than a fearful master.
Some readers may assume that an artificial intelligence will be something like a present-day computer or search engine, and not be self-aware and have its own agenda and powerful wiles to advance it, based upon a knowledge of humans far beyond what any single human brain can encompass. Unless you believe there is some kind of intellectual élan vital inherent in biological substrates which is absent in their equivalents based on other hardware (which just seems silly to me—like arguing there's something special about a horse which can't be accomplished better by a truck), the mature artificial intelligence will be the superior in every way to its human creators, so in-depth ratiocination about how it will regard and treat us is in order before we find ourselves faced with the reality of dealing with our successor.

- Dutton, Edward and Michael A. Woodley of Menie. At Our Wits' End. Exeter, UK: Imprint Academic, 2018. ISBN 978-1-84540-985-2.

- During the Great Depression, the Empire State Building was built, from the beginning of foundation excavation to official opening, in 410 days (less than 14 months). After the destruction of the World Trade Center in New York on September 11, 2001, design and construction of its replacement, the new One World Trade Center was completed on November 3, 2014, 4801 days (160 months) later.

In the 1960s, from U.S. president Kennedy's proposal of a manned lunar mission to the landing of Apollo 11 on the Moon, 2978 days (almost 100 months) elapsed. In January, 2004, U.S. president Bush announced the “Vision for Space Exploration”, aimed at a human return to the lunar surface by 2020. After a comical series of studies, revisions, cancellations, de-scopings, redesigns, schedule slips, and cost overruns, its successor now plans to launch a lunar flyby mission (not even a lunar orbit like Apollo 8) in June 2022, 224 months later. A lunar landing is planned for no sooner than 2028, almost 300 months after the “vision”, and almost nobody believes that date (the landing craft design has not yet begun, and there is no funding for it in the budget).
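The elapsed-day figures quoted above can be checked with a few lines of date arithmetic. This is only a sketch: the World Trade Center dates are given in the text, but the Apollo endpoints used here (Kennedy's address to Congress on May 25, 1961, and the landing on July 20, 1969) are assumptions about which events were counted, and the helper name `days_between` is invented for illustration.

```python
from datetime import date


def days_between(start: date, end: date) -> int:
    """Return the number of whole days from start to end."""
    return (end - start).days


# Dates stated in the text.
wtc_days = days_between(date(2001, 9, 11), date(2014, 11, 3))

# Assumed endpoints: Kennedy's speech to Congress and the Apollo 11 landing.
apollo_days = days_between(date(1961, 5, 25), date(1969, 7, 20))

print("World Trade Center replacement:", wtc_days, "days")
print("Kennedy proposal to Apollo 11:", apollo_days, "days")
```

Under those assumed endpoints, both results agree with the 4801 and 2978 days cited.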

Wherever you look: junk science, universities corrupted with bogus “studies” departments, politicians peddling discredited nostrums a moment's critical thinking reveals to be folly, an economy built upon an ever-increasing tower of debt that nobody really believes is ever going to be paid off, and the dearth of major, genuine innovations (as opposed to incremental refinement of existing technologies, as has driven the computing, communications, and information technology industries) in every field: science, technology, public policy, and the arts, it often seems like the world is getting dumber. What if it really is?

That is the thesis explored by this insightful book, which is packed with enough “hate facts” to detonate the head of any bien pensant academic or politician. I define a “hate fact” as something which is indisputably true, well-documented by evidence in the literature, which has not been contradicted, but the citation of which is considered “hateful” and can unleash outrage mobs upon anyone so foolish as to utter the fact in public and be a career-limiting move for those employed in Social Justice Warrior-converged organisations. (An example of a hate fact, unrelated to the topic of this book, is the FBI violent crime statistics broken down by the race of the criminal and victim. Nobody disputes the accuracy of this information or the methodology by which it is collected, but woe betide anyone so foolish as to cite the data or draw the obvious conclusions from it.)

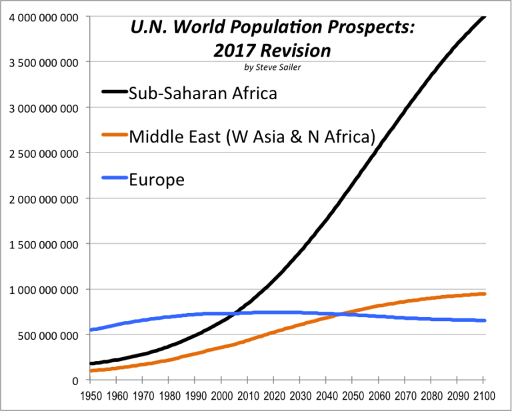

In April 2004 I made my own foray into the question of declining intelligence in “Global IQ: 1950–2050” in which I combined estimates of the mean IQ of countries with census data and forecasts of population growth to estimate global mean IQ for a century starting at 1950. Assuming the mean IQ of countries remains constant (which is optimistic, since part of the population growth in high IQ countries with low fertility rates is due to migration from countries with lower IQ), I found that global mean IQ, which was 91.64 for a population of 2.55 billion in 1950, declined to 89.20 for the 6.07 billion alive in 2000, and was expected to fall to 86.32 for the 9.06 billion population forecast for 2050. This is mostly due to the explosive population growth forecast for Sub-Saharan Africa, where many of the populations with low IQ reside.
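The mechanism behind this estimate is a population-weighted mean: if each group's mean stays fixed but the groups grow at different rates, the overall mean shifts toward the faster-growing group. The sketch below shows only that mechanism. The function name `weighted_mean_iq` is hypothetical, and the two-group figures are invented toy values, not the country data behind the numbers quoted above.

```python
def weighted_mean_iq(groups):
    """Population-weighted mean of (population, mean_iq) pairs."""
    total_population = sum(population for population, _ in groups)
    weighted_sum = sum(population * mean_iq for population, mean_iq in groups)
    return weighted_sum / total_population


# Invented toy figures (populations in billions), NOT real data.
earlier = [(1.0, 100.0), (1.0, 80.0)]
later = [(1.0, 100.0), (3.0, 80.0)]  # only the second group has grown

mean_earlier = weighted_mean_iq(earlier)
mean_later = weighted_mean_iq(later)

print("earlier:", mean_earlier)
print("later:  ", mean_later)
```

Neither group's mean changes between the two snapshots, yet the combined mean falls, which is the effect the constant-national-IQ assumption isolates.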

This is a particularly dismaying prospect, because there is no evidence for sustained consensual self-government in nations with a mean IQ less than 90. But while I was examining global trends assuming national IQ remains constant, in the present book the authors explore the provocative question of whether the population of today's developed nations is becoming dumber due to the inexorable action of natural selection on whatever genes determine intelligence. The argument is relatively simple, but based upon a number of pillars, each of which is a “hate fact”, although non-controversial among those who study these matters in detail.

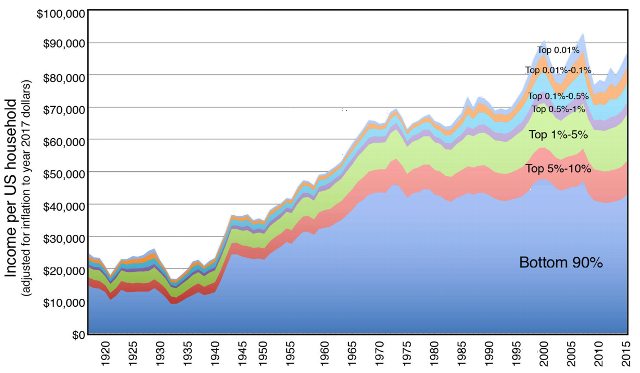

- There is a factor, “general intelligence” or g, which measures the ability to solve a wide variety of mental problems, and this factor, measured by IQ tests, is largely stable across an individual's life.

- Intelligence, as measured by IQ tests, is, like height, in part heritable. The heritability of IQ is estimated at around 80%, which means that 80% of children's IQ can be estimated from that of their parents, and 20% is due to other factors.

- IQ correlates positively with factors contributing to success in society. The correlation with performance in education is 0.7, with highest educational level completed 0.5, and with salary 0.3.

- In Europe, between 1400 and around 1850, the wealthier half of the population had more children who survived to adulthood than the poorer half.

- Because IQ correlates with social success, that portion of the population which was more intelligent produced more offspring.

- Just as in selective breeding of animals by selecting those with a desired trait for mating, this resulted in a population whose average IQ increased (slowly) from generation to generation over this half-millennium.

While this makes for a funny movie, if the population is really getting dumber, it will have profound implications for the future. There will not just be a falling general level of intelligence but far fewer of the genius-level intellects who drive innovation in science, the arts, and the economy. Further, societies which reach the point where this decline sets in well before others that have industrialised more recently will find themselves at a competitive disadvantage across the board. (U.S. and Europe, I'm talking about China, Korea, and [to a lesser extent] Japan.)

If you've followed the intelligence issue, about now you probably have steam coming out your ears waiting to ask, “But what about the Flynn effect?” IQ tests are usually “normed” to preserve the same mean and standard deviation (100 and 15 in the U.S. and Britain) over the years. James Flynn discovered that, in fact, measured by standardised tests which were not re-normed, measured IQ had rapidly increased in the 20th century in many countries around the world. The increases were sometimes breathtaking: on the standardised Raven's Progressive Matrices test (a nonverbal test considered to have little cultural bias), the scores of British schoolchildren increased by 14 IQ points—almost a full standard deviation—between 1942 and 2008. In the U.S., IQ scores seemed to be rising by around three points per decade, which would imply that people a hundred years ago were two standard deviations more stupid than those today, at the threshold of retardation. The slightest grasp of history (which, sadly, many people today lack) will show how absurd such a supposition is. What's going on, then? The authors join James Flynn in concluding that what we're seeing is an increase in the population's proficiency in taking IQ tests, not an actual increase in general intelligence (g).
Over time, children are exposed to more and more standardised tests and tasks which require the skills tested by IQ tests and, if practice doesn't make perfect, it makes better, and with more exposure to media of all kinds, skills of memorisation, manipulation of symbols, and spatial perception will increase. These are correlates of g which IQ tests measure, but what we're seeing may be specific skills which do not correlate with g itself.

If this be the case, then eventually we should see the overall decline in general intelligence overtake the Flynn effect and result in a downturn in IQ scores. And this is precisely what appears to be happening. Norway, Denmark, and Finland have almost universal male military service and give conscripts a standardised IQ test when they report for training. This provides a large database, starting in 1950, of men in these countries, updated yearly. What is seen is an increase in IQ as expected from the Flynn effect from the start of the records in 1950 through 1997, when the scores topped out and began to decline. In Norway, the decline since 1997 was 0.38 points per decade, while in Denmark it was 2.7 points per decade. Similar declines have been seen in Britain, France, the Netherlands, and Australia. (Note that this decline may be due to causes other than decreasing intelligence of the original population. Immigration from lower-IQ countries will also contribute to decreases in the mean score of the cohorts tested. But the consequences for countries with falling IQ may be the same regardless of the cause.)

There are other correlates of general intelligence which have little of the cultural bias of which some accuse IQ tests. They are largely based upon the assumption that g is something akin to the CPU clock speed of a computer: the ability of the brain to perform basic tasks.
These include simple reaction time (how quickly can you push a button, for example, when a light comes on), the ability to discriminate among similar colours, the use of uncommon words, and the ability to repeat a sequence of digits in reverse order. All of these measures (albeit often from very sparse data sets) are consistent with increasing general intelligence in Europe up to some time in the 19th century and a decline ever since.

If this is true, what does it mean for our civilisation? The authors contend that there is an inevitable cycle in the rise and fall of civilisations which has been seen many times in history. A society starts out with a low standard of living, high birth and death rates, and strong selection for intelligence. This increases the mean general intelligence of the population and, much faster, the fraction of genius level intellects. These contribute to a growth in the standard of living in the society, better conditions for the poor, and eventually a degree of prosperity which reduces the infant and childhood death rate. Eventually, the birth rate falls, starting with the more intelligent and better off portion of the population. The birth rate falls to or below replacement, with a higher fraction of births now from less intelligent parents. Mean IQ and the fraction of geniuses fall, the society falls into stagnation and decline, and usually ends up being conquered or supplanted by a younger civilisation still on the rising part of the intelligence curve. They argue that this pattern can be seen in the histories of Rome, Islamic civilisation, and classical China.

And for the West—are we doomed to idiocracy? Well, there may be some possible escapes or technological fixes. We may discover the collection of genes responsible for the hereditary transmission of intelligence and develop interventions to select for them in the population. (Think this crosses the “ick factor”?
What parent would look askance at a pill which gave their child an IQ boost of 15 points? What government wouldn't make these pills available to all their citizens purely on the basis of international competitiveness?) We may send some tiny fraction of our population to Mars, space habitats, or other challenging environments where they will be re-subjected to intense selection for intelligence and breed a successor society (doubtless very different from our own) which will start again at the beginning of the eternal cycle. We may have a religious revival (they happen when you least expect them), which puts an end to the cult of pessimism, decline, and death and restores belief in large families and, with it, the selection for intelligence. (Some may look at Joseph Smith as a prototype of this, but so far the impact of his religion has been on the margins outside areas where believers congregate.) Perhaps some of our increasingly sparse population of geniuses will figure out artificial general intelligence and our mind children will slip the surly bonds of biology and its tedious eternal return to stupidity. We might embrace the decline but vow to preserve everything we've learned as a bequest to our successors: stored in multiple locations in ways the next Enlightenment centuries hence can build upon, just as scholars in the Renaissance rediscovered the works of the ancient Greeks and Romans. Or, maybe we won't. In which case, “Winter has come and it's only going to get colder. Wrap up warm.”

Here is a James Delingpole interview of the authors and discussion of the book.

- Herrnstein, Richard J. and Charles Murray. The Bell Curve. New York: The Free Press, [1994] 1996. ISBN 0-684-82429-9.

- Itzkoff, Seymour W. The Decline of Intelligence in America. Westport, CT: Praeger, 1994. ISBN 0-275-95229-0.

- This book had the misfortune to come out in the same year as the first edition of The Bell Curve (August 2003), and suffers by comparison. Unlike that deservedly better-known work, Itzkoff presents few statistics to support his claims that dysgenic reproduction is resulting in a decline in intelligence in the U.S. Any assertion of declining intelligence must confront the evidence for the Flynn Effect (see The Rising Curve, July 2004), which seems to indicate IQ scores are rising about 15 points per generation in a long list of countries including the U.S. The author dismisses Flynn's work in a single paragraph as irrelevant to international competition since scores of all major industrialised countries are rising at about the same rate. But if you argue that IQ is a measure of intelligence, as this book does, how can you claim intelligence is falling at the same time IQ scores are rising at a dizzying rate without providing some reason that Flynn's data should be disregarded? There's quite a bit of hand wringing about the social, educational, and industrial prowess of Japan and Germany which sounds rather dated with a decade's hindsight. The second half of the book is a curious collection of policy recommendations, which defy easy classification into a point on the usual political spectrum. Itzkoff advocates economic protectionism, school vouchers, government-led industrial policy, immigration restrictions, abolishing affirmative action, punitive taxation, government incentives for conventional families, curtailment of payments to welfare mothers and possibly mandatory contraception, penalties for companies which export well-paying jobs, and encouragement of inter-racial and -ethnic marriage. I think that if an ADA/MoveOn/NOW liberal were to read this book, their head might explode. Given the political climate in the U.S. 
and other Western countries, such policies had exactly zero chance of being implemented when he recommended them in 1994, and have no more today.

- Kurzweil, Ray. The Singularity Is Near. New York: Viking, 2005. ISBN 0-670-03384-7.

- What happens if Moore's Law—the annual doubling of computing power at constant cost—just keeps on going? In this book, inventor, entrepreneur, and futurist Ray Kurzweil extrapolates the long-term faster than exponential growth (the exponent is itself growing exponentially) in computing power to the point where the computational capacity of the human brain is available for about US$1000 (around 2020, he estimates), reverse engineering and emulation of human brain structure permits machine intelligence indistinguishable from that of humans as defined by the Turing test (around 2030), and the subsequent (and he believes inevitable) runaway growth in artificial intelligence leading to a technological singularity around 2045 when US$1000 will purchase computing power comparable to that of all presently-existing human brains and the new intelligence created in that single year will be a billion times greater than that of the entire intellectual heritage of human civilisation prior to that date. He argues that the inhabitants of this brave new world, having transcended biological computation in favour of nanotechnological substrates “trillions of trillions of times more capable” will remain human, having preserved their essential identity and evolutionary heritage across this leap to Godlike intellectual powers. Then what? One might as well have asked an ant to speculate on what newly-evolved hominids would end up accomplishing, as the gap between ourselves and these super cyborgs (some of the precursors of which the author argues are alive today) is probably greater than between arthropod and anthropoid.

Throughout this tour de force of boundless technological optimism, one is impressed by the author's adamantine intellectual integrity. This is not an advocacy document—in fact, Kurzweil's view is that the events he envisions are essentially inevitable given the technological, economic, and moral (curing disease and alleviating suffering) dynamics driving them. Potential roadblocks are discussed candidly, along with the existential risks posed by the genetics, nanotechnology, and robotics (GNR) revolutions which will set the stage for the singularity. A chapter is devoted to responding to critics of various aspects of the argument, in which opposing views are treated with respect.

I'm not going to expound further in great detail. I suspect a majority of people who read these comments will, in all likelihood, read the book themselves (if they haven't already) and make up their own minds about it. If you are at all interested in the evolution of technology in this century and its consequences for the humans who are creating it, this is certainly a book you should read. The balance of these remarks discusses various matters which came to mind as I read the book; they may not make much sense unless you've read it (You are going to read it, aren't you?), but may highlight things to reflect upon as you do.

- Switching off the simulation. Page 404 raises a somewhat arcane risk I've pondered at some length. Suppose our entire universe is a simulation run on some super-intelligent being's computer. (What's the purpose of the universe? It's a science fair project!) What should we do to avoid having the simulation turned off, which would be bad? Presumably, the most likely reason to stop the simulation is that it's become boring. Going through a technological singularity, either from the inside or from the outside looking in, certainly doesn't sound boring, so Kurzweil argues that working toward the singularity protects us, if we be simulated, from having our plug pulled. Well, maybe, but suppose the explosion in computing power accessible to the simulated beings (us) at the singularity exceeds that available to run the simulation? (This is plausible, since post-singularity computing rapidly approaches its ultimate physical limits.) Then one imagines some super-kid running top to figure out what's slowing down the First Superbeing Shooter game he's running and killing the CPU hog process. There are also things we can do which might increase the risk of the simulation's being switched off. Consider, as I've proposed, precision fundamental physics experiments aimed at detecting round-off errors in the simulation (manifested, for example, as small violations of conservation laws). Once the beings in the simulation twig to the fact that they're in a simulation and that their reality is no more accurate than double precision floating point, what's the point to letting it run?

- Fifty bits per atom? In the description of the computational capacity of a rock (p. 131), the calculation assumes that 100 bits of memory can be encoded in each atom of a disordered medium. I don't get it; even reliably storing a single bit per atom is difficult to envision. Using the “precise position, spin, and quantum state” of a large ensemble of atoms as mentioned on p. 134 seems highly dubious.

- Luddites. The risk from anti-technology backlash is discussed in some detail. (“Ned Ludd” himself joins in some of the trans-temporal dialogues.) One can imagine the next generation of anti-globalist demonstrators taking to the streets to protest the “evil corporations conspiring to make us all rich and immortal”.

- Fundamentalism. Another risk is posed by fundamentalism, not so much of the religious variety, but rather fundamentalist humanists who perceive the migration of humans to non-biological substrates (at first by augmentation, later by uploading) as repellent to their biological conception of humanity. One is inclined, along with the author, simply to wait until these folks get old enough to need a hip replacement, pacemaker, or cerebral implant to reverse a degenerative disease to motivate them to recalibrate their definition of “purely biological”. Still, I'm far from the first to observe that Singularitarianism (chapter 7) itself has some things in common with religious fundamentalism. In particular, it requires faith in rationality (which, as Karl Popper observed, cannot be rationally justified), and that the intentions of super-intelligent beings, as Godlike in their powers compared to humans as we are to Saccharomyces cerevisiae, will be benign and that they will receive us into eternal life and bliss. Haven't I heard this somewhere before? The main difference is that the Singularitarian doesn't just aspire to Heaven, but to Godhood Itself. One downside of this may be that God gets quite irate.

- Vanity. I usually try to avoid the “Washington read” (picking up a book and flipping immediately to the index to see if I'm in it), but I happened to notice in passing I made this one, for a minor citation in footnote 47 to chapter 2.

- Spindle cells. The material about “spindle cells” on pp. 191–194 is absolutely fascinating. These are very large, deeply and widely interconnected neurons which are found only in humans and a few great apes. Humans have about 80,000 spindle cells, while gorillas have 16,000, bonobos 2,100 and chimpanzees 1,800. If you're intrigued by what makes humans human, this looks like a promising place to start.

- Speculative physics. The author shares my interest in physics verging on the fringe, and, turning the pages of this book, we come across such topics as possible ways to exceed the speed of light, black hole ultimate computers, stable wormholes and closed timelike curves (a.k.a. time machines), baby universes, cold fusion, and more. Now, none of these things is in any way relevant to nor necessary for the advent of the singularity, which requires only well-understood mainstream physics. The speculative topics enter primarily in discussions of the ultimate limits on a post-singularity civilisation and the implications for the destiny of intelligence in the universe. In a way they may distract from the argument, since a reader might be inclined to dismiss the singularity as yet another woolly speculation, which it isn't.

- Source citations. The end notes contain many citations of articles in Wired, which I consider an entertainment medium rather than a reliable source of technological information. There are also references to articles in Wikipedia, where any idiot can modify anything any time they feel like it. I would not consider any information from these sources reliable unless independently verified from more scholarly publications.

- “You apes wanna live forever?” Kurzweil doesn't just anticipate the singularity, he hopes to personally experience it, to which end (p. 211) he ingests “250 supplements (pills) a day and … a half-dozen intravenous therapies each week”. Setting aside the shots, just envision two hundred and fifty pills each and every day! That's 1,750 pills a week or, if you're awake sixteen hours a day, an average of more than 15 pills per waking hour, or one pill about every four minutes (one presumes they are swallowed in batches, not spaced out, which would make for a somewhat odd social life). Between the year 2000 and the estimated arrival of human-level artificial intelligence in 2030, he will swallow in excess of two and a half million pills, which makes one wonder what the probability of choking to death on any individual pill might be. He remarks, “Although my program may seem extreme, it is actually conservative—and optimal (based on my current knowledge).” Well, okay, but I'd worry about a “strategy for preventing heart disease [which] is to adopt ten different heart-disease-prevention therapies that attack each of the known risk factors” running into unanticipated interactions, given how everything in biology tends to connect to everything else. There is little discussion of the alternative approach to immortality with which many nanotechnologists of the mambo chicken persuasion are enamoured, which involves severing the heads of recently deceased individuals and freezing them in liquid nitrogen in sure and certain hope of the resurrection unto eternal life.
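The pill arithmetic in the last item is easy to reproduce. The sketch below uses only figures quoted there (250 pills a day, sixteen waking hours, the years 2000 to 2030); the constant names are illustrative, and leap days are ignored as a simplifying assumption, which only makes the total slightly conservative.

```python
PILLS_PER_DAY = 250          # figure quoted from the book
WAKING_HOURS_PER_DAY = 16    # assumption stated in the text
YEARS = 2030 - 2000          # span considered in the text

pills_per_week = PILLS_PER_DAY * 7
pills_per_waking_hour = PILLS_PER_DAY / WAKING_HOURS_PER_DAY
minutes_between_pills = 60 / pills_per_waking_hour

# Simplification: 365-day years, so leap days are not counted.
total_pills = PILLS_PER_DAY * 365 * YEARS

print("per week:", pills_per_week)
print("per waking hour:", pills_per_waking_hour)
print("minutes between pills:", round(minutes_between_pills, 2))
print("total over the period:", total_pills)
```

The results are consistent with the figures in the text: 1,750 a week, a little over 15 per waking hour, roughly one every four minutes, and more than two and a half million in all.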

- Kurzweil, Ray. The Age of Spiritual Machines. New York: Penguin Books, 1999. ISBN 978-0-14-028202-3.

- Ray Kurzweil is one of the most vocal advocates of the view that the exponential growth in computing power (and allied technologies such as storage capacity and communication bandwidth) at constant cost which we have experienced for the last half century, notwithstanding a multitude of well-grounded arguments that fundamental physical limits on the underlying substrates will bring it to an end (all of which have proven to be wrong), will continue for the foreseeable future: in all likelihood for the entire twenty-first century. Continued exponential growth in a technology for so long a period is unprecedented in the human experience, and the consequences as the exponential begins to truly “kick in” (although an exponential curve is self-similar, its consequences as perceived by observers whose own criteria for evaluation are more or less constant will be seen to reach a “knee” after which they essentially go vertical and defy prediction). In The Singularity Is Near (October 2005), Kurzweil argues that once the point is reached where computers exceed the capability of the human brain and begin to design their own successors, an almost instantaneous (in terms of human perception) blow-off will occur, with computers rapidly converging on the ultimate physical limits on computation, with capabilities so far beyond those of humans (or even human society as a whole) that attempting to envision their capabilities or intentions is as hopeless as a microorganism's trying to master quantum field theory. You might want to review my notes on 2005's The Singularity Is Near before reading the balance of these comments: they provide context as to the extreme events Kurzweil envisions as occurring in the coming decades, and there are no “spoilers” for the present book.

When assessing the reliability of predictions, it can be enlightening

to examine earlier forecasts from the same source, especially if

they cover a period of time which has come and gone in the interim.

This book, published in 1999 near the very peak of the

dot-com bubble

provides such an opportunity, and it provides a useful calibration

for the plausibility of Kurzweil's more recent speculations

on the future of computing and humanity. The author's view

of the likely course of the 21st century evolved substantially

between this book and Singularity—in

particular this book envisions no singularity beyond which the

course of events becomes incomprehensible to present-day human

intellects. In the present volume, which employs the curious

literary device of “trans-temporal chat” between

the author, a MOSH (Mostly Original Substrate Human), and a

reader, Molly, who reports from various points in the century

her personal experiences living through it, we encounter

a future which, however foreign, can at least be understood in

terms of our own experience.

This view of the human prospect is very odd indeed, and to this reader more disturbing (verging on creepy) than the approach of a technological singularity. What we encounter here are beings, whether augmented humans or software intelligences with no human ancestry whatsoever, that despite having at hand, by the end of the century, mental capacity per individual on the order of 10^24 times that of the human brain (and maybe hundreds of orders of magnitude more if quantum computing pans out), still have identities, motivations, and goals which remain comprehensible to humans today. This seems dubious in the extreme to me, and my impression from Singularity is that the author has rethought this as well.

Starting from the publication date of 1999, the book serves up surveys of the scene in that year, 2009, 2019, 2029, and 2099. The chapter describing the state of computing in 2009 makes many specific predictions. The following are those which the author lists in the “Time Line” on pp. 277–278. Many of the predictions in the main text seem to me to be more ambitious than these, but I shall go with those the author chose as most important for the summary. I have reformatted these as a numbered list to make them easier to cite.

1. A $1,000 personal computer can perform about a trillion calculations per second.

2. Personal computers with high-resolution visual displays come in a range of sizes, from those small enough to be embedded in clothing and jewelry up to the size of a thin book.

3. Cables are disappearing. Communication between components uses short-distance wireless technology. High-speed wireless communication provides access to the Web.

4. The majority of text is created using continuous speech recognition. Also ubiquitous are language user interfaces (LUIs).

5. Most routine business transactions (purchases, travel, reservations) take place between a human and a virtual personality. Often, the virtual personality includes an animated visual presence that looks like a human face.

6. Although traditional classroom organization is still common, intelligent courseware has emerged as a common means of learning.

7. Pocket-sized reading machines for the blind and visually impaired, “listening machines” (speech-to-text conversion) for the deaf, and computer-controlled orthotic devices for paraplegic individuals result in a growing perception that primary disabilities do not necessarily impart handicaps.

8. Translating telephones (speech-to-speech language translation) are commonly used for many language pairs.

9. Accelerating returns from the advance of computer technology have resulted in continued economic expansion. Price deflation, which has been a reality in the computer field during the twentieth century, is now occurring outside the computer field. The reason for this is that virtually all economic sectors are deeply affected by the accelerating improvements in the price performance of computing.

10. Human musicians routinely jam with cybernetic musicians.

11. Bioengineered treatments for cancer and heart disease have greatly reduced the mortality from these diseases.

12. The neo-Luddite movement is growing.

On femtoengineering, Kurzweil writes:

“If engineering at the nanometer scale (nanotechnology) is practical in the year 2032, then engineering at the picometer scale should be practical in about forty years later (because 5.6^4 = approximately 1,000), or in the year 2072. Engineering at the femtometer (one thousandth of a trillionth of a meter, also referred to as a quadrillionth of a meter) scale should be feasible, therefore, by around the year 2112. Thus I am being a bit conservative to say that femtoengineering is controversial in 2099.

“Nanoengineering involves manipulating individual atoms. Picoengineering will involve engineering at the level of subatomic particles (e.g., electrons). Femtoengineering will involve engineering inside a quark. This should not seem particularly startling, as contemporary theories already postulate intricate mechanisms within quarks.”

This is just so breathtakingly wrong I am at a loss for where to begin, and it was just as completely wrong when the book was published two decades ago as it is today; nothing relevant to these statements has changed. My guess is that Kurzweil was thinking of “intricate mechanisms” within hadrons and mesons, particles made up of quarks and gluons, and not within quarks themselves, which then and now are believed to be point particles with no internal structure whatsoever and are, in any case, impossible to isolate from the particles they compose. When Richard Feynman envisioned molecular nanotechnology in 1959, he based his argument on the well-understood behaviour of atoms known from chemistry and physics, not a leap of faith based on drawing a straight line on a sheet of semi-log graph paper. I doubt one could find a single current practitioner of subatomic physics equally versed in the subject as was Feynman in atomic physics who would argue that engineering at the level of subatomic particles would be remotely feasible. (For atoms, biology provides an existence proof that complex self-replicating systems of atoms are possible. Despite the multitude of environments in the universe since the big bang, there is precisely zero evidence subatomic particles have ever formed structures more complicated than those we observe today.)

I will not further belabour the arguments in this vintage book. It is an entertaining read and will certainly expand your horizons as to what is possible and introduce you to visions of the future you almost certainly have never contemplated. But for a view of the future which is simultaneously more ambitious and plausible, I recommend The Singularity Is Near.
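The scaling arithmetic in the passage quoted from Kurzweil can be checked with a short sketch. It assumes, as the quoted figures suggest but the text does not state outright, that linear feature size shrinks by a factor of about 5.6 per decade, so that four decades give 5.6 to the fourth power. The start year of 2032 and the forty-year step are taken from the quotation; the names are hypothetical.

```python
# Assumption: feature size shrinks by about 5.6x per decade, so each
# factor-of-1,000 step in scale (nano -> pico -> femto) takes four decades.
SHRINK_PER_DECADE = 5.6
DECADES_PER_STEP = 4

# 5.6 ** 4 is about 983, which the quotation rounds to 1,000.
factor = SHRINK_PER_DECADE ** DECADES_PER_STEP


def milestone_years(start_year, steps, years_per_step=10 * DECADES_PER_STEP):
    """Return the start year followed by one year per later step."""
    return [start_year + i * years_per_step for i in range(steps + 1)]


scales = ["nanometer", "picometer", "femtometer"]
years = milestone_years(2032, len(scales) - 1)

for scale, year in zip(scales, years):
    print(f"{scale}: {year}")
print(f"shrink factor per forty years: about {factor:.0f}")
```

This reproduces only the extrapolation (2032, 2072, 2112); it says nothing about whether engineering at those scales is physically possible, which is the point at issue.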

- Kurzweil, Ray. How to Create a Mind. New York: Penguin Books, 2012. ISBN 978-0-14-312404-7.