I believe that the perceptions expressed herein point the way to the next generation of hugely successful computer products. The fact that I have been totally unsuccessful in explaining these concepts in numerous forums only reinforces my belief that they will eventually be considered self-evident.

The failure of personal computers to penetrate the office, engineering, and home markets as rapidly as many observers expected is a mystery to many in the industry. This failure is argued to be the result of a dissonance between the evolution of computer software toward placing decisions directly on the user, and the general trend in society toward services, intermediation, and division of labour. Suggestions of types of software products which are in harmony with the overall trends are made.

You will have robot `slaves' by 1965.

— Mechanix Illustrated, cover, 1955

Whatever happened to the computer revolution? If you asked almost anybody in the computer business in the 1960's and early 1970's to project the consequences of the price of computing power falling four orders of magnitude, they would almost universally have seen the introduction of computers into almost every aspect of life. Clearly, every home would have one or more computers, and computers would be used to communicate, write, read, draw, shop, bank, and perform numerous other tasks.

This was the “computer revolution”: sales of computers in the hundreds of millions, with their diffusion throughout society making an impact on the same scale as that of the automobile, television, or the telephone. This forecast was so universally shared that all of the major players in the game bet on it and polished their strategies to end up with a chunk of the market. But in this revolution, something funny happened on the way to the barricades.

After an initial burst, sales of computers into the home market have slowed dramatically, to the extent that the companies that concentrate on home computers and software are considered pariahs. Penetration of computers into the office has been much slower than many projections, and the promises of networks and the “universal workstation” remain largely unfulfilled. The current dismal climate in the semiconductor and computer industries is a consequence of this. This despite the fact that the computer revolution has technically succeeded; now for a price affordable by virtually every family in the country, and by every business for every employee, one can buy a computer that can be used for communication, reading, writing, drawing, shopping, banking, and more. But they aren't selling.

“What You See Is What You Get” (abbreviated WYSIWYG, pronounced “wizzy-wig”) has become the metaphor for most computer interaction today. It first became popular with screen-oriented text editing programs, became the accepted standard in word processing, and has been extended to graphics as exemplified by paint programs such as MacPaint and CAD programs such as AutoCAD. Today, this interface is being applied to integrating publication-quality text and graphics in products such as Interleaf and PageMaker, and is the universal approach to desktop publishing. Attempts to extend the WYSIWYG concept to encompass the user's entire interaction with a computer date from the Smalltalk system, and today are exemplified by icon based interfaces such as the Macintosh operating system and GEM. The generalisation of WYSIWYG to more abstract applications is sometimes referred to as a “Direct Manipulation Interface” (DMI).

The evolution of an economy from one dominated by agriculture and extractive industries, through industrialisation, to one dominated by service industries is one of the most remarked upon events of our age. Our economy is moving from one that prospers by doing things to stuff to one in which we do stuff to each other. Not only does everybody believe this is happening, it really is happening. For example, walk through any major city in the US and observe that there are four banks on every street corner. If this were not a service economy, there would be, say, four machine shops.

As numerous pundits have pointed out, this evolution is natural and is not a cause for concern. We will all become so rich selling innovative financial services, fast food, and overnight delivery services to each other that we will be able to buy all the computers from Japan, televisions from Korea, and steel from Bulgaria that we need. That makes sense, right?

Let's look back into the distant past and see how people's concepts of how computers would be used have changed. Originally (I'm talking 1950's) it was assumed that a systems analyst would specify how the computer would be applied to a problem. This specification would be given to a programmer, who would lay out the control and data flow to implement the system. A coder would then translate the flowchart into computer code and debug the system, calling on the programmer and analyst as required. The coder's program, written on paper, would be encoded for the machine by a keypunch operator. After the program was placed into operation, it would be run by the computer operator, with data prepared by an operations department.

Well before 1960, the development of assemblers and compilers (and the realisation that the division was a bad idea in the first place) collapsed the programming and coding jobs into one. The distinction between programmer and systems analyst had become mostly one of job title, pay, and prestige rather than substance in many organisations by 1970. The adoption of timesharing in the 1960's accelerated the combination of many of the remaining jobs. Now, as any computer user could type on his own keyboard, the rationale for data entry departments began to disappear (while concurrently, development of optical character recognition and bar code equipment reduced the need for manual entry of bulk data). In the 1970's, one person frequently designed a program, typed it in, debugged it, ran it, entered data into it, and used the results.

The concurrent fall in the price of computer hardware contributed to this trend. The division of labour which developed when computers were used in large organisations was impossible and silly when a computer was purchased by a 5 man engineering department. This led to the “every man a computer user, every man a programmer” concept that lies at the root of the “computer literacy” movement.

The skills required to use a computer were still very high, and the areas in which computers were applied were very specialised and generally concentrated in technical areas where users were willing to master new skills to improve their productivity.

Word processing (and later the spreadsheet) changed all that. With the widespread adoption of the CRT, it was possible to build a user interface that immediately reflected the user's interaction with the computer. With this interface it became possible to build programs that could be mastered in far less time by users with no direct computer-related skills. The personal computer accelerated this process. Now a financial analyst could directly build and operate a spreadsheet model, ask “what if” questions, and obtain rapid responses. Now an individual could enter, correct, and format documents which would be printed with quality equal to that of the best office typewriters.

The success of these two applications, which have accounted for the purchase of a large percentage of the desktop computers sold to date, led to attempts to make other applications as transparent to the user and thus as accessible to mass markets. Applications which paralleled word processing (such as CAD or raster-based drawing programs) were relatively easy to build and have enjoyed some success. Lotus attempted in 1-2-3 and Symphony to embed other functions within the metaphor of a spreadsheet, but one might read the market as saying that 1-2-3 was about as far as the sheet would spread.

The Macintosh user interface represents the most significant effort to date to create a set of diverse applications that share a common set of operating conventions. Certainly the effort has gone much farther toward that goal than any which preceded it, but the limited success of the product in the marketplace may indicate that either the common user interface does not extend deep enough into the products to be of real benefit to the user, or that the user interface is still too complex. (On the other hand, it may indicate that people don't want a 23 cm screen, or that Apple Computer should be named “IBM”).

Today, desktop publishing systems are placing far more control and power in the user's hands than before. Now the user can enter text, then control its typography, layout, insertion of illustrations, and every other parameter controlling the final output. We see document filing and retrieval systems which have interfaces which emulate a physical library. Engineering programs are being built which graphically simulate physical systems and allow dynamic interaction with computer-simulated objects. We begin to hear the phrase, “this job is 5% solving the problem and 95% user interface”.

It is currently almost an axiom of the industry that “people won't buy computers in large quantities until we make them easier to use”. This is often used to justify the conclusion, “we must invest far more work in user interfaces, and make our programs more interactive and responsive to the user”. I would like to explore whether this really follows.

I would like to suggest that the present state of computer user interfaces is the outgrowth of the postwar “do it yourself” culture, which peaked around 1960, and is not consonant with the service economy which began to expand rapidly around that date.

Consider how a business letter used to be written. The author would scribble some notes on a piece of paper, or blither something into a dictating machine. A secretary would translate the intent into English and type a draft of it. The author would then review it, mark up desired changes (which might be as general as “can you soften up the second paragraph so it doesn't seem so much like an ultimatum?”), and receive a final draft, which was signed and returned to be mailed. No wonder, as IBM pointed out in the early 60's, such a letter cost about $4 to prepare.

Now let's peek into the “office of the future”. The executive's 50cm screen is festooned with icons. Picking the one with the quill pen opens up a word processor. The document is entered, pulling down menus to select appropriate fonts for the heading, address, and body. After the text is entered, a few more menu picks right justify the text. Dragging an icon of a schoolmarm into the window performs spelling checking and correction, allowing the user to confirm unusual words. The user then drags a rubber stamp icon into the window and places a digitised signature at the bottom of the letter (remember, you saw it here first). Finally, popping up the “send” menu allows the executive to instruct the system to send the letter to the address in the heading (electronically if possible, otherwise by printing a copy on the laser printer in the mailroom, which will stuff it into a window envelope and mail it), and to file a copy under a subtopic specified by entering it in a dialogue box.

As we astute observers of the computer scene know, this is just around the corner and “can be implemented today on existing systems as soon as the users are ready to buy it”. And what an astounding breakthrough it will be. An executive, who previously only needed to know about how to run a multi-billion dollar multinational corporation, analyse market trends, develop financial strategies, thread around governmental constraints, etc., now gets to be a typist, proofreader, typographer, mail clerk, and filing clerk. That makes sense, right?

Those involved in computing have discovered that doing it themselves is a far more productive way of getting things done than telling others to do it. They then generalise this to all people and all tasks. This may be a major error.

As we have developed user interfaces, we have placed the user in far more direct control of the details of his job than before. As this has happened, we have devolved upon the user all of the detailed tasks formerly done by others, but have provided few tools to automate these tasks. In the 1960's, engineering curricula largely dropped mechanical drafting requirements in favour of “graphical communication” courses. The rationale for this was that engineers did not make final drawings on the board, but rather simply needed to be able to read drawings and communicate a design to a drafter who would actually make the working drawings.

Now we're in the position of telling people, “you don't need all of those drafters. With CAD, your engineers can make perfectly accurate final drawings as they design”. Yeah, but what do they know about drafting? And what help does the computer give them? The STRETCH command?

I have always taken personal pride in the statement “I don't ask anybody else to do my shit work for me”. I am proud that I do my own typing, formatting, printing, and mailing. And as I have often remarked, I consider that I am better at doing these things than many of those paid full time to do them. I think that this is an outgrowth of the general “do it yourself” philosophy which goes all the way back to “self reliance”. Although I often make the argument based on efficiency (I can do it faster by myself and get it right the first time, than I can tell somebody how to do it, redo it, re-redo it, and so on), the feeling goes much deeper than mere efficiency, and I think it is shared by many other computer types. I think that this is because those in the computer business are largely drawn from the do it yourself tinkerer culture. I do not have statistics, but I'll bet that the incidence of home workshops is many times greater among computer people than the general populace. Computers have, I think, largely absorbed the attentions of the do it yourself culture. Notice that the decline of the home-tinkering magazines (Mechanix Illustrated, Popular Science, Popular Mechanics) which had been fairly stable in content since the 1930s occurred coincident with the personal computer explosion and the appearance of the dozens of PC-related magazines.

As a result, we build tools which place more and more direct control in the hands of the user, and allow him to exert more and more power over the things he does. We feel that telling somebody else to do something for us is somehow wrong.

This shared set of assumptions is carried over into the way we design our computer-based tools. We build power tools, not robot carpenters. We feel it is better to instruct the computer in the minutiae of the task we're doing than build a tool that will go off with some level of autonomy and get a job done. So our belief that people should not be subservient to our desires may lead us to build software that isn't subservient. But that's what computers are for!

I'd ask you to consider: in a society where virtually nobody fixes their own television sets any more, where the percentage of those repairing their own cars is plummeting, where people go out and pay for pre-popped popcorn, and where the value added by services accounts for a larger and larger percentage of the economy each year, shouldn't computers be servants who are told “go do that” rather than tools we must master in order to do ourselves what we previously asked others to do for us? Recalling a word many have forgotten, aren't we really in the automation industry, not the tool trade?

Let's consider a very different kind of user interface. I will take the business letter as an example. Suppose you get a letter from an irate customer who didn't get his product on time. All you can do is apologise and explain how you'll try to do better in the future. You punch “answer that”, and the letter goes away. The system reads it, selects a reply, and maybe asks you a couple of questions (queuing them, not interrupting your current task), and eventually puts a draft reply in your in basket. You mark it up, making some marginal notes, and maybe inserting a personalised paragraph in the middle and send it away again. It comes back, with your spelling and grammar corrected, beautifully formatted in conformance with the corporate style sheet. You say “send it”, and it's all taken care of in accordance with the standard procedures.

Or, today, you pound out a 20 page paper with hand written tables referenced by the text and mail it to IEEE Transactions on Computers. Your typescript may be full of erasures and arrows moving words around. A few months later you get back the galleys, perfectly formatted to fit in the journal, with all of the tables set up and placed in the proper locations in the text.

Before you say, “that's very nice, but we don't know how to do that”, pause to consider: Isn't this what people really want? If you had a system that did this, that could go off and do the shitwork, wouldn't you prefer it to all the menus and WYSIWYG gewgaws in the world? Also, remember that the computer would not be perfect at its job. Neither are people: that's why they send you the galleys. But isn't it better to mark up the galleys than to have to typeset the whole works yourself?

Building systems that can go off and do useful things for people is going to be very, very difficult. But so is designing the highly interactive user interfaces we're all working on. Both dwarf the effort involved in writing an old-style program where you just solved the problem and left the user to fend for himself. If you're going to succeed, you not only have to solve the problem well, you have to solve the right problem. Maybe improving direct user interaction is solving the wrong problem.

Can we build systems that do what people want done, rather than do it yourself tools?

There are some indications we're getting there. Autodesk has a product in its stable which may be the prototype of the 1990's application. I'm talking about CAD/camera. You take a picture and say “go make that into a CAD database”. It goes and gives it its best shot, and you get to look at the result and change what you don't like about it, and teach it how to do its job better. Notice that of all of our products, this is the one people take least seriously because nobody is really confident that a computer can do what it sets out to do. Yet of all our products, it is the one most assured of success if it actually does a good enough job. This dichotomy characterises subservient products…look for it.

There is a database system on the market called Q&A from Symantec which lets you enter queries such as:

How many people in sales make more than $50000 in salary and commissions?

then:

Which of them live in Massachusetts?

This really works. I have a demo copy. Try it.

I am not sure that anybody will ever actually build an “expert system”, but much of the work being done in rule-based systems is directly applicable to building the types of products I'm talking about here.

Although I have only recently pulled all of these threads together, I've been flogging products like the ones I'm pushing now for a long time (so I have a bias in their direction). NDOC is the second word processor I've built which attempts to do reasonable things with very little user direction. The first one obliterated its competition, even though it provided the user much less direct control over the result. In the world at large, the battle between TeX and SCRIBE is being fought on the same ground.

When you encounter a subservient system, it tends to feel somewhat different from normal computer interaction. I can't exactly describe it, but try the “Clean up” operation on the Macintosh or the FORMAT command in Kern's editor and see if you don't understand what I'm saying.

The “computer revolution” has slowed unexpectedly. This is due to lack of user acceptance of the current products, not due to hardware factors or cost.

A large part of the current computer culture evolved from the do it yourself movement. This led to the development of computer products which require direct control and skill to operate. The computer industry is no longer involved in “automation”.

The society as a whole has moved away from the self-reliant, do-it-yourself ethic toward a service economy.

Until products which can perform useful tasks without constant user control are produced, the market for computers will be limited to the do it yourself sector: comparable to the market for circular saws.

Building such products is very difficult and one cannot be assured of success when undertaking such a project. However, it may be no more difficult to create such products than to define successful dynamic user interfaces.

Customers want to buy “robot slaves”, not power tools.

The market for such products is immense.

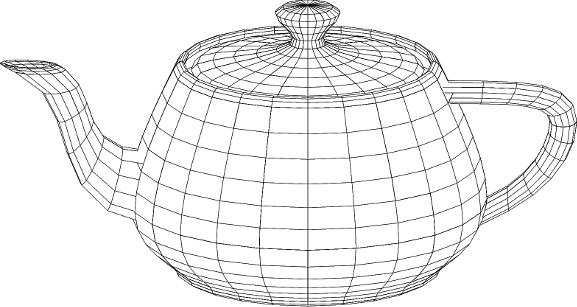

The Teapot is one of the classics of computer graphics. The teapot was originally hand-digitised as Bézier curves by Martin Newell, then a Ph.D. candidate at the University of Utah, in 1975. The control points for the Bézier surface patches were based on a sketch of an actual teapot on his desk. The original teapot now resides in the Computer Museum in Boston.

Newell's teapot became famous through his work and that of Jim Blinn. The teapot has become one of the standard test cases for any rendering algorithm. It includes compound curves, both positively and negatively curved surfaces, and intersections, so it traps many common programming errors.

For the full history of the Teapot, please refer to IEEE Computer Graphics and Applications, Volume 7, Number 1, January 1987, Page 8, for Frank Crow's delightful article chronicling its history.

I typed in the control point data from that article and wrote a C program to generate an AutoCAD script that draws the teapot. The teapot was one of the first realistic objects modeled with the three dimensional polygons introduced in AutoCAD 2.6, and has served as an AutoCAD and AutoShade test case ever since.
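For readers curious what such a generator might look like, here is a minimal sketch in C. It is not the original program: the names `Patch`, `patch_point`, and `write_patch_script` are made up for illustration, no actual teapot control points are included, and the exact `3DFACE` script syntax (four corner points followed by a blank line to end the command) is an assumption about how an AutoCAD script would be laid out. It evaluates one bicubic Bézier patch on a regular grid and writes one four-sided face per grid cell.

```c
#include <assert.h>
#include <stdio.h>

/* A point in 3D space. */
typedef struct { double x, y, z; } Point3;

/* One bicubic Bezier patch: a 4x4 net of control points (hypothetical type). */
typedef struct { Point3 c[4][4]; } Patch;

/* Cubic Bernstein basis weights for parameter t in [0, 1]. */
void bernstein3(double t, double w[4])
{
    double s = 1.0 - t;
    w[0] = s * s * s;
    w[1] = 3.0 * s * s * t;
    w[2] = 3.0 * s * t * t;
    w[3] = t * t * t;
}

/* Evaluate the patch surface at parameters (u, v), each in [0, 1]. */
Point3 patch_point(const Patch *p, double u, double v)
{
    double bu[4], bv[4];
    Point3 r = {0.0, 0.0, 0.0};
    int i, j;

    bernstein3(u, bu);
    bernstein3(v, bv);
    for (i = 0; i < 4; i++) {
        for (j = 0; j < 4; j++) {
            double w = bu[i] * bv[j];
            r.x += w * p->c[i][j].x;
            r.y += w * p->c[i][j].y;
            r.z += w * p->c[i][j].z;
        }
    }
    return r;
}

/* Write one 3DFACE per grid cell (assumed script layout).
   Returns the number of faces written, which is steps * steps. */
int write_patch_script(FILE *out, const Patch *p, int steps)
{
    int i, j, faces = 0;

    assert(steps > 0);
    for (i = 0; i < steps; i++) {
        for (j = 0; j < steps; j++) {
            double u0 = (double)i / steps, u1 = (double)(i + 1) / steps;
            double v0 = (double)j / steps, v1 = (double)(j + 1) / steps;
            /* Corners listed in order around the cell's perimeter. */
            Point3 a = patch_point(p, u0, v0);
            Point3 b = patch_point(p, u1, v0);
            Point3 c = patch_point(p, u1, v1);
            Point3 d = patch_point(p, u0, v1);

            fprintf(out, "3DFACE %g,%g,%g %g,%g,%g %g,%g,%g %g,%g,%g\n\n",
                    a.x, a.y, a.z, b.x, b.y, b.z,
                    c.x, c.y, c.z, d.x, d.y, d.z);
            faces++;
        }
    }
    return faces;
}
```

Calling `write_patch_script(stdout, &patch, 4)` for each patch of a model would emit 16 faces per patch. A finer grid gives a smoother approximation at the cost of a larger drawing.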
