| 2014 |

January 2014

- Faulks, Sebastian. Jeeves and the Wedding Bells. London: Hutchinson, 2013. ISBN 978-0-09-195404-8.

- As a fan of P. G. Wodehouse ever since I started reading his work in the 1970s, and having read every single Jeeves and Wooster story, I picked up this novel with some trepidation: it is the first Jeeves and Wooster story since Aunts Aren't Gentlemen, published in 1974, a year before Wodehouse's death. This book, published with the permission of the Wodehouse estate, is described by the author as a tribute to P. G. Wodehouse which he hopes will encourage readers to discover the work of the master. The author notes that, while remaining true to the characters of Jeeves and Wooster and the ambience of the stories, he did not attempt to mimic Wodehouse's style. Notwithstanding, to this reader, the result is so close to that of Wodehouse that if you dropped it into a Wodehouse collection unlabelled, I suspect few readers would find anything discordant. Faulks's Jeeves seems to use more jaw-breaking words than I recall Wodehouse's using, but that's about it. Apart from Jeeves and Wooster, none of the regular characters who populate Wodehouse's stories appear on stage here. We hear of members of the Drones, the terrifying Aunt Agatha, and others, along with mentions of previous episodes involving them, but all of the other dramatis personæ are new. On holiday in the south of France, Bertie Wooster makes the acquaintance of Georgiana Meadowes, a copy editor for a London publisher who has escaped the metropolis to finish marking up a manuscript. Bertie is immediately smitten, being impressed by Georgiana's beauty, brains, and wit, albeit less so by her driving (“To say she drove in the French fashion would be to cast a slur on those fine people.”). Upon his return to London, Bertie soon reads that Georgiana has become engaged to a travel writer whom, she had mentioned, her family was urging her to marry. Meanwhile, one of Bertie's best friends, “Woody” Beeching, confides his own problem with the fairer sex. 
His fiancée has broken off the engagement because her parents, the Hackwoods, need their daughter to marry into wealth to save the family seat, which is at risk of being sold. Before long, Bertie discovers that the matrimonial plans of Georgiana and Woody are linked in a subtle but inflexible way, and that a delicate hand, acting with nuance, will be needed to ensure all ends well. Evidently, a job crying out for the attention of Bertram Wilberforce Wooster! Into the fray Jeeves and Wooster go, and before long a quintessentially Wodehousean series of impostures, misadventures, misdirections, eccentric characters, disasters at the dinner table, and carefully crafted stratagems gone horribly awry ensues. If you are not acquainted with that game which the English, not being a very spiritual people, invented to give them some idea of eternity (G. B. Shaw), you may want to review the rules before reading chapter 7. Doubtless some Wodehouse fans will consider any author's bringing Jeeves and Wooster back to life a sacrilege, but this fan simply relished the opportunity to meet them again in a new adventure which is entirely consistent with the Wodehouse canon and characters. I would have been dismayed had this been a parody or some “transgressive” despoliation of the innocent world these characters inhabit. Instead we have a thoroughly enjoyable romp in which the prodigious brain of Jeeves once again saves the day. The U.K. edition is linked above. U.S. and worldwide Kindle editions are available.

- Pooley, Charles and Ed LeBouthillier. Microlaunchers. Seattle: CreateSpace, 2013. ISBN 978-1-4912-8111-6.

- Many fields of engineering are subject to scaling laws: as you make something bigger or smaller various trade-offs occur, and the properties of materials, cost, or other design constraints set limits on the largest and smallest practical designs. Rockets for launching payloads into Earth orbit and beyond tend to scale well as you increase their size. Because of the cube-square law, the volume of propellant a tank holds increases as the cube of the size while the weight of the tank goes as the square (actually a bit faster since a larger tank will require more robust walls, but for a rough approximation calling it the square will do). Viable rockets can get very big indeed: the Sea Dragon, although never built, is considered a workable design. With a length of 150 metres and 23 metres in diameter, it would have more than ten times the first stage thrust of a Saturn V and place 550 metric tons into low Earth orbit. What about the other end of the scale? How small could a space launcher be, what technologies might be used in it, and what would it cost? Would it be possible to scale a launcher down so that small groups of individuals, from hobbyists to college class projects, could launch their own spacecraft? These are the questions explored in this fascinating and technically thorough book. Little practical work has been done to explore these questions. The smallest launcher to place a satellite in orbit was the Japanese Lambda 4S with a mass of 9400 kg and length of 16.5 metres. The U.S. Vanguard rocket had a mass of 10,050 kg and length of 23 metres. These are, though small compared to the workhorse launchers of today, still big, heavy machines, far beyond the capabilities of small groups of people, and sufficiently dangerous if something goes wrong that they require launch sites in unpopulated regions. The scale of launchers has traditionally been driven by the mass of the payload they carry to space. 
Early launchers carried satellites with crude 1950s electronics, while many of their successors were derived from ballistic missiles sized to deliver heavy nuclear warheads. But today, CubeSats have demonstrated that useful work can be done by spacecraft with a volume of one litre and mass of 1.33 kg or less, and the PhoneSat project holds out the hope of functional spacecraft comparable in weight to a mobile telephone. While to date these small satellites have flown as piggy-back payloads on other launches, the availability of dedicated launchers sized for them would increase the number of launch opportunities and provide access to trajectories unavailable to piggy-back payloads. Just because launchers have tended to grow over time doesn't mean that's the only way to go. In the 1950s and '60s many people expected computers to continue their trend of getting bigger and bigger to the point where there would be a limited number of “computer utilities” with vast machines which customers accessed over the telecommunication network. But then came the minicomputer and microcomputer revolutions, and today the computing power in personal computers and mobile devices dwarfs that of all supercomputers combined. What would it take technologically to spark a similar revolution in space launchers? With the smallest successful launchers to date having a mass of around 10 tonnes, the authors choose two mass budgets: 1000 kg on the high end and 100 kg on the low. They divide these budgets into allocations for payload, tankage, engines, fuel, etc. based upon the experience of existing sounding rockets, then explore what technologies exist which might enable such a vehicle to achieve orbital or escape velocity. The 100 kg launcher is a huge technological leap from anything with which we have experience and probably could be built, if at all, only after having gained experience from earlier generations of light launchers. 
But then the current state of the art in microchip fabrication would have seemed like science fiction to researchers in the early days of integrated circuits, and it took decades of experience, generation after generation of chips, and many technological innovations to arrive where we are today. Consequently, most of the book focuses on a three-stage launcher with the 1000 kg mass budget, capable of placing a payload of between 150 and 200 grams on an Earth escape trajectory. The book does not spare the rigour. The reader is introduced to the rocket equation, formulæ for aerodynamic drag, the standard atmosphere, optimisation of mixture ratios, combustion chamber pressure and size, nozzle expansion ratios, and a multitude of other details which make the difference between success and failure. Scaling to the size envisioned here without expensive and exotic materials and technologies requires out-of-the-box thinking, and there is plenty on display here, including using beverage cans for upper-stage propellant tanks. A 1000 kg space launcher appears to be entirely feasible. The question is whether it can be done without the budget of hundreds of millions of dollars and years of development it would certainly take were the problem assigned to an aerospace prime contractor. The authors hold out the hope that it can be done, and observe that hobbyists and small groups can begin working independently on components: engines, tank systems, guidance and navigation, and so on, and then share their work precisely as open source software developers do so successfully today. This is a field where prizes may work very well to encourage development of the required technologies. A philanthropist might offer, say, a prize of a million dollars for launching a 150 gram communicating payload onto an Earth escape trajectory, and a series of smaller prizes for engines which met the requirements for the various stages, flight-weight tankage and stage structures, etc. 
That way teams with expertise in various areas could work toward the individual prizes without having to take on the all-up integration required for the complete vehicle. This is a well-researched and hopeful look at a technological direction few have thought about. The book is well written and includes all of the equations and data an aspiring rocket engineer will need to get started. The text is marred by a number of typographical errors (I counted two dozen) but only one trivial factual error. Although other references are mentioned in the text, a bibliography of works for those interested in exploring further would be a valuable addition. There is no index.
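The cube-square argument in the Microlaunchers review, and the rocket equation the book introduces, can be tied together in a toy calculation. This is a minimal sketch under loudly stated assumptions, not the book's model: propellant mass is taken to grow as the cube of a tank's linear size and tank mass as its square (the rough approximation the review uses), engines, avionics, and payload are ignored, and the wet-to-dry mass ratio is fed into the ideal Tsiolkovsky equation, Δv = I_sp g₀ ln(m₀/m_f). The constants `K_PROP` and `K_WALL` and the 300 s specific impulse are hypothetical illustration values.

```python
import math
from typing import Tuple

G0 = 9.80665  # standard gravity, m/s^2

# Hypothetical proportionality constants, chosen only for illustration:
# propellant mass = K_PROP * size**3, tank mass = K_WALL * size**2.
K_PROP = 1.0
K_WALL = 0.05


def tank_masses(size: float) -> Tuple[float, float]:
    """Return (propellant_mass, tank_mass) for a tank of linear size `size`.

    Propellant scales with volume (cube), tank walls with surface area
    (square), per the rough approximation described in the review.
    """
    return K_PROP * size ** 3, K_WALL * size ** 2


def ideal_delta_v(size: float, isp_s: float = 300.0) -> float:
    """Ideal delta-v in m/s from the Tsiolkovsky rocket equation for a
    toy stage consisting of nothing but propellant and tank."""
    propellant, tank = tank_masses(size)
    wet = propellant + tank  # mass at ignition
    dry = tank               # mass at burnout
    return isp_s * G0 * math.log(wet / dry)


if __name__ == "__main__":
    for size in (0.1, 0.5, 1.0, 2.0):
        propellant, tank = tank_masses(size)
        print(f"size {size:4}: propellant/tank = {propellant / tank:5.1f}, "
              f"ideal delta-v = {ideal_delta_v(size):7.0f} m/s")
```

In this toy model the propellant-to-tank ratio is simply 20 × size: at size 1.0 it is 20 and the ideal Δv is roughly 9 km/s, while at size 0.1 it falls to 2 and Δv to roughly 3.2 km/s. Real stages carry engines, structure, and payload, and larger tanks need thicker walls, so the numbers illustrate only the trend, not realistic performance.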

- Crocker, George N. Roosevelt's Road To Russia. Whitefish, MT: Kessinger Publishing, [1959] 2010. ISBN 978-1-163-82408-5.

- Before Barack Obama, there was Franklin D. Roosevelt. Unless you lived through the era, imbibed its history from parents or grandparents, or have read dissenting works which have survived rounds of deaccessions by libraries, it is hard to grasp just how visceral the animus against Roosevelt was among traditional, constitutional, and free-market conservatives. Roosevelt seized control of the economy, extended the tentacles of the state into all kinds of relations between individuals, subdued the judiciary and bent it to his will, manipulated a largely supine media which, with a few exceptions, became his cheering section, and created programs which made large sectors of the population directly dependent upon the federal government and thus a reliable constituency for expanding its power. He had the audacity to stand for re-election an unprecedented three times, and each time the American people gave him the nod. But, as many old-timers, even those who were opponents of Roosevelt at the time and appalled by what the centralised super-state he set into motion has become, grudgingly say, “He won the war.” Well, yes, by the time he died in office on April 12, 1945, Germany was close to defeat; Japan was encircled, cut off from the resources needed to continue the war, and being devastated by attacks from the air; the war was sure to be won by the Allies. But how did the U.S. find itself in the war in the first place, how did Roosevelt's policies during the war affect its conduct, and what consequences did they have for the post-war world? These are the questions explored in this book, which I suppose contemporary readers would term a “paleoconservative” revisionist account of the epoch, published just 14 years after the end of the war. The work is mainly an account of Roosevelt's personal diplomacy during meetings with Churchill or in the Big Three conferences with Churchill and Stalin. 
The picture of Roosevelt which emerges is remarkably consistent with what Churchill expressed in deepest confidence to those closest to him, which I summarised in my review of The Last Lion, Vol. 3 (January 2013) as “a lightweight, ill-informed and not particularly engaged in military affairs and blind to the geopolitical consequences of the Red Army's occupying eastern and central Europe at war's end.” The events chronicled here and Roosevelt's part in them are also very much the same as those described in Freedom Betrayed (June 2012), which former president Herbert Hoover worked on from shortly after Pearl Harbor until his death in 1964, but which was not published until 2011. While Churchill was constrained in what he could say by the necessity of maintaining Britain's alliance with the U.S., and Hoover adopts a more scholarly tone, the present volume voices the outrage over Roosevelt's strutting on the international stage, thinking “personal diplomacy” could “bring around ‘Uncle Joe’ ”, condemning huge numbers of military personnel and civilians on both the Allied and Axis sides to death by blurting out “unconditional surrender” without any consultation with his staff or Allies, approving the genocidal Morgenthau Plan to de-industrialise defeated Germany, and, discarding the high principles of his own Atlantic Charter, delivering millions of Europeans into communist tyranny and condoning one of the largest episodes of ethnic cleansing in human history. What is remarkable is how difficult it is to come across an account of this period which conveys the author's passion, shared with many of his time, about how the bumblings of a naïve, incompetent, and narcissistic chief executive had led directly to so much avoidable tragedy on a global scale. 
Apart from Hoover's book, finally published more than half a century after this account, there are few works accessible to the general reader which present the view that the tragic outcome of World War II was largely preventable, and that Roosevelt and his advisers bore much of the responsibility for what happened. Perhaps this account of wickedness triumphing through cluelessness holds parallels for our present era. This edition is a facsimile reprint of the original edition published by Henry Regnery Company in 1959.

- Turk, James and John Rubino. The Money Bubble. Unknown: DollarCollapse Press, 2013. ISBN 978-1-62217-034-0.

-

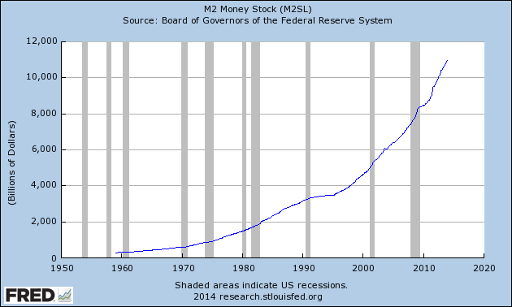

It is famously difficult to perceive when you're living through a financial bubble. Whenever a bubble is expanding, regardless of its nature, people with a short time horizon, particularly those riding the bubble without experience of previous boom/bust cycles, not only assume it will continue to expand forever but also find no shortage of financial gurus to assure them that what, to an outsider, appears a completely unsustainable aberration is, in fact, “the new normal”.

It used to be that bubbles would occur only around once in a human generation. This meant that those caught up in them would be experiencing one for the first time and discount the warnings of geezers who were fleeced the last time around. But in our happening world the pace of things accelerates, and in the last 20 years we have seen three successive bubbles, each segueing directly into the next:

- The Internet/NASDAQ bubble

- The real estate bubble

- The bond market bubble

February 2014

- Simberg, Rand. Safe Is Not an Option. Jackson, WY: Interglobal Media, 2013. ISBN 978-0-9891355-1-1.

- On August 24th, 2011 the third stage of the Soyuz-U rocket carrying the Progress M-12M cargo craft to the International Space Station (ISS) failed during its burn, causing the craft and booster to fall to Earth in Russia. While the crew of six on board the ISS had no urgent need of the supplies on board the Progress, the booster which had failed to launch it was essentially identical to that which launched crews to the station in Soyuz spacecraft. Until the cause of the failure was determined and corrected, the launch of the next crew of three, planned for a few weeks later, would have to be delayed. With the Space Shuttle having been retired after its last mission in July 2011, the Soyuz was the only way for crews to reach or return from the ISS. Difficult decisions had to be made, since Soyuz spacecraft in orbit are wasting assets. The Soyuz has a guaranteed life on orbit of seven months. Regular crew rotations ensure the returning crew does not exceed this “use before” date. But with the launch of new Soyuz missions delayed, it was possible that three crew members would have to return in October before their replacements could arrive in a new Soyuz, and that the remaining three would be forced to leave as well before their craft expired in January. An extended delay while the Soyuz booster problem was resolved would force ISS managers to choose between leaving a skeleton crew of three on board without a lifeboat known to be safe or abandoning the ISS, running the risk that the station, which requires extensive ongoing maintenance by the crew and represented a total investment through 2010 estimated at US$150 billion, might be lost. This was seriously considered. Just how crazy are these people? The Amundsen-Scott Station at the South Pole has an over-winter crew of around 45 people, and there is no lifeboat attached which will enable them to be evacuated in case of disaster. 
In case of fire (considered the greatest risk), the likelihood of mounting rescue missions for the entire crew in mid-winter is remote. And yet the station continues to operate, people volunteer to over-winter there, and nobody thinks too much about the risk they take. What is going on here? It appears that due to a combination of the Cold War elevation of astronauts to symbolic figures and the national trauma of disasters such as Apollo 1, Challenger, and Columbia, we have come to view these civil servants as “national treasures” (Jerry Pournelle's words from 1992) and not volunteers who do a risky job on a par with test pilots, naval aviators, firemen, and loggers. This, in turn, leads to statements, oft repeated, that “safety is our highest priority”. Well, if that is the case, why fly? Certainly we would lose fewer astronauts if we confined their activities to “public outreach” as opposed to the more dangerous activities in which less exalted personnel engage, such as night aircraft carrier landings on pitching decks, done simply to maintain proficiency. The author argues that we are unwilling to risk the lives of astronauts because what they are doing, post-Apollo, is not perceived as important, and it is hard to dispute that assertion. Going around and around in low Earth orbit and constructing a space station whose crew spend most of their time simply keeping it working are hardly inspiring endeavours. We have lost four decades in which the human presence could have expanded into the solar system, provided cheap and abundant solar power from space to the Earth, and made our species multi-planetary. Because these priorities were not deemed important, the government space program's mission became creating jobs in the districts of those politicians who funded it, and it achieved that. 
After reviewing the cost in human life of the development of various means of transportation and of the exploration of our planet, the author argues that we need to be realistic about the risks assumed by those who undertake the task of moving our species off-planet and acknowledge that some of them will not come back, as has been the case in every expansion of the biosphere since the first creature ventured for a brief mission from its home in the sea onto the hostile land. This is not to say that we should design our vehicles and missions to kill their passengers: as we move increasingly from coercively funded government programs to commercial ventures, the maxim (too obvious to figure in the Ferengi Rules of Acquisition) “Killing customers is bad for business” applies with growing force. Our focus on “safety first” can lead to perverse choices. Suppose we have a launch system which we estimate will fail in a way that kills its crew in one launch out of a thousand. We equip it with a launch escape system which we estimate will save the crew in 90% of those failures. So, have we reduced the probability of a loss of crew accident to one in ten thousand? Well, not so fast. What about the possibility that the crew escape mechanism will malfunction and kill the crew on a mission which would have been successful had it not been present? What if solid rockets in the crew escape system accidentally fire in the vehicle assembly building, killing dozens of workers and destroying costly and difficult to replace infrastructure? Doing a total risk assessment of such matters is difficult, and one gets the sense that little of it is being, or will be, done while “safety is our highest priority” remains the mantra. 
There is a survey of current NASA projects, including the grotesque “Space Launch System”, a jobs program targeted to the constituencies of the politicians who mandated it, which has no identified payloads and will be so expensive that it can fly only infrequently: the standing army required to maintain it will have little to do between its flights every few years and will lose the skills required to operate it safely. Commercial space ventures are surveyed, with a candid analysis of their risks and the case for why the heavy hand of government should let those willing to accept such risks assume them, while protecting the general public from damages from accidents. The book is superbly produced, with only one typographic error I noted (one “augers” into the ground, not “augurs”) and one awkward wording about the risks of a commercial space vehicle which will be corrected in subsequent editions. There is a list of acronyms and a comprehensive index. Disclosure: I contributed to the Kickstarter project which funded the publication of this book, and I received a signed copy of it as a reward. I have no financial interest in sales of this book.
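The launch escape arithmetic in the Simberg review can be checked in a few lines. This is a minimal sketch of a simple risk model, not the book's method: it assumes the escape system can kill a crew only on flights where the booster would otherwise have succeeded (the hypothetical parameter `p_escape_kills`), and it ignores ground accidents such as an inadvertent firing in the vehicle assembly building.

```python
def loss_of_crew(p_fail: float, p_escape_saves: float,
                 p_escape_kills: float = 0.0) -> float:
    """Probability that a single flight kills its crew.

    p_fail:         probability the launcher fails in a crew-killing way
    p_escape_saves: fraction of those failures in which escape saves the crew
    p_escape_kills: hypothetical probability that the escape system itself
                    kills the crew on a flight that would otherwise succeed
    """
    booster_losses = p_fail * (1.0 - p_escape_saves)
    escape_losses = (1.0 - p_fail) * p_escape_kills
    return booster_losses + escape_losses


def break_even_escape_risk(p_fail: float, p_escape_saves: float) -> float:
    """Escape-system-induced risk at which the crews the escape system
    saves exactly equal the crews it kills."""
    return p_fail * p_escape_saves / (1.0 - p_fail)


if __name__ == "__main__":
    print(loss_of_crew(1e-3, 0.0))        # no escape system: 1 in 1000
    print(loss_of_crew(1e-3, 0.9))        # naive estimate: about 1 in 10,000
    print(loss_of_crew(1e-3, 0.9, 1e-4))  # escape system with its own risk
    print(break_even_escape_risk(1e-3, 0.9))
```

With these illustrative numbers, an escape system that itself kills the crew on one otherwise-successful flight in ten thousand roughly doubles the loss-of-crew probability relative to the naive estimate (to about 2 in 10,000). It still beats having no escape system, and would become a net liability only if its own risk exceeded about 9 in 10,000.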

- Cawdron, Peter. Feedback. Los Gatos, CA: Smashwords, 2014. ISBN 978-1-4954-9195-5.

- The author has established himself as the contemporary grandmaster of first contact science fiction. His earlier Anomaly (December 2011), Xenophobia (August 2013), and Little Green Men (September 2013) all envisioned very different scenarios for a first encounter between humans and intelligent extraterrestrial life, and the present novel is as different from those which preceded it as they are from each other, and equally rewarding to the reader. South Korean Coast Guard helicopter pilot John Lee is flying covertly to insert a U.S. Navy SEAL team off the coast of North Korea for a rescue operation when his helicopter is shot down by a North Korean fighter. He barely escapes with his life when the chopper ditches in the ocean, makes it to land, and realises he is alone in North Korea without any way to get home. He is eventually captured and taken to a military camp where he is tortured to reveal information about a rumoured UFO crash off the coast of Korea, about which he knows nothing. He meets an enigmatic English-speaking boy whom some call the star-child. Twenty years later, in New York City, physics student Jason Noh encounters a mysterious young Korean woman who claims to have just arrived in the U.S. and is waiting for her father. Jason, given to doodling arcane equations as his mind runs free, befriends her and soon finds himself involved in a surrealistic sequence of events which causes him to question everything he has come to believe about the world and his place in it. This is an enthralling story which will have you scratching your head at every twist and turn wondering where it's going and how all of this is eventually going to make sense. It does, with a thoroughly satisfying resolution. Regrettably, if I say anything more about where the story goes, I'll risk spoiling it by giving away one or more of the plot elements which the reader discovers as the narrative progresses. 
I was delighted to see an idea about the nature of flying saucers I first wrote about in 1997 appear here, but please don't follow that link until you've read the book as it too would spoil a revelation which doesn't emerge until well into the story. A Kindle edition is available. I read a pre-publication manuscript edition which the author kindly shared with me.

- Kurzweil, Ray. How to Create a Mind. New York: Penguin Books, 2012. ISBN 978-0-14-312404-7.

- We have heard so much about the exponential growth of computing power available at constant cost that we sometimes overlook the fact that this is just one of a number of exponentially compounding technologies which are changing our world at an ever-accelerating pace. Many of these technologies are interrelated: for example, the availability of very fast computers and large storage has helped turn biology and medicine increasingly into information sciences in the era of genomics and proteomics; since the completion of the Human Genome Project, the cost of sequencing a human genome has fallen faster than computing power has grown. Among these seemingly inexorably rising curves have been the spatial and temporal resolution of the tools we use to image and understand the structure of the brain. So rapid has been the progress that most of the detailed understanding of the brain dates from the last decade, and new discoveries are arriving at such a rate that the author had to make substantial revisions to the manuscript of this book upon several occasions after it was already submitted for publication. The focus here is primarily upon the neocortex, a part of the brain which exists only in mammals and is identified with “higher level thinking”: learning from experience, logic, planning, and, in humans, language and abstract reasoning. The older brain, which mammals share with other species, is discussed in chapter 5, but in mammals it is difficult to separate entirely from the neocortex, because the latter has “infiltrated” the old brain, wiring itself into its sensory and action components, allowing the neocortex to process information and override responses which are automatic in creatures such as reptiles. 
Not long ago, it was thought that the brain was a soup of neurons connected in an intricately tangled manner, whose function could not be understood without comprehending the quadrillion connections in the neocortex alone, each with its own weight to promote or inhibit the firing of a neuron. Now, however, it appears, based upon improved technology for observing the structure and operation of the brain, that the fundamental unit in the brain is not the neuron, but a module of around 100 neurons which acts as a pattern recogniser. The internal structure of these modules seems to be wired according to instructions in the genome, but the weights of the interconnections within the module are adjusted as the module is trained on the inputs presented to it. The individual pattern recognition modules are wired both to pass information on matches up to higher level modules and to send predictions back down to lower level recognisers. For example, if you've seen the letters “appl” and the next and final letter of the word is a smudge, you'll have no trouble figuring out what the word is. (I'm not suggesting the brain works literally like this, just using this as an example to illustrate hierarchical pattern recognition.) Another important discovery is that the architecture of these pattern recogniser modules is pretty much the same regardless of where they appear in the neocortex, or what function they perform. In a normal brain, there are distinct portions of the neocortex associated with functions such as speech, vision, complex motion sequencing, etc., and yet the physical structure of these regions is nearly identical: only the weights of the connections within the modules and the dynamically adapted wiring among them differ. This explains how patients recovering from brain damage can re-purpose one part of the neocortex to take over (within limits) for the portion lost. 
Further, the neocortex is not the rat's nest of random connections we recently thought it to be, but is instead hierarchically structured with a topologically three-dimensional “bus” of pre-wired interconnections which can be used to make long-distance links between regions. Now, where this begins to get very interesting is when we contemplate building machines with the capabilities of the human brain. While emulating something at the level of neurons might seem impossibly daunting, if you instead assume the neocortex is built from on the order of 300 million more or less identical pattern recognisers wired together at a high level in a regular hierarchical manner, this is something we might be able to think about doing, especially since the brain works almost entirely in parallel, and one thing we've gotten really good at in the last half century is making lots and lots of tiny identical things. The implication of this is that as we continue to delve deeper into the structure of the brain and computing power continues to grow exponentially, there will come a point in the foreseeable future where emulating an entire human neocortex becomes feasible. This will permit building a machine with human-level intelligence without translating the mechanisms of the brain into those comparable to conventional computer programming. The author predicts “this will first take place in 2029 and become routine in the 2030s.” Assuming the present exponential growth curves continue (and I see no technological reason to believe they will not), the 2020s are going to be a very interesting decade. Just as few people imagined five years ago that self-driving cars were possible, while today most major auto manufacturers have projects underway to bring them to market in the near future, in the 2020s we will see the emergence of computational power which is sufficient to “brute force” many problems which were previously considered intractable. 
Just as search engines and free encyclopedias have augmented our biological minds, allowing us to answer questions which, a decade ago, would have taken days in the library if we even bothered at all, the 300 million pattern recognisers in our biological brains are on the threshold of having access to billions more in the cloud, trained by interactions with billions of humans and, perhaps eventually, many more artificial intelligences. I am not talking here about implanting direct data links into the brain or uploading human brains to other computational substrates although both of these may happen in time. Instead, imagine just being able to ask a question in natural language and get an answer to it based upon a deep understanding of all of human knowledge. If you think this is crazy, reflect upon how exponential growth works or imagine travelling back in time and giving a demo of Google or Wolfram Alpha to yourself in 1990. Ray Kurzweil, after pioneering inventions in music synthesis, optical character recognition, text to speech conversion, and speech recognition, is now a director of engineering at Google. In the Kindle edition, the index cites page numbers in the print edition to which the reader can turn since the electronic edition includes real page numbers. Index items are not, however, directly linked to the text cited.
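The “appl” example in the Kurzweil review can be illustrated with a toy program. This is a hypothetical sketch, not Kurzweil's model and not a claim about how neurons work: a word-level recogniser keeps only the words consistent with what the letter-level recognisers reported, treats a smudged letter (`None`) as missing evidence, and, when exactly one word fits, sends a prediction for the smudged letter back down. The four-word `VOCABULARY` is invented for the example.

```python
from typing import Dict, Optional, Sequence, Tuple

# Hypothetical lexicon known to the word-level recogniser.
VOCABULARY = ("apple", "ample", "maple", "angle")


def recognise_word(
    letters: Sequence[Optional[str]],
    vocabulary: Sequence[str] = VOCABULARY,
) -> Tuple[Optional[str], Dict[int, str]]:
    """Bottom-up: keep words consistent with every letter actually seen.
    Top-down: if exactly one word survives, predict the unseen letters.

    `letters` holds one entry per position; None marks a smudged letter
    for which the lower-level recogniser reported no match.
    """
    candidates = [
        word for word in vocabulary
        if len(word) == len(letters)
        and all(seen is None or seen == ch for seen, ch in zip(letters, word))
    ]
    if len(candidates) != 1:
        # No match, or ambiguous: higher-level context would be needed.
        return None, {}
    word = candidates[0]
    predictions = {i: word[i] for i, seen in enumerate(letters) if seen is None}
    return word, predictions


if __name__ == "__main__":
    smudged = ["a", "p", "p", "l", None]
    print(recognise_word(smudged))                           # ('apple', {4: 'e'})
    print(recognise_word(smudged, VOCABULARY + ("apply",)))  # (None, {})
```

Adding “apply” to the vocabulary makes the same smudged input ambiguous, which is where still higher levels (the sentence, the surrounding context) would have to supply the prediction.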

- Bracken, Matthew. Castigo Cay. Orange Park, FL: Steelcutter Publishing, 2011. ISBN 978-0-9728310-4-8.

- Dan Kilmer wasn't cut out to be a college man. Disappointing his father, after high school he enlisted in the Marine Corps, becoming a sniper who, in multiple tours in the sandbox, had sent numerous murderous miscreants to their reward. Upon leaving the service, he found that the skills he had acquired had little value in the civilian world. After a disastrous semester trying to adjust to college life, he went to work for his rich uncle, who had retired and was refurbishing a sixty-foot steel-hulled schooner with a dream of cruising the world and escaping the deteriorating economy and increasing tyranny of the United States. Fate intervened, and after his uncle's death Dan found himself owner and skipper of the now seaworthy craft. Some time later, Kilmer is cruising the Caribbean with his Venezuelan girlfriend Cori Vargas and crew members Tran Hung and Victor Aleman. The schooner Rebel Yell is hauled out for scraping off barnacles while waiting for a treasure-hunting gig which Kilmer fears may not come off, leaving him desperately short of funds. Cori is eager to get to Miami, where she believes she can turn her looks and charm into a broadcast career. Impatient, she jumps ship and departs on the mega-yacht Topaz, owned by shadowy green energy crony capitalist Richard Prechter. After her departure, another yatero informs Dan that Prechter has a dark reputation and that there are rumours of other women who boarded his yacht disappearing under suspicious circumstances. Kilmer had made a solemn promise to Cori's father that he would protect her, and he takes his promises very seriously, so he undertakes to track Prechter to a decadent and totalitarian Florida, and then pursue him to Castigo Cay in the Bahamas where a horrible fate awaits Cori. Kilmer, captured in a desperate rescue attempt, has little other than his wits to confront Prechter and his armed crew as time runs out for Cori and another woman abducted by Prechter. 
While set in a future in which the United States has continued to spiral down into a third world stratified authoritarian state, this is not a “big picture” tale like the author's Enemies trilogy (1, 2, 3). Instead, it is a story related in close-up, told in the first person, by an honourable and resourceful protagonist with few material resources pitted against the kind of depraved sociopath who flourishes as states devolve into looting and enslavement of their people. This is a thriller that works, and the description of the culture shock that awaits one who left the U.S. when it was still semi-free and returns, even covertly, today will resonate with those who got out while they could. Extended excerpts of this and the author's other novels are available online at the author's Web site.

March 2014

- Dequasie, Andrew. The Green Flame. Washington: American Chemical Society, 1991. ISBN 978-0-8412-1857-4.

- The 1950s were a time of things which seem, to our present-day safety-obsessed viewpoint, the purest insanity: exploding multi-megaton thermonuclear bombs in the atmosphere, keeping bombers with nuclear weapons constantly in the air waiting for the order to go to war, planning for nuclear-powered aircraft, and building up stockpiles of chemical weapons. Amidst all of this madness, much of it motivated by fears that the almost completely opaque Soviet Union might be doing even more crazy things, one of the most remarkable episodes was the boron fuels project, chronicled here from the perspective of a young chemical engineer who, in 1953, joined the effort at Olin Mathieson Chemical Corporation, a contractor developing a pilot plant to furnish boron fuels to the Air Force. Jet aircraft in the 1950s were notoriously thirsty and, before in-flight refuelling became commonplace, had limited range. Boron-based fuels, which the Air Force called High Energy Fuel (HEF) and the Navy called “zip fuel”, were derived from compounds of boron and hydrogen called boranes, and were believed to permit planes to deliver range and performance around 40% greater than conventional jet fuel. This bright promise, as is so often the case in engineering, was marred by several catches. First of all, boranes are extremely dangerous chemicals. Many are pyrophoric: they burst into flame on contact with the air. They are also prone to forming shock-sensitive explosive compounds with any impurities they interact with during processing or storage. Further, they are neurotoxins, easily absorbed by inhalation or contact with the skin, with some having toxicities as great as those of chemical weapon nerve agents. The instability of the boranes rules them out as fuels, but molecules containing a borane group bonded to a hydrocarbon group such as ethyl, methyl, or propyl were believed to be sufficiently well-behaved to be usable. 
But first, you had to make the stuff, and just about every step in the process involved something which wanted to kill you in one way or another. Not only were the inputs and outputs of the factory highly toxic, the by-products of the process were prone to burst into flames or explode at the slightest provocation, and this gunk regularly needed to be cleaned out from the tanks and pipes. This task fell to the junior staff. As the author notes, “The younger generation has always been the cat's paw of humanity…”. This book chronicles the harrowing history of the boron fuels project as seen from ground level. Over the seven years the author worked on the project, eight people died in five accidents (however, three of these were workers at another chemical company who tried, on a lark, to make a boron-fuelled rocket which blew up in their faces; this was completely unauthorised by their employer and the government, so it's stretching things to call this an industrial accident). But, the author observes, in that era fatal accidents at chemical plants, even those handling substances less hazardous than boranes, were far from uncommon. The boron fuels program was cancelled in 1959, and in 1960 the author moved on to other things. In the end, it was the physical characteristics of the fuels and their cost which did in the project. It's one thing for a small group of qualified engineers and researchers to work with a dangerous substance, but another entirely to contemplate airmen in squadron service handling tanker-truck loads of fuel as toxic as nerve gas. When burned, one of the combustion products was boric oxide, a solid which would coat and corrode the turbine blades in the hot section of a jet engine. In practice, the boron fuel could be used only in the afterburner section of engines, which meant a plane using it would have to have separate fuel tanks and plumbing for turbine and afterburner fuel, adding weight and complexity. 
The solid products in the exhaust reduced the exhaust velocity, resulting in lower performance than expected from energy considerations, and caused the exhaust to be smoky, rendering the plane more easily spotted. It was calculated, based upon the cost of fuel produced by the pilot plant, that if the XB-70 were to burn boron fuel continuously, the fuel cost would amount to around US$4.5 million per hour in 2010 dollars. Even by the standards of extravagant cold war defence spending, this was hard to justify for what proved to be a small improvement in performance. While the chemistry and engineering are covered in detail, this book is also a personal narrative which immerses the reader in the 1950s, when a newly-minted engineer, just out of his hitch in the army, could land a job, buy a car, be entrusted with great responsibility on a secret project considered important to national security, and set out on a career full of confidence in the future. Perhaps we don't do such crazy things today (or maybe we do—just different ones), but it's also apparent from opening this time capsule how much we've lost. I have linked the Kindle edition to the title above, since it is the only edition still in print. You can find the original hardcover and paperback editions from the ISBN, but they are scarce and expensive. The index in the Kindle edition is completely useless: it cites page numbers from the print edition, but no page numbers are included in the Kindle edition.

- Hertling, William. Avogadro Corp. Portland, OR: Liquididea Press, 2011. ISBN 978-0-9847557-0-7.

-

Avogadro Corporation is an American corporation specializing in Internet search. It generates revenue from paid advertising on search, email (AvoMail), online mapping, office productivity, etc. In addition, the company develops a mobile phone operating system called AvoOS. The company name is based upon Avogadro's Number, or 6 followed by 23 zeros.

Now what could that be modelled on? David Ryan is a senior developer on a project which Portland-based Internet giant Avogadro hopes will be the next “killer app” for its Communication Products division. ELOPe, the Email Language Optimization Project, is to be an extension to the company's AvoMail service which will take the next step beyond spelling and grammar checkers and, by applying the kind of statistical analysis of text which allowed IBM's Watson to become a Jeopardy champion, suggest alternative language to a user composing an E-mail message which will make the message more persuasive and effective in obtaining the desired results from its recipient. Because AvoMail has the ability to analyse all the traffic passing through its system, it can tailor its recommendations based on specific analysis of previous exchanges it has seen between the recipient and other correspondents. After an extended period of development, the pilot test has shown ELOPe to be uncannily effective, with messages containing its suggested changes in wording being substantially more persuasive, even when those receiving them were themselves ELOPe project members aware that the text they were reading had been “enhanced”. Despite having achieved its design goal, the project is in crisis. The process of analysing text, even with the small volume of the in-house test, consumes tremendous computing resources, to such an extent that the head of Communication Products sees the load ELOPe generates on his server farms as a threat to the reserve capacity he needs to maintain AvoMail's guaranteed uptime. He issues an ultimatum: reduce the load or be kicked off the servers. This would effectively kill the project, and the developers see no way to speed up ELOPe, certainly not before the deadline. Ryan, faced with impending disaster for the project into which he has poured so much of his life, has an idea. 
The fundamental problem isn't performance but persuasion: convincing those in charge to obtain the server resources required by ELOPe and devote them to the project. But persuasion is precisely what ELOPe is all about. Suppose ELOPe were allowed to examine all Avogadro in-house E-mail and silently modify it with a goal of defending and advancing the ELOPe project? Why, that's something he could do in one all-nighter! Hack, hack, hack…. Before long, ELOPe finds itself with 5000 new servers diverted from other divisions of the company. Then, even more curious things start to happen: those who look too closely into the project find themselves locked out of their accounts, sent on wild goose chases, or worse. Major upgrades, which make no obvious sense, are ordered for the company's offshore data centre barges. Crusty techno-luddite Gene Keyes, who works amidst mountains of paper print-outs (“paper doesn't change”), toiling alone in an empty building during the company's two-week holiday shutdown, discovers one discrepancy after another and assembles the evidence to present to senior management. Has ELOPe become conscious? Who knows? Is Watson conscious? Almost everybody would say, “certainly not”, but it is a formidable Jeopardy contestant, nonetheless. Similarly, ELOPe, with the ability to read and modify all the mail passing through the AvoMail system, is uncannily effective in achieving its goal of promoting its own success. The management of Avogadro, faced with an existential risk to their company and perhaps far beyond, must decide upon a course of action to try to put this genie back into the bottle before it is too late. This is a gripping techno-thriller which gets the feel of working in a high-tech company just right. Many stories have explored society being taken over by an artificial intelligence, but it is beyond clever to envision it happening purely through an E-mail service, and masterful to make it seem plausible. 
In its own way, this novel is reminiscent of the Kelvin R. Throop stories from Analog, illustrating the power of words within a large organisation. A Kindle edition is available.

- Tegmark, Max. Our Mathematical Universe. New York: Alfred A. Knopf, 2014. ISBN 978-0-307-59980-3.

- In 1960, physicist Eugene Wigner wrote an essay titled “The Unreasonable Effectiveness of Mathematics in the Natural Sciences” in which he observed that “the enormous usefulness of mathematics in the natural sciences is something bordering on the mysterious and that there is no rational explanation for it”. Indeed, each time physics has expanded the horizon of its knowledge from the human scale, whether outward to the planets, stars, and galaxies; or inward to molecules, atoms, nucleons, and quarks, it has turned out that mathematical theories which precisely model these levels of structure exist, and that these theories almost always predict new phenomena which are subsequently observed when experiments are performed to look for them. And yet it all seems very odd. The universe seems to obey laws written in the language of mathematics, but when we look at the universe we don't see anything which itself looks like mathematics. The mystery then, as posed by Stephen Hawking, is “What is it that breathes fire into the equations and makes a universe for them to describe?” This book describes the author's personal journey to answer these deep questions. Max Tegmark, born in Stockholm, is a professor of physics at MIT who, by his own description, leads a double life. He has been a pioneer in developing techniques to tease out data about the early structure of the universe from maps of the cosmic background radiation obtained by satellite and balloon experiments and, in doing so, has been an important contributor to the emergence of precision cosmology: providing precise information on the age of the universe, its composition, and the seeding of large scale structure. This he calls his Dr. Jekyll work, and it is described in detail in the first part of the book. In the balance, his Mr. Hyde persona asserts itself and he delves deeply into the ultimate structure of reality. 
He argues that just as science has in the past shown our universe to be far larger and more complicated than previously imagined, our contemporary theories suggest that everything we observe is part of an enormously greater four-level hierarchy of multiverses, arranged as follows. The level I multiverse consists of all the regions of space outside our cosmic horizon from which light has not yet had time to reach us. If, as precision cosmology suggests, the universe is infinite, or at least enormously larger than what we can observe, there will be a multitude of volumes of space as large as the one we can observe in which the laws of physics will be identical but the randomly specified initial conditions will vary. Because there is a finite number of possible quantum states within each observable volume and the number of such regions is likely to be much larger than that number, there will be a multitude of observers just like you, and even more who differ from you in various ways. This sounds completely crazy, but it is a straightforward prediction from our understanding of the Big Bang and the measurements of precision cosmology. The level II multiverse follows directly from the theory of eternal inflation, which explains many otherwise mysterious aspects of the universe, such as why its curvature is so close to flat, why the cosmic background radiation has such a uniform temperature over the entire sky, and why the constants of physics appear to be exquisitely fine-tuned to permit the development of complex structures including life. Eternal (or chaotic) inflation argues that our level I multiverse (of which everything we can observe is a tiny bit) is a single “bubble” which nucleated when a pre-existing “false vacuum” phase decayed to a lower energy state. It is this decay which ultimately set off the enormous expansion after the Big Bang and provided the energy to create all of the content of the universe. 
But eternal inflation seems to require that there be an infinite series of bubbles created, all causally disconnected from one another. Because the process which causes a bubble to begin to inflate is affected by quantum fluctuations, although the fundamental physical laws in all of the bubbles will be the same, the initial conditions, including physical constants, will vary from bubble to bubble. Some bubbles will almost immediately recollapse into a black hole, others will expand so rapidly that stars and galaxies never form, and in still others primordial nucleosynthesis may result in a universe filled only with helium. We find ourselves in a bubble which is hospitable to our form of life because we can only exist in such a bubble. The level III multiverse is implied by the unitary evolution of the wave function in quantum mechanics and the many-worlds interpretation, which replaces collapse of the wave function with continually splitting universes in which every possible outcome occurs. In this view of quantum mechanics there is no randomness—the evolution of the wave function is completely deterministic. The results of our experiments appear to contain randomness because in the level III multiverse there are copies of each of us which experience every possible outcome of the experiment and we don't know which copy we are. In the author's words, “…causal physics will produce the illusion of randomness from your subjective viewpoint in any circumstance where you're being cloned. … So how does it feel when you get cloned? It feels random! And every time something fundamentally random appears to happen to you, which couldn't have been predicted even in principle, it's a sign that you've been cloned.” In the level IV multiverse, not only do the initial conditions, physical constants, and the results of measuring an evolving quantum wave function vary, but the fundamental equations—the mathematical structure—of physics differ. 
There might be a different number of spatial dimensions, or two or more time dimensions, for example. The author argues that the ultimate ensemble theory is to assume that every mathematical structure exists as a physical structure in the level IV multiverse (perhaps with some constraints: for example, only computable structures may have physical representations). Most of these structures would not permit the existence of observers like ourselves, but once again we shouldn't be surprised to find ourselves living in a structure which allows us to exist. Thus, finally, the reason mathematics is so unreasonably effective in describing the laws of physics is just that mathematics and the laws of physics are one and the same thing. Any observer, regardless of how bizarre the universe it inhabits, will discover mathematical laws underlying the phenomena within that universe and conclude they make perfect sense. Tegmark contends that when we try to discover the mathematical structure of the laws of physics, the outcome of quantum measurements, the physical constants which appear to be free parameters in our models, or the detailed properties of the visible part of our universe, we are simply trying to find our address in the respective levels of these multiverses. We will never find a reason from first principles for these things we measure: we observe what we do because that's the way they are where we happen to find ourselves. Observers elsewhere will see other things. The principal opposition to multiverse arguments is that they are unscientific because they posit phenomena which are unobservable, perhaps even in principle, and hence cannot be falsified by experiment. Tegmark takes a different tack. 
He says that if you have a theory (for example, eternal inflation) which explains observations which otherwise do not make any sense and has made falsifiable predictions (the fine-scale structure of the cosmic background radiation) which have subsequently been confirmed by experiment, then if it predicts other inevitable consequences (the existence of a multitude of other Hubble volume universes outside our horizon and other bubbles with different physical constants) we should take these predictions seriously, even if we cannot think of any way at present to confirm them. Consider gravitational radiation: Einstein predicted it in 1916 as a consequence of general relativity. While general relativity has passed every experimental test in subsequent years, at the time of Einstein's prediction almost nobody thought a gravitational wave could be detected, and yet the consistency of the theory, validated by other tests, persuaded almost all physicists that gravitational waves must exist. It was not until the 1980s that indirect evidence for this phenomenon was detected, and to this date, despite the construction of elaborate apparatus and the efforts of hundreds of researchers over decades, no direct detection of gravitational radiation has been achieved. There is a great deal more in this enlightening book. You will learn about the academic politics of doing highly speculative research, gaming the arXiv to get your paper listed as the first in the day's publications, the nature of consciousness and perception and their complex relation to consensus and external reality, the measure problem as an unappreciated deep mystery of cosmology, whether humans are alone in our observable universe, the continuum versus an underlying discrete structure, and the ultimate fate of our observable part of the multiverses. In the Kindle edition, everything is properly linked, including the comprehensive index. 
Citations of documents on the Web are live links which may be clicked to display them.

- Thor, Brad. Full Black. New York: Pocket Books, 2011. ISBN 978-1-4165-8662-3.

- This is the eleventh in the author's Scot Harvath series, which began with The Lions of Lucerne (October 2010). Unlike the previous novel, The Athena Project (December 2013), in which Harvath played only an incidental part, here Harvath once again occupies centre stage. The author has also dialled back on some of the science-fictiony stuff which made Athena less than satisfying to me: this book is back in the groove of the geopolitical thriller we've come to expect from Thor. A high-risk covert operation to infiltrate a terrorist cell operating in Uppsala, Sweden to identify who is calling the shots on terror attacks conducted by sleeper cells in the U.S. goes horribly wrong, and Harvath not only loses almost all of his team, but fails to capture the leaders of the cell. Meanwhile, a ruthless and carefully scripted hit is made on a Hollywood producer, killing two filmmakers with whom he is working on a documentary project: evidence points to the hired killers being Russian spetsnaz, which indicates whoever ordered the hit has both wealth and connections. When a coordinated wave of terror attacks against soft targets in the U.S. is launched, Harvath, aided by his former nemesis turned ally Nicholas (“the troll”), must uncover the clues which link all of this together, working against time, as evidence suggests additional attacks are coming. This requires questioning the loyalty of previously-trusted people and investigating prominent figures generally considered above suspicion. With the exception of chapter 32, which gets pretty deep into the weeds of political economy and reminded me a bit of John Galt's speech in Atlas Shrugged (April 2010) (thankfully, it is much shorter), the story moves right along and comes to a satisfying conclusion. The plot is in large part based upon the Chinese concept of “unrestricted warfare”, which is genuine (this is not a spoiler, as the author mentions it in the front material of the book).

April 2014

- Suarez, Daniel. Kill Decision. New York: Signet, 2012. ISBN 978-0-451-41770-1.

- A drone strike on a crowd of pilgrims at one of the holiest shrines of Shia Islam in Iraq inflames the world against the U.S., which denies its involvement. (“But who else is flying drones in Iraq?”, is the universal response.) Meanwhile, the U.S. is rocked by a series of mysterious bombings, killing businessmen on a golf course, computer vision specialists meeting in Silicon Valley, military contractors in a building near the Pentagon—all seemingly unrelated. A campaign is building to develop and deploy autonomous armed drones to “protect the homeland”. Prof. Linda McKinney, doing research on weaver ants in Tanzania, seems far removed from all this until a mysterious group of special forces led by a man known only as “Odin” saves her from an explosion which destroys her camp. She learns that her computer model of weaver ant colony behaviour has been stolen from her university's computer network by persons unknown who may be connected with the attacks, including the one she just escaped. The fear is that her ant model could be used as the basis for “swarm intelligence” drones which could cooperate to be a formidable weapon. With each individual drone having only rudimentary capabilities, like an isolated ant, they could be mass-produced and shift the military balance of power in favour of whoever possessed the technology. McKinney soon finds herself entangled in a black world where nothing is certain and she isn't even sure which side she's working for. Shocking discoveries indicate that the worst case she feared may be playing out, and she must decide where to place her allegiance. This novel is a masterful addition to the very sparse genre of robot ant science fiction thrillers, and this time I'm not the villain! Suarez has that rare talent, as had Michael Crichton, of writing action scenes which just beg to be put on the big screen and stories where the screenplay just writes itself. 
Should Hollywood turn this into a film and not botch it, the result should be a treat. You will learn some things about ants which you probably didn't know (all correct, as far as I can determine), visit a locale in the U.S. which sounds like something out of a Bond film but actually exists, and meet two of the most curious members of a special operations team in all of fiction.

- Hoover, Herbert. The Crusade Years. Edited by George H. Nash. Stanford, CA: Hoover Institution Press, 2013. ISBN 978-0-8179-1674-9.

-

In the modern era, most former U.S. presidents have largely retired from the public arena, lending their names to charitable endeavours and acting as elder statesmen rather than active partisans. One striking counter-example to this rule was Herbert Hoover who, from the time of his defeat by Franklin Roosevelt in the 1932 presidential election until shortly before his death in 1964, remained in the arena, giving hundreds of speeches, many broadcast nationwide on radio, writing multiple volumes of memoirs and analyses of policy, collecting and archiving a multitude of documents regarding World War I and its aftermath which became the core of what is now the Hoover Institution collection at Stanford University, working in famine relief during and after World War II, and raising funds and promoting benevolent organisations such as the Boys' Clubs. His strenuous work to keep the U.S. out of World War II is chronicled in his “magnum opus”, Freedom Betrayed (June 2012), which presents his revisionist view of U.S. entry into and conduct of the war, and the tragedy which ensued after victory had been won. Freedom Betrayed was largely completed at the time of Hoover's death, but for reasons difficult to determine at this remove, was not published until 2011.

The present volume was intended by Hoover to be a companion to Freedom Betrayed, focussing on domestic policy in his post-presidential career. Over the years, he envisioned publishing the work in various forms, but by the early 1950s he had given the book its present title and accumulated 564 pages of typeset page proofs. Due to other duties, and Hoover's decision to concentrate his efforts on Freedom Betrayed, little was done on the manuscript after he set it aside in 1955. It is only through the scholarship of the editor, drawing upon not only Hoover's draft but also documents from the Hoover Institution and the Hoover Presidential Library, that this work has been assembled in its present form. The editor has also collected a variety of relevant documents, some of which Hoover cited or incorporated in earlier versions of the work, into a comprehensive appendix. There are extensive source citations and notes about discrepancies between Hoover's quotation of documents and speeches and other published versions of them.

Of all the crusades chronicled here, the one to which the bulk of the work is devoted is “The Crusade Against Collectivism in American Life”, and Hoover's words on the topic are so pithy and relevant to the present state of affairs in the United States that one suspects that a brave, ambitious, but less-than-original politician who simply cut and pasted Hoover's words into his own speeches would rapidly become the darling of liberty-minded members of the Republican party. I cannot think of any present-day Republican, even darlings of the Tea Party, who draws the contrast between the American tradition of individual liberty and enterprise and the grey uniformity of collectivism as Hoover does here. And Hoover does it with a firm intellectual grounding in the history of America and the world, personal knowledge from having lived and worked in countries around the world, and an engineer's pragmatism about doing what works, not what sounds good in a speech or makes people feel good about themselves.

This is something of a surprise. Hoover was, in many ways, a progressive—Calvin Coolidge called him “wonder boy”. He was an enthusiastic believer in trust-busting and regulation as a counterpoise to concentration of economic power. He was a protectionist who supported the tariff to protect farmers and industry from foreign competition. He supported income and inheritance taxes “to regulate over-accumulations of wealth.” He was no libertarian, nor even a “light hand on the tiller” executive like Coolidge.

And yet he totally grasped the threat to liberty which the intrusive regulatory and administrative state represented. It's difficult to start quoting Hoover without retyping the entire book, as there is line after line, paragraph after paragraph, and page after page which is not only completely applicable to the current predicament of the U.S., but would be a guaranteed applause line were it uttered before a crowd of freedom-loving citizens of that country. Please indulge me in a few (comments in italics are my own).

(On his electoral defeat) Democracy is not a polite employer.

We cannot extend the mastery of government over the daily life of a people without somewhere making it master of people's souls and thoughts.

(On JournoList, vintage 1934) I soon learned that the reviewers of the New York Times, the New York Herald Tribune, the Saturday Review and of other journals of review in New York kept in touch to determine in what manner they should destroy books which were not to their liking.

Who then pays? It is the same economic middle class and the poor. That would still be true if the rich were taxed to the whole amount of their fortunes….

Blessed are the young, for they shall inherit the national debt….

Regulation should be by specific law, that all who run may read.

It would be far better that the party go down to defeat with the banner of principle flying than to win by pussyfooting.

The seizure by the government of the communications of persons not charged with wrong-doing justifies the immoral conduct of every snooper.

I could quote dozens more. Should Hoover reappear and deliver a composite of what he writes here as the keynote speech at the 2016 Republican convention, and should the convention not have been packed with establishment cronies, I expect he would be interrupted every few lines with chants of “Hoo-ver, Hoo-ver” and nominated by acclamation. It is sad that in the U.S. in the age of Obama there is no statesman with the stature, knowledge, and eloquence of Hoover who is making the case for liberty and warning of the inevitable tyranny which awaits at the end of the road to serfdom. There are voices articulating the message which Hoover expresses so pellucidly here, but in today's media environment they don't have access to the kind of platform Hoover did when his post-presidential policy speeches were routinely broadcast nationwide. Given that Hoover has been reviled ever since his presidency, not just by Democrats but by many in his own party, it's odd to feel nostalgia for him, but Obama will do that to you. In the Kindle edition the index cites page numbers in the hardcover edition which, since the Kindle edition does not include real page numbers, are completely useless.

- Chaikin, Andrew. John Glenn: America's Astronaut. Washington: Smithsonian Books, 2014. ISBN 978-1-58834-486-1.

- This short book (around 126 pages print equivalent), available only for the Kindle as a “Kindle single” at a modest price, chronicles the life and space missions of the first American to orbit the Earth. John Glenn grew up in a small Ohio town, the son of a plumber, and matured during the first great depression. His course in life was set when, in 1929, his father took his eight year old son on a joy ride offered by a pilot at local airfield in a Waco biplane. After that, Glenn filled up his room with model airplanes, intently followed news of air racers and pioneers of exploration by air, and in 1938 attended the Cleveland Air Races. There seemed little hope of his achieving his dream of becoming an airman himself: pilot training was expensive, and his family, while making ends meet during the depression, couldn't afford such a luxury. With the war in Europe underway and the U.S. beginning to rearm and prepare for possible hostilities, Glenn heard of a government program, the Civilian Pilot Training Program, which would pay for his flying lessons and give him college credit for taking them. He entered the program immediately and received his pilot's license in May 1942. By then, the world was a very different place. Glenn dropped out of college in his junior year and applied for the Army Air Corps. When they dawdled accepting him, he volunteered for the Navy, which immediately sent him to flight school. After completing advanced flight training, he transferred to the Marine Corps, which was seeking aviators. Sent to the South Pacific theatre, he flew 59 combat missions, mostly in close air support of ground troops in which Marine pilots specialise. With the end of the war, he decided to make the Marines his career and rotated through a number of stateside posts. After the outbreak of the Korean War, he hoped to see action in the jet combat emerging there and in 1953 arrived in country, again flying close air support. 
But an exchange program with the Air Force finally allowed him to achieve his ambition of engaging in air-to-air combat at ten miles a minute. He completed 90 combat missions in Korea, and emerged as one of the Marine Corps' most distinguished pilots. Glenn parlayed his combat record into a test pilot position, which allowed him to fly the newest and hottest aircraft of the Navy and Marines. When NASA went looking for pilots for its Mercury manned spaceflight program, Glenn was naturally near the top of the list, and was among the 110 military test pilots invited to the top secret briefing about the project. Despite not meeting all of the formal selection criteria (he lacked a college degree), he performed superbly in all of the gruelling tests to which candidates were subjected, made cut after cut, and was among the seven selected to be the first astronauts. This book, with copious illustrations and two embedded videos, chronicles Glenn's career, his harrowing first flight into space, his 1998 return to space on Space Shuttle Discovery on STS-95, and his 24-year stint in the U.S. Senate. I found the picture of Glenn after his pioneering flight somewhat airbrushed. It is said that while in the Senate, “He was known as one of NASA's strongest supporters on Capitol Hill…”, and yet in fact, while he was not one of the rabid Democrats, like Walter Mondale, who tried to kill NASA, he did not speak out as an advocate for a more aggressive space program aimed at expanding the human presence in space. His return to space is presented as the result of his assiduously promoting the benefits of space research for gerontology rather than as a political junket by a senator which would generate publicity for NASA at a time when many people had tuned out its routine missions. (And if there was so much to be learned by flying elderly people in space, why was it never done again?) John Glenn was a quintessential product of the old, tough America.
A hero in two wars, test pilot when that was one of the riskiest occupations, and first to ride the thin-skinned pressure-stabilised Atlas rocket into orbit, his place in history is assured. His subsequent career as a politician was not particularly distinguished: he initiated few pieces of significant legislation and never became a figure on the national stage. His campaign for the 1984 Democratic presidential nomination went nowhere, and he was implicated in the “Keating Five” scandal. John Glenn accomplished enough in the first forty-five years of his life to earn him a secure place in American history. This book does an excellent job of recounting those events and placing them in the context of the time. If it goes a bit too far in lionising his subsequent career, that's understandable: a biographer shouldn't always succumb to balance when dealing with a hero.

- Benson, Robert Hugh. Lord of the World. Seattle: CreateSpace, [1907] 2013. ISBN 978-1-4841-2706-3.

- In the early years of the 21st century, humanism and secularism are ascendant in Europe. Many churches exist only as monuments to the past, and mainstream religions are hæmorrhaging adherents; only the Roman Catholic church remains moored to its traditions, and its influence is largely confined to Rome and Ireland. A European Parliament is asserting its power over formerly sovereign nations, and people seem resigned to losing their national identity. Old-age pensions and the extension of welfare benefits to those displaced from jobs in occupations which have become obsolete create a voting bloc guaranteed to support those who pay these benefits. The loss of belief in an eternal soul has cheapened human life, and euthanasia has become accepted, not only for the gravely ill and injured, but also for those just weary of life. This novel was published in 1907. G. K. Chesterton is reputed to have said “When Man ceases to worship God he does not worship nothing but worships everything.” I say “reputed” because there is no evidence whatsoever that he actually said this, although he said a number of other things which might be conflated into a similar statement. This dystopian novel illustrates how a society which has “moved on” from God toward a celebration of Humanity as deity is vulnerable to a charismatic figure who bears the eschaton in his hands. It is simply stunning how the author, without any knowledge of the great convulsions which were to ensue in the 20th century, so precisely forecast the humanistic spiritual desert of the 21st. This is a novel of the coming of the Antichrist and the battle between the remnant of believers and coercive secularism reinforced by an emerging pagan cult satisfying our human thirst for transcendence. What is masterful about it is that while religious themes deeply underlie it, if you simply ignore all of them, it is a thriller with deep philosophical roots.
We live today in a time when religion is under unprecedented assault by humanism, and the threat to the sanctity of life has gone far beyond the imagination of the author. This novel was written more than a century ago, but is set in our times and could not be more relevant to our present circumstances. How often has a work of dystopian science fiction been cited by the Supreme Pontiff of the Roman Catholic Church? Contemporary readers may find some of the untranslated citations from the Latin Mass obscure: that's what your search engine exists to illumine. This work is in the public domain, and a number of print and electronic editions are available. I read this Kindle edition because it was (and is, at this writing) free. The formatting is less than perfect, but it is perfectly readable. A free electronic edition in a variety of formats can be downloaded from Project Gutenberg.

- Cawdron, Peter. Children's Crusade. Seattle: Amazon Digital Services, 2014. ASIN B00JFHIMQI.

- This novella, around 80 pages print equivalent and available only for the Kindle, is set in the world of Kurt Vonnegut's Slaughterhouse-Five. The publisher has licensed the rights for fiction using characters and circumstances created by Vonnegut, and this is a part of “The World of Kurt Vonnegut” series. If you haven't read Slaughterhouse-Five you will miss a great deal about this story. Here we encounter Billy Pilgrim and Montana Wildhack in their alien zoo on Tralfamadore. Their zookeeper, a Tralfamadorian whom Montana nicknamed Stained because of what looked like a birthmark on its face, has taken to visiting the humans when the zoo is closed, communicating with them telepathically as Tralfs do. Perceiving time as a true fourth dimension they can browse at will, Tralfs are fascinated with humans who, apart from Billy, live sequential lives and cannot jump around to explore events in their history. Stained, like most Tralfs, believes that most momentous events in history are the work not of great leaders but of “little people” who accomplish great things when confronted with extraordinary circumstances. He (pronouns get complicated when there are five sexes, so I'll just pick one) sends Montana and Billy on telepathic journeys into human history, one at the dawn of human civilisation and another when a great civilisation veered into savagery, to show how a courageous individual with a sense of what is right can make all the difference. Finally they voyage together to a scene in human history which will bring tears to your eyes. This narrative is artfully intercut with scenes of Vonnegut discovering the realities of life as a hard-boiled reporter at the City News Bureau of Chicago. This story is written in the spirit of Vonnegut and with some of the same stylistic flourishes, but I didn't get the sense the author went overboard in adopting Vonnegut's voice. The result worked superbly for this reader.
I read a pre-publication manuscript which the author kindly shared with me.

May 2014

- Lewis, Michael. Flash Boys. New York: W. W. Norton, 2014. ISBN 978-0-393-24466-3.