2016

January 2016

- Waldman, Jonathan. Rust. New York: Simon & Schuster, 2015. ISBN 978-1-4516-9159-7.

- In May of 1980 two activists, protesting the imprisonment of a Black Panther convicted of murder, climbed the Statue of Liberty in New York harbour, planning to unfurl a banner high on the statue. After spending a cold and windy night aloft, they descended and surrendered to the New York Police Department's Emergency Service Unit. Because of fears that the climbers might have damaged the fragile copper cladding of the monument, a comprehensive inspection was undertaken. What was found was shocking. The structure of the Statue of Liberty was designed by Alexandre-Gustave Eiffel, and consists of an iron frame weighing 135 tons which supports the 80-ton copper skin. As naval architects know well, a structure using two dissimilar metals such as iron and copper runs a severe risk of galvanic corrosion, especially in an environment such as the sea air of a harbour. If the iron and copper were to come into contact with moisture present, an electric current would flow across the junction, and the iron would be consumed in the process. Eiffel's design prevented the iron and copper from touching one another by separating them with spacers made of asbestos impregnated with shellac. What Eiffel didn't anticipate was that over the years superintendents of the statue would decide to “protect” its interior by applying various kinds of paint. By 1980 eight coats of paint had accumulated, almost as thick as the copper skin. The paint trapped water between the skin and the iron frame, and this set electrolysis in motion. One third of the rivets in the frame were damaged or missing, and some of the frame's iron ribs had lost two thirds of their material. The asbestos insulators had absorbed water and were long gone. The statue was at risk of structural failure. A private fund-raising campaign raised US$ 277 million to restore the statue, and the restoration ended up replacing most of its internal structure. On July 4th, 1986, the restored statue was inaugurated, marking its 100th anniversary.
Earth, uniquely among known worlds, has an atmosphere with free oxygen, produced by photosynthetic plants. While much appreciated by creatures like ourselves who breathe it, oxygen is a highly reactive gas and combines with many other elements, either violently in fire, or more slowly in reactions such as the rusting of metals. Further, 71% of the Earth's surface is covered by oceans, whose salty water promotes other forms of corrosion all too familiar to owners of boats. This book describes humanity's “longest war”: the battle against the corruption of our works by the inexorable chemical process of corrosion. Consider an everyday object much more humble than the Statue of Liberty: the aluminium beverage can. The modern can is one of the most highly optimised products of engineering ever created. Around 180 billion cans are produced and consumed every year around the world: four six-packs for every living human being. Reducing the mass of each can by just one gram would save 180,000 metric tons of aluminium a year, worth almost 300 million dollars at present prices, so a long list of clever tricks has been employed to reduce the mass of cans. But it doesn't matter how light or inexpensive the can is if it explodes, leaks, or changes the flavour of its contents. Coca-Cola, with a pH of 2.75 and a witches’ brew of ingredients, under a pressure of 6 atmospheres, is as corrosive to bare aluminium as battery acid. If the inside of the can were not coated with a proprietary epoxy lining (whose composition depends upon the product being canned, and is carefully guarded by can manufacturers), the Coke would corrode through the thin walls of the can in just three days. The process of scoring the pop-top removes the coating around the score, and risks corrosion and leakage if a can is stored on its side; don't do that.
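The can figures hang together, and a few lines of arithmetic show it. This is a back-of-envelope sketch, not from the book. It uses the review's numbers plus one labelled assumption: a world population of roughly 7.3 billion, the approximate 2015 figure. The implied price per kilogram is derived by taking the "almost 300 million dollars" at face value.

```python
# Back-of-envelope check of the beverage-can figures in the review.
CANS_PER_YEAR = 180e9          # from the review
WORLD_POPULATION = 7.3e9       # assumption: approximate 2015 world population
SAVING_PER_CAN_G = 1.0         # one gram lighter per can (from the review)
SAVING_VALUE_USD = 300e6       # "almost 300 million dollars" (from the review)

cans_per_person = CANS_PER_YEAR / WORLD_POPULATION
six_packs_per_person = cans_per_person / 6
saving_tonnes = CANS_PER_YEAR * SAVING_PER_CAN_G / 1e6     # grams -> metric tons
implied_price_per_kg = SAVING_VALUE_USD / (saving_tonnes * 1000)

print(f"{cans_per_person:.1f} cans ({six_packs_per_person:.1f} six-packs) per person")
print(f"{saving_tonnes:,.0f} t of aluminium saved per year")
print(f"implied aluminium price: about ${implied_price_per_kg:.2f} per kg")
```

The result is about 24.7 cans, or four six-packs, per person. The 180,000 t saving follows directly from one gram times 180 billion cans, and the implied price is a little under two dollars per kilogram.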
The author takes us on an eclectic tour of the history of corrosion and those who battle it, ranging from the invention of stainless steel, through the inspection of the trans-Alaska oil pipeline by sending a “pig” (essentially a robot submarine equipped with electronic sensors) down its entire length, to evangelists for galvanizing (zinc coating) steel. We meet Dan Dunmire, the Pentagon's rust czar, who estimates that corrosion costs the military on the order of US$ 20 billion a year and describes how even the humblest of mitigation strategies can have huge payoffs. A new kind of gasket intended to prevent corrosion where radio antennas protrude through the fuselage of aircraft returned 175 times its investment in a single year. Overall return on investment in the projects funded by his office is estimated at fifty to one. We're introduced to the world of the corrosion engineer, a specialty which, while not glamorous, pays well and offers superb job security, since rust will always be with us. Not everybody we encounter battles rust. Photographer Alyssha Eve Csük has turned corrosion into fine art. Working at the abandoned Bethlehem Steel Works in Pennsylvania, perhaps the rustiest part of the rust belt, she clandestinely scrambles around the treacherous industrial landscape in search of the beauty in corrosion. This book mixes the science of corrosion with the stories of those who fight it, in the past and today. It is an enlightening and entertaining look into the most mundane of phenomena, but one which affects all the technological works of mankind.

- Levenson, Thomas. The Hunt for Vulcan. New York: Random House, 2015. ISBN 978-0-8129-9898-6.

- The history of science has been marked by discoveries in which, by observing where nobody had looked before, with new and more sensitive instruments, or at different aspects of reality, new and often surprising phenomena have been detected. But some of the most profound of our discoveries about the universe we inhabit have come from things we didn't observe, but expected to. By the nineteenth century, one of the most solid pillars of science was Newton's law of universal gravitation. With a single equation a schoolchild could understand, it explained why objects fall, why the Moon orbits the Earth and the Earth and other planets the Sun, the tides, and the motion of double stars. But still, one wonders: is the law of gravitation exactly as Newton described, and does it work everywhere? Newton's gravity gets weaker as the inverse square of the distance between two objects (if you double the distance, the gravitational force becomes four times weaker [2² = 4]) but has unlimited range: every object in the universe attracts every other object, however weakly, regardless of distance. But might gravity not, say, weaken faster at great distances? If this were the case, the orbits of the outer planets would differ from the predictions of Newton's theory. Comparing astronomical observations to calculated positions of the planets was a way to discover such phenomena. In 1781 astronomer William Herschel discovered Uranus, the first planet not known since antiquity. (Uranus is dim but visible to the unaided eye and doubtless had been seen innumerable times, including by astronomers who recorded it in star catalogues, but Herschel was the first to note its non-stellar appearance through his telescope, although he initially believed it to be a comet.) Herschel wasn't looking for a new planet; he was observing stars for another project when he happened upon Uranus.
Further observations of the object confirmed that it was moving in a slow, almost circular orbit, around twice the distance of Saturn from the Sun. Given knowledge of the positions, velocities, and masses of the planets and Newton's law of gravitation, it should be possible to predict the past and future motion of solar system bodies for an arbitrary period of time. Working backward, comparing the predicted influence of bodies on one another with astronomical observations, the masses of the individual planets can be estimated to produce a complete model of the solar system. This great work was undertaken by Pierre-Simon Laplace, who published his Mécanique céleste in five volumes between 1799 and 1825. As the middle of the 19th century approached, ongoing precision observations of the planets indicated that all was not proceeding as Laplace had foreseen. Uranus, in particular, continued to diverge from where it was expected to be after taking into account the gravitational influence upon its motion by Saturn and Jupiter. Could Newton have been wrong, and the influence of gravity different over the vast distance of Uranus from the Sun? In the 1840s two mathematical astronomers, Urbain Le Verrier in France and John Couch Adams in Britain, working independently, investigated the possibility that Newton was right, but that an undiscovered body in the outer solar system was responsible for perturbing the orbit of Uranus. After almost unimaginably tedious calculations (done using tables of logarithms and pencil-and-paper arithmetic), both Le Verrier and Adams found a solution and predicted where to observe the new planet. Adams failed to persuade astronomers to look for the new world, but Le Verrier prevailed upon an astronomer at the Berlin Observatory to try, and Neptune was duly discovered within one degree (twice the apparent size of the full Moon) of his prediction. This was Newton triumphant.
Not only was the theory vindicated, it had been used, for the first time in history, to predict the existence of a previously unknown planet and tell the astronomers right where to point their telescopes to observe it. The mystery of the outer solar system had been solved. But problems remained much closer to the Sun. The planet Mercury orbits the Sun every 88 days in an eccentric orbit which never exceeds half the Earth's distance from the Sun. It is a small world, with just 6% of the Earth's mass. As an inner planet, Mercury never appears more than 28° from the Sun, and can best be observed in the morning or evening sky when it is near its maximum elongation from the Sun. (With a telescope, it is possible to observe Mercury in broad daylight.) Flush with his success with Neptune, and rewarded with the post of director of the Paris Observatory, in 1859 Le Verrier turned his attention toward Mercury. Again, through arduous calculations (by this time Le Verrier had a building full of minions to assist him, but so grueling was the work and so demanding a boss was Le Verrier that during his tenure at the Observatory 17 astronomers and 46 assistants quit) the influence of all of the known planets upon the motion of Mercury was worked out. If Mercury orbited a spherical Sun without other planets tugging on it, the point of its closest approach to the Sun (perihelion) in its eccentric orbit would remain fixed in space. But with the other planets exerting their gravitational influence, Mercury's perihelion should advance around the Sun at a rate of 526.7 arcseconds per century. However, astronomers who had been following the orbit of Mercury for decades measured the actual advance of the perihelion as 565 arcseconds per century. This left a discrepancy of 38.3 arcseconds per century, for which there was no explanation. (The modern value of this anomalous precession of Mercury's perihelion, based upon more precise observations over a longer period of time, is 43 arcseconds per century.)
Although small (recall that there are 1,296,000 arcseconds in a full circle), this anomalous precession was much larger than the margin of error in observations and clearly indicated something was amiss. Could Newton be wrong? Le Verrier thought not. Just as he had done for the anomalies of the orbit of Uranus, Le Verrier undertook to calculate the properties of an undiscovered object which could perturb the orbit of Mercury and explain the perihelion advance. He found that a planet closer to the Sun (or a belt of asteroids with equivalent mass) would do the trick. Such an object, so close to the Sun, could easily have escaped detection, as it could only be readily observed during a total solar eclipse or when passing in front of the Sun's disc (a transit). Le Verrier alerted astronomers to watch for transits of this intra-Mercurian planet. On March 26, 1859, Edmond Modeste Lescarbault, a physician in a small provincial town and passionate amateur astronomer, turned his (solar-filtered) telescope toward the Sun. He saw a small dark dot crossing the disc of the Sun, taking one hour and seventeen minutes to transit, just as expected by Le Verrier. He communicated his results to the great man, and after a visit and detailed interrogation, the astronomer certified the doctor's observation as genuine and computed the orbit for the new planet. The popular press jumped upon the story. By February 1860, planet Vulcan was all the rage. Other observations began to arrive, from both credible and unknown observers. Professional astronomers mounted worldwide campaigns to observe the Sun around the period of predicted transits of Vulcan. All of the planned campaigns came up empty. Searches for Vulcan became a major focus of solar eclipse expeditions. Unless the eclipse happened to occur when Vulcan was in conjunction with the Sun, it should be readily observable when the Sun was obscured by the Moon.
Eclipse expeditions prepared detailed star charts for the vicinity of the Sun to exclude known stars for the search during the fleeting moments of totality. In 1878, an international party of eclipse chasers including Thomas Edison descended on Rawlins, Wyoming, to hunt Vulcan in an eclipse crossing that frontier town. One group spotted Vulcan; others didn't. Controversy and acrimony ensued. After 1878, most professional astronomers lost interest in Vulcan. The anomalous advance of Mercury's perihelion was mostly set aside as “one of those things we don't understand”, much as astronomers regard dark matter today. In 1915, Einstein published his theory of gravitation: general relativity. It predicted that when objects moved rapidly or gravitational fields were strong, their motion would deviate from the predictions of Newton's theory. Einstein recalled the moment when he performed the calculation of the motion of Mercury in his just-completed theory. It predicted precisely the perihelion advance observed by the astronomers. He said that his heart shuddered in his chest and that he was “beside himself with joy.” Newton was wrong! For the extreme conditions of Mercury's orbit, so close to the Sun, Einstein's theory of gravitation is required to obtain results which agree with observation. There was no need for planet Vulcan, and now it is mostly forgotten. But the episode is instructive as to how confidence in long-accepted theories and wishful thinking can lead us astray when what might be needed is an overhaul of our most fundamental theories. A century hence, which of our beliefs will be viewed as we regard planet Vulcan today?
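The size of Einstein's correction is easy to reproduce. The standard first-order general-relativistic perihelion advance per orbit is Δφ = 6πGM / (c² a (1 − e²)), where GM is the Sun's gravitational parameter, a is Mercury's semi-major axis, and e is its eccentricity. The sketch below evaluates it with rounded reference values for those quantities. These constants are assumptions supplied here, not figures from the book, and the function name is illustrative.

```python
import math

# Rounded reference values (assumed here; not taken from the review).
GM_SUN = 1.32712440018e20      # Sun's gravitational parameter, m^3/s^2
C = 299_792_458.0              # speed of light, m/s
A = 5.7909e10                  # Mercury's semi-major axis, m
E = 0.2056                     # Mercury's orbital eccentricity
PERIOD_DAYS = 87.969           # Mercury's orbital period, days
ARCSEC_PER_RADIAN = 180 * 3600 / math.pi

def gr_precession_arcsec_per_century():
    """First-order general-relativistic perihelion advance of Mercury."""
    per_orbit = 6 * math.pi * GM_SUN / (C**2 * A * (1 - E**2))  # radians per orbit
    orbits_per_century = 36525 / PERIOD_DAYS                     # Julian century in days
    return per_orbit * orbits_per_century * ARCSEC_PER_RADIAN

print(f"{gr_precession_arcsec_per_century():.1f} arcseconds per century")
```

The result comes out very close to the 43 arcseconds per century cited in the review. No contribution from the other planets, or from a hypothetical Vulcan, is included.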

- Ward, Jonathan H. Countdown to a Moon Launch. Cham, Switzerland: Springer International, 2015. ISBN 978-3-319-17791-5.

- In the companion volume, Rocket Ranch (December 2015), the author describes the gargantuan and extraordinarily complex infrastructure which was built at the Kennedy Space Center (KSC) in Florida to assemble, check out, and launch the Apollo missions to the Moon and the Skylab space station. The present book explores how that hardware was actually used, following the “processing flow” of the Apollo 11 launch vehicle and spacecraft from the arrival of components at KSC to the moment of launch. As intricate as the hardware was, it wouldn't have worked, nor would it have been possible to launch flawless mission after flawless mission on time, had it not been for the management tools employed to coordinate every detail of processing. Central to this was PERT (Program Evaluation and Review Technique), a methodology developed by the U.S. Navy in the 1950s to manage the Polaris submarine and missile systems. PERT represents a project as a graph of dependencies in which milestones are connected by activities. Each activity has an estimated time to completion. A milestone might be, say, the installation of the guidance system into a launch vehicle. That milestone would depend upon the assembly of the components of the guidance system (gyroscopes, sensors, electronics, structure, etc.), each of which would depend upon its own components. Downstream, the integrated test of the launch vehicle would depend upon the installation of the guidance system. Many activities proceed in parallel and only come together at a milestone which depends upon all of them. For example, the processing and installation of rocket engines is completely independent of work on the guidance system until they join at a milestone where an engine steering test is performed.
As a project progresses, the time estimates for the various activities will be confronted with reality: some will be completed ahead of schedule while others will slip due to unforeseen problems or over-optimistic initial forecasts. This, in turn, ripples downstream in the dependency graph, changing the time available for later activities if the final completion milestone is to be met. For any given graph at a particular time, there will be a critical path of activities on which a schedule slip in any one will delay the completion milestone. Each lower-level milestone in the graph has its own critical path leading to it. As milestones are completed ahead of or behind schedule, the overall critical path will shift. Knowing the critical path allows program managers to concentrate resources on items along it to avoid, wherever possible, overall schedule slips (with the attendant extra costs). Now all this sounds complicated, and in a project with the scope of Apollo, it is almost bewildering to contemplate. The Launch Control Center was built with four firing rooms. Three were outfitted with all of the consoles to check out and launch a mission, but the fourth cavernous room ended up being used to display and maintain the PERT charts for activities in progress. Three levels of charts were maintained. Level A was used by senior management and contained hundreds of major milestones and activities. Each of these was expanded into level B charts which, taken together, tracked in excess of 7000 milestones. These, in turn, were broken down into detail on level C charts, which tracked more than 40,000 activities. The level B and C charts were displayed on more than 400 square metres of wall space in the back room of firing room four. As these detailed milestones were completed on the level C charts, changes would propagate down that chart, and those which affected its completion would propagate upward to the level B and A charts.
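Finding the critical path takes two passes over the dependency graph. A forward pass computes each activity's earliest finish. A backward pass computes the latest finish that will not delay the final milestone. The difference between the two is the activity's slack, and the zero-slack activities form the critical path.

The sketch below is a minimal illustration in Python, not the procedure used at KSC, which, as the review notes, was done by hand. It makes several simplifying assumptions:

- It uses an activity-on-node model rather than PERT's milestone-and-activity graph.
- Each activity gets a single fixed duration; classic PERT derives durations from optimistic, most likely, and pessimistic estimates.
- The activity names and durations are hypothetical, loosely modelled on the review's guidance-system and engine example.

```python
from graphlib import TopologicalSorter  # Python 3.9+

def critical_path(tasks):
    """Return (project length, zero-slack activities, slack per activity).

    tasks maps activity name -> (duration, list of predecessor names).
    """
    graph = {name: deps for name, (_, deps) in tasks.items()}
    order = list(TopologicalSorter(graph).static_order())  # predecessors first

    # Forward pass: earliest finish of each activity.
    earliest = {}
    for name in order:
        duration, deps = tasks[name]
        earliest[name] = max((earliest[d] for d in deps), default=0) + duration
    project_end = max(earliest.values())

    # Backward pass: latest finish that does not delay the final milestone.
    successors = {name: [] for name in tasks}
    for name, (_, deps) in tasks.items():
        for d in deps:
            successors[d].append(name)
    latest = {}
    for name in reversed(order):
        latest[name] = min((latest[s] - tasks[s][0] for s in successors[name]),
                           default=project_end)

    slack = {name: latest[name] - earliest[name] for name in tasks}
    critical = [name for name in order if slack[name] == 0]  # zero-slack activities
    return project_end, critical, slack

# Hypothetical activities: (duration in arbitrary time units, predecessors).
tasks = {
    "gyroscopes": (5, []),
    "guidance_electronics": (8, []),
    "install_guidance": (3, ["gyroscopes", "guidance_electronics"]),
    "process_engines": (10, []),
    "install_engines": (4, ["process_engines"]),
    "engine_steering_test": (2, ["install_guidance", "install_engines"]),
}

end, critical, slack = critical_path(tasks)
print(end, critical)
print(slack)
```

With these made-up numbers, the engine chain has zero slack and forms a critical path of 16 units. The guidance installation could slip by 3 units and the gyroscopes by 6 without delaying the steering test. Lengthening a guidance activity beyond its slack and re-running the function shows the critical path shifting, as the review describes.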
Now, here's the most breathtaking thing about this: they did it all by hand! For most of the Apollo program, computer implementations of PERT were not available (or those that existed could not handle this level of detail). (Today, the PERT network for processing an Apollo mission could be handled on a laptop computer.) There were dozens of analysts and clerks charged with updating the networks, with the processing flow displayed on an enormous board with magnetic strips which could be shifted around by people climbing up and down rolling staircases. Photographers would take pictures of the board which were printed and distributed to managers monitoring project status. If PERT was essential to coordinating all of the parallel activities in preparing a spacecraft for launch, configuration control was critical to ensure that when the countdown reached T0, everything would work as expected. Just as there was a network of dependencies in the PERT chart, the individual components were tested, subassemblies were tested, assemblies of them were tested, all leading up to an integrated test of the assembled launcher and spacecraft. The successful completion of a test established a tested configuration for the item. Anything which changed that configuration in any way, for example unplugging a cable and plugging it back in, required re-testing to confirm that the original configuration had been restored. (One of the pins in the connector might not have made contact, for instance.) This was all documented by paperwork signed off by three witnesses. The mountain of paper was intimidating; there was even a slide rule calculator for estimating the cost of various kinds of paperwork. With all of this management superstructure, it may seem a miracle that anything got done at all.
But, as the end of the decade approached, the level of activity at KSC was relentless (and took a toll upon the workforce, although many recall it as the most intense and rewarding part of their careers). Several missions were processed in parallel: Apollo 11 rolled out to the launch pad while Apollo 10 was still en route to the Moon, and Apollo 12 was being assembled and tested. To illustrate how all of these systems and procedures came together, the author takes us through the processing of Apollo 11 in detail, starting around six months before launch, when the Saturn V stages and the command, service, and lunar modules arrived independently from the contractors who built them or the NASA facilities where they had been individually tested. The original concept for KSC was that it would be an “operational spaceport” which would assemble pre-tested components into flight vehicles, run integrated system tests, and then launch them in an assembly-line fashion. In reality, the Apollo and Saturn programs never matured to this level, and were essentially development and test projects throughout. Components not only arrived at KSC with “some assembly required”; they often were subject to a blizzard of engineering change orders which required partially disassembling equipment to make modifications, then exhaustive re-tests to verify the previously tested configuration had been restored. Apollo 11 encountered relatively few problems in processing, so experiences from other missions where problems arose are interleaved to illustrate how KSC coped with contingencies. While Apollo 16 was on the launch pad, a series of mistakes during the testing process damaged a propellant tank in the command module.
The only way to repair this was to roll the entire stack back to the Vehicle Assembly Building, remove the command and service modules, return them to the spacecraft servicing building, de-mate them, pull the heat shield from the command module, change out the tank, then put everything back together, re-stack, and roll back to the launch pad. Imagine how many forms had to be filled out. The launch was delayed just one month. The process of servicing the vehicle on the launch pad is described in detail. Many of the operations, such as filling tanks with toxic hypergolic fuel and oxidiser, which ignite on contact with one another, required evacuating the pad of all non-essential personnel and special precautions for those engaged in these hazardous tasks. As launch approached, the hurdles became higher: a Launch Readiness Review and the Countdown Demonstration Test, a full dress rehearsal of the countdown up to the moment before engine start, including fuelling all of the stages of the launch vehicle (and then de-fuelling them after conclusion of the test). There is a wealth of detail here, including many obscure items I've never encountered before. Consider “Forward Observers”. When the Saturn V launched, most personnel and spectators were kept a safe distance of more than 5 km from the launch pad in case of calamity. But three teams of two volunteers each were stationed at sites just 2 km from the pad. They were charged with observing the first seconds of flight and, if they saw a catastrophic failure (engine explosion or cut-off, hard-over of an engine gimbal, or the rocket veering into the umbilical tower), they would signal the astronauts to fire the launch escape system and abort the mission. If this happened, the observers would then have to dive into crude shelters, often frequented by rattlesnakes, to ride out the fiery aftermath. Did you know about the electrical glitch which almost brought the Skylab 2 mission to flaming catastrophe moments after launch?
How lapses in handling of equipment and paperwork almost spelled doom for the crew of Apollo 13? The time an oxygen leak while fuelling a Saturn V booster caused cars parked near the launch pad to burst into flames? It's all here, and much more. This is an essential book for those interested in the engineering details of the Apollo project and the management miracles which made its achievements possible.

- Regis, Ed. Monsters. New York: Basic Books, 2015. ISBN 978-0-465-06594-3.

- In 1863, as the American Civil War raged, Count Ferdinand von Zeppelin, an ambitious young cavalry officer from the German kingdom of Württemberg, arrived in America to observe the conflict and learn its lessons for modern warfare. He arranged an audience with President Lincoln, who authorised him to travel among the Union armies. Zeppelin spent a month with General Joseph Hooker's Army of the Potomac. Accustomed to German military organisation, he was unimpressed with what he saw and left to see the sights of the new continent. While visiting Minnesota, he ascended in a tethered balloon and saw the landscape laid out below him like a military topographical map. He immediately grasped the advantage of such an eye in the sky for military purposes. He was impressed.

Upon his return to Germany, Zeppelin pursued a military career, distinguishing himself in the 1870 war with France, although he was considered “a hothead”. It was this characteristic which brought his military career to an abrupt end in 1890. Chafing under what he perceived as stifling leadership by the Prussian officer corps, he wrote directly to the Kaiser to complain. This was a bad career move; the Kaiser “promoted” him into retirement. Adrift, looking for a new career, Zeppelin seized upon controlled aerial flight, particularly for its military applications. And he thought big.

By 1890, France was at the forefront of aviation. By 1885 the pioneering dirigible La France had demonstrated aerial navigation over complex closed courses and carried passengers. Built for the French army, it was just a technology demonstrator, but to Zeppelin it revealed a capability with such potential that Germany must not be left behind. He threw his energy into the effort, formed a company, raised the money, and embarked upon the construction of Luftschiff Zeppelin 1 (LZ 1).

Count Zeppelin was not a man to make small plans. Eschewing sub-scale demonstrators or technology-proving prototypes, he went directly to a full-scale airship intended to be militarily useful. It was fully 128 metres long, almost two and a half times the length of La France and longer than a football field. Its rigid aluminium frame contained 17 gas bags filled with hydrogen, and it was powered by two gasoline engines. LZ 1 flew just three times. An observer from the German War Ministry reported it to be “suitable for neither military nor for non-military purposes.” Zeppelin's company closed its doors and the airship was sold for scrap.

By 1905, Zeppelin was ready to try again. On its first flight, the LZ 2 lost power and control and had to make a forced landing. Tethered to the ground at the landing site, it was caught by the wind and destroyed. It was sold for scrap. Later the LZ 3 flew successfully, and Zeppelin embarked upon construction of the LZ 4, which would be larger still. While attempting a twenty-four-hour endurance flight, it suffered motor failure, landed, and, while tied down, was caught by the wind. Its gas bags rubbed against one another and static electricity ignited the hydrogen, which reduced the airship to smoking wreckage.

Many people would have given up at this point, but not the redoubtable Count. The LZ 5, delivered to the military, was lost when carried away by the wind after an emergency landing and dashed against a hill. LZ 6 burned in its hangar after an engine caught fire. LZ 7, the first civilian passenger airship, crashed into a forest on its first flight and was damaged beyond repair. LZ 8, its replacement, was destroyed by a gust of wind while being walked out of its hangar.

With the outbreak of war in 1914, the airship went into combat. Germany operated 117 airships, using them for reconnaissance and even bombing targets in England. Of the 117, fully 81 were destroyed, about half by enemy action and half by the woes which had wrecked so many airships prior to the conflict.

Based upon this stunning record of success, after the end of the Great War, Britain decided to embark in earnest on its own airship program, building even larger airships than Germany. Results were no better, culminating in the R100 and R101, built to provide passenger and cargo service on routes throughout the Empire. On its maiden flight to India in 1930, R101 crashed and burned in a storm while crossing France, killing 48 of the 54 on board. After the catastrophe, the R100 was retired and sold for scrap.

This did not deter the Americans, who, in addition to their technical prowess and “can do” spirit, had access to helium, produced as a by-product of their natural gas fields. Unlike hydrogen, helium is nonflammable, so the risk of fire, which had destroyed so many airships using hydrogen, was entirely eliminated. Helium does not provide as much lift as hydrogen, but this can be compensated for by increasing the size of the ship.
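How much larger does a helium ship need to be? Lift per cubic metre is the density of the displaced air minus the density of the lifting gas, and the ideal gas law gives both. The sketch below works this out. The conditions are assumptions chosen for illustration, not figures from the book: 20 °C, standard sea-level pressure, pure gases, and an unloaded envelope.

```python
# Ideal-gas estimate of buoyant lift per cubic metre at sea level.
# Assumed conditions (not from the review): 20 °C, 101.325 kPa, pure gases.
R = 8.314462618      # molar gas constant, J/(mol K)
P = 101_325.0        # pressure, Pa
T = 293.15           # temperature, K

MOLAR_MASS = {       # kg/mol
    "air": 0.028964,
    "hydrogen": 0.002016,
    "helium": 0.004003,
}

def density(gas):
    """Density from the ideal gas law, rho = P*M/(R*T), in kg/m^3."""
    return P * MOLAR_MASS[gas] / (R * T)

def lift_per_m3(gas):
    """Net buoyant lift in kg per m^3 of gas: displaced air minus the gas itself."""
    return density("air") - density(gas)

h2, he = lift_per_m3("hydrogen"), lift_per_m3("helium")
print(f"hydrogen {h2:.3f} kg/m^3, helium {he:.3f} kg/m^3")
print(f"helium gives {he / h2:.1%} of hydrogen's lift; "
      f"about {h2 / he - 1:.0%} more gas volume needed")
```

Because both gases are much lighter than air, helium gives about 93% of hydrogen's lift. A helium ship therefore needs only about 8% more gas volume to lift the same load.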

Helium is also around fifty times more expensive than hydrogen, which makes managing an airship in flight more difficult. While the commander of a hydrogen airship can freely “valve” gas to reduce lift when required, doing this in a helium ship is prohibitively expensive and reserved for only the most dire of emergencies.

The U.S. Navy believed the airship to be an ideal platform for long-range reconnaissance, anti-submarine patrols, and other missions where its endurance, speed, and ability to operate far offshore provided advantages over ships and heavier-than-air craft. Between 1921 and 1935 the Navy operated five rigid airships, three built domestically and two abroad. Four of the five crashed in storms or due to structural failure, killing dozens of crew.

This sorry chronicle leads up to a detailed recounting of the history of the Hindenburg. Originally designed to use helium, it was redesigned for hydrogen after it became clear that the U.S., which had forbidden the export of helium in 1927, would not grant a waiver, especially to a Germany by then under Nazi rule. The Hindenburg was enormous: at 245 metres in length, it was longer than the U.S. Capitol building and more than three times the length of a Boeing 747. It carried between 50 and 72 passengers, who were served by a crew of 40 to 61, with accommodations (apart from the spartan sleeping quarters) comparable to first class on ocean liners. In 1936, the great ship made 17 transatlantic crossings without incident. On its first flight to the U.S. of the 1937 season, it was destroyed by fire while approaching the mooring mast at Lakehurst, New Jersey.

The disaster and its aftermath are described in detail. Remarkably, given the iconic images of the flaming airship falling to the ground and the structure glowing from the intense heat of combustion, of the 97 passengers and crew on board, 62 survived the disaster. (One member of the ground crew also died.)

Prior to the destruction of the Hindenburg, a total of twenty-six hydrogen-filled airships had been destroyed by fire, excluding those shot down in wartime, with a total of 250 people killed. The vast majority of all rigid airships built ended in disaster: if not due to fire, then due to structural failure, weather, or pilot error. Why did people continue to pursue this technology in the face of abundant evidence that it was fundamentally flawed?

The author argues that rigid airships are an example of a “pathological technology”, which he characterises as:

- Embracing something huge, either in size or effects.

- Inducing a state bordering on enthralment among its proponents…

- …who underplay its downsides, risks, unintended consequences, and obvious dangers.

- Having costs out of proportion to the benefits it is alleged to provide.

Still, for all of their considerable faults and stupidities—their huge costs, terrible risks, unintended negative consequences, and in some cases injuries and deaths—pathological technologies possess one crucial saving grace: they can be stopped. Or better yet, never begun.

Except, it seems, you can only recognise them in retrospect.

February 2016

- McCullough, David. The Wright Brothers. New York: Simon & Schuster, 2015. ISBN 978-1-4767-2874-2.

- On December 8th, 1903, all was in readiness. The aircraft was perched on its launching catapult, the brave airman at the controls. The powerful internal combustion engine roared to life. At 16:45 the catapult hurled the craft into the air. It rose straight up, flipped and, with its wings coming apart, plunged into the Potomac River just 20 feet from the launching point. The pilot was initially trapped beneath the wreckage but managed to free himself and swim to the surface. After being rescued from the river, he emitted what one witness described as “the most voluble series of blasphemies” he had ever heard. So ended the last flight of Samuel Langley's “Aerodrome”. Langley was a distinguished scientist and secretary of the Smithsonian Institution in Washington, D.C. Funded by the U.S. Army and the Smithsonian for a total of US$ 70,000 (equivalent to around 1.7 million present-day dollars), the Aerodrome crashed immediately on both of its test flights, and was the subject of much mockery in the press. Just nine days later, on December 17th, two brothers, sons of a churchman, with no education beyond high school, and proprietors of a bicycle shop in Dayton, Ohio, readied their own machine for flight near Kitty Hawk, on the windswept sandy hills of North Carolina's Outer Banks. Their craft, called just the Flyer, took to the air with Orville Wright at the controls. With the 12 horsepower engine driving the twin propellers and brother Wilbur running alongside to stabilise the machine as it moved down the launching rail into the wind, Orville lifted the machine into the air and achieved the first manned heavier-than-air powered flight, demonstrating that the Flyer was controllable in all three axes. The flight lasted just 12 seconds and covered a distance of 120 feet. After the first flight, the brothers took turns flying the machine three more times on the 17th.
On the final flight Wilbur flew a distance of 852 feet in a flight of 59 seconds (a strong headwind was blowing, and this flight was over half a mile through the air). After the fourth flight, while the machine was being prepared to fly again, a gust of wind caught it and dragged it, along with assistant John T. Daniels, down the beach toward the ocean. Daniels escaped, but the Flyer was damaged beyond repair and never flew again. (The Flyer that can be seen in the Smithsonian's National Air and Space Museum today has been extensively restored.) Orville sent a telegram to his father in Dayton announcing the success, and the brothers packed up the remains of the aircraft to be shipped back to their shop. The 1903 season was at an end. The entire budget for the project from 1900 through the successful first flights was less than US$ 1000 (24,000 dollars today), and was funded entirely by profits from the brothers' bicycle business. How did two brothers with no formal education in aerodynamics or engineering succeed on a shoestring budget while Langley, with public funds at his disposal and the resources of a major scientific institution, failed so embarrassingly? Ultimately it was because the Wright brothers identified the key problem of flight and patiently worked on solving it through a series of experiments. Perhaps it was because they were in the bicycle business. (Although they are often identified as proprietors of a “bicycle shop”, they also manufactured their own bicycles and had acquired the machine tools, skills, and co-workers for the business, later applied to building the flying machine.) The Wrights believed the essential problem of heavier-than-air flight was control. The details of how a bicycle is built don't matter much: you still have to learn to ride it. And the problem of control in free flight is much more difficult than riding a bicycle, where the only controls are the handlebars and, to a lesser extent, shifting the rider's weight.
In flight, an airplane must be controlled in three axes: pitch (up and down), yaw (left and right), and roll (wings' angle to the horizon). The means for control in each of these axes must be provided, and what's more, just as for a child learning to ride a bike, the would-be aeronaut must master the skill of using these controls to maintain his balance in the air. Through a patient program of subscale experimentation, first with kites controlled from the ground by lines manipulated by the operators, then with gliders flown by a pilot on board, the Wrights developed their system of pitch control by a front-mounted elevator, yaw by a rudder at the rear, and roll by warping the wings of the craft. Further, they needed to learn how to fly using these controls and verify that the resulting plane would be stable enough that a person could master the skill of flying it. With powerless kites and gliders, this required a strong, consistent wind. After inquiries to the U.S. Weather Bureau, the brothers selected the Kitty Hawk site on the North Carolina coast. Just getting there was an adventure, but the wind was as promised and the sand and lack of large vegetation were ideal for their gliding experiments. They were definitely “roughing it” at this remote site, and at times were afflicted by clouds of mosquitos of Biblical plague proportions, but starting in 1900 they tested a series of successively larger gliders and by 1902 had a design which provided three-axis control, stability, and the controls for a pilot on board. In the 1902 season they made more than 700 flights and were satisfied the control problem had been mastered. Now all that remained was to add an engine and propellers to the successful glider design, again scaling it up to accommodate the added weight.
In 1903, you couldn't just go down to the hardware store and buy an engine, and automobile engines were much too heavy, so the Wrights' resourceful mechanic, Charlie Taylor, designed and built the four-cylinder motor from scratch, using the new-fangled material aluminium for the engine block. The finished engine weighed just 152 pounds and produced 12 horsepower. The brothers could find no references for the design of air propellers and argued intensely over the topic, but eventually concluded they'd just have to make a best guess and test it on the real machine. The Flyer worked on the second attempt (an earlier try on December 14th ended in a minor crash when Wilbur over-controlled at the moment of take-off). But this stunning success was the product of years of incremental refinement of the design, practical testing, and mastery of airmanship through experience. Those four flights in December of 1903 are now considered one of the epochal events of the twentieth century, but at the time they received little notice. Only a few accounts of the flights appeared in the press, and some of them were garbled or sensationalised. The Wrights knew that the Flyer (whose wreckage was now in storage crates at Dayton), while a successful proof of concept and the basis for a patent filing, was not a practical flying machine. It could only take off into the strong wind at Kitty Hawk and had not yet demonstrated sustained controlled flight, including aerial maneuvers such as turns or flying around a closed course. It was just too difficult travelling to Kitty Hawk, and the facilities of their camp there didn't permit rapid modification of the machines based upon experimentation. They arranged to use an 84-acre cow pasture called Huffman Prairie, located eight miles from Dayton along an interurban trolley line which made it easy to reach. The field's owner let them use it without charge as long as they didn't disturb the livestock.
The Wrights devised a catapult to launch their planes, powered by a heavy falling weight, which would allow them to take off in still air. It was here, in 1904, that they refined the design into a practical flying machine and fully mastered the art of flying it over the course of about fifty test flights. Still, there was little note of their work in the press, and the first detailed account was published in the January 1905 edition of Gleanings in Bee Culture. Amos Root, the author of the article and publisher of the magazine, sent a copy to Scientific American, saying they could republish it without fee. The editors declined, and a year later mocked the achievements of the Wright brothers. For those accustomed to the pace of technological development more than a century later, the leisurely pace of progress in aviation and lack of public interest in the achievement of what had been a dream of humanity since antiquity seems odd. Indeed, the Wrights, who had continued to refine their designs, would not become celebrities nor would their achievements be widely acknowledged until a series of demonstrations Wilbur would perform at Le Mans in France in the summer of 1908. Le Figaro wrote, “It was not merely a success, but a triumph…a decisive victory for aviation, the news of which will revolutionize scientific circles throughout the world.” And it did: stories of Wilbur's exploits were picked up by the press on the Continent, in Britain, and, belatedly, by papers in the U.S. Huge crowds came out to see the flights, and the intrepid American aviator's name was on every tongue. Meanwhile, Orville was preparing for a series of demonstration flights for the U.S. Army at Fort Myer, Virginia. The army had agreed to buy a machine if it passed a series of tests. Orville's flights also began to draw large crowds from nearby Washington and extensive press coverage. All doubts about what the Wrights had wrought were now gone. 
During a demonstration flight on September 17, 1908, a propeller broke in flight. Orville tried to recover, but the machine plunged to the ground from an altitude of 75 feet, severely injuring him and killing his passenger, Lieutenant Thomas Selfridge, who became the first person to die in an airplane crash. Orville's recuperation would be long and difficult, aided by his sister, Katharine. In early 1909, Orville and Katharine would join Wilbur in France, where he was to do even more spectacular demonstrations in the south of the country, training pilots for the airplanes he was selling to the French. Upon their return to the U.S., the Wrights were awarded medals by President Taft at the White House. They were feted as returning heroes in a two day celebration in Dayton. The diligent Wrights continued their work in the shop between events. The brothers would return to Fort Myer, the scene of the crash, and complete their demonstrations for the army, securing the contract for the sale of an airplane for US$ 30,000. The Wrights would continue to develop their company, defend their growing portfolio of patents against competitors, and innovate. Wilbur was to die of typhoid fever in 1912, aged only 45 years. Orville sold his interest in the Wright Company in 1915 and, in his retirement, served for 28 years on the National Advisory Committee for Aeronautics, the precursor of NASA. He died in 1948. Neither brother ever married. This book is a superb evocation of the life and times of the Wrights and their part in creating, developing, promoting, and commercialising one of the key technologies of the modern world.

- Carlson, W. Bernard. Tesla: Inventor of the Electrical Age. Princeton: Princeton University Press, 2013. ISBN 978-0-691-16561-5.

- Nikola Tesla was born in 1856 in a village in what is now Croatia, then part of the Austrian Empire. His father and grandfather were both priests in the Orthodox church. The family was of Serbian descent, but had lived in Croatia since the 1690s among a community of other Serbs. His parents wanted him to enter the priesthood and enrolled him in school to that end. He excelled in mathematics and, building on a boyhood fascination with machines and tinkering, wanted to pursue a career in engineering. After completing high school, Tesla returned to his village, where he contracted cholera and was near death. His father promised him that if he survived, he would “go to the best technical institution in the world.” After nine months of illness, Tesla recovered and, in 1875, entered the Joanneum Polytechnic School in Graz, Austria.

Tesla's university career started out brilliantly, but he came into conflict with one of his physics professors over the feasibility of designing a motor which would operate without the troublesome and unreliable commutator and brushes of existing motors. He became addicted to gambling, lost his scholarship, and dropped out in his third year. He worked as a draftsman, taught in his old high school, and eventually ended up in Prague, intending to continue his study of engineering at the Karl-Ferdinand University. He took a variety of courses, but eventually his uncles withdrew their financial support.

Tesla then moved to Budapest, where he found employment as chief electrician at the Budapest Telephone Exchange. He quickly distinguished himself as a problem solver and innovator and, before long, came to the attention of the Continental Edison Company of France, which had designed the equipment used in Budapest. He was offered and accepted a job at their headquarters in Ivry, France. Most of Edison's employees had practical, hands-on experience with electrical equipment, but lacked Tesla's formal education in mathematics and physics. Before long, Tesla was designing dynamos for lighting plants and earning a handsome salary. With his language skills (by that time, Tesla was fluent in Serbian, German, and French, and was improving his English), the Edison company sent him into the field as a trouble-shooter. This further increased his reputation and, in 1884, he was offered a job at Edison headquarters in New York. Years later, he described the formalities of entering the U.S. as an immigrant: a clerk saying “Kiss the Bible. Twenty cents!”

Tesla had never abandoned the idea of a brushless motor. Almost all electric lighting systems in the 1880s used direct current (DC): electrons flowed in only one direction through the distribution wires. This is the kind of current produced by batteries, and the first electrical generators (dynamos) produced direct current by means of a device called a commutator. As the generator is turned by its power source (for example, a steam engine or water wheel), power is extracted from the rotating commutator by fixed brushes which press against it. The contacts on the commutator are wired to the coils in the generator in such a way that a constant direct current is maintained. When direct current is used to drive a motor, the motor must also contain a commutator which converts the direct current into a reversing flow to maintain the motor in rotary motion.

Commutators, with brushes rubbing against them, are inefficient and unreliable. Brushes wear and must eventually be replaced, and as the commutator rotates and the brushes make and break contact, sparks may be produced which waste energy and degrade the contacts. Further, direct current has a major disadvantage for long-distance power transmission. There was, at the time, no way to efficiently change the voltage of direct current. This meant that the circuit from the generator to the user of the power had to run at the same voltage the user received, say 120 volts. But at such a voltage, resistance losses in copper wires are such that over long runs most of the energy would be lost in the wires, not delivered to customers. You can increase the size of the distribution wires to reduce losses, but before long this becomes impractical due to the cost of the copper required. As a consequence, Edison electric lighting systems installed in the 19th century had many small powerhouses, each supplying a local set of customers.

Alternating current (AC) solves the problem of power distribution. The electrical transformer had been invented in 1881, and by 1884 high-efficiency transformers were being manufactured in Europe. A transformer, which works only with alternating current, efficiently converts power from one combination of voltage and current to another. For example, power might be transmitted from the generating station to the customer at 12000 volts and 1 ampere, then stepped down to 120 volts and 100 amperes by a transformer at the customer location. Resistive losses in a wire depend on the current it carries, not on the voltage: for a given wire they are proportional to the square of the current, so a hundredth of the current means a ten-thousandth of the loss. For a given level of transmission loss, the cables to distribute power at 12000 volts therefore need only about a ten-thousandth as much copper as if 120 volts were used. For electric lighting, alternating current works just as well as direct current (as long as the frequency of the alternating current is sufficiently high that lamps do not flicker). But electricity was increasingly used to power motors, replacing steam power in factories. All existing practical motors ran on DC, so this was seen as an advantage of Edison's system.
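The transmission arithmetic can be checked with a short Python sketch. It assumes a simplified model in which the loss is I²R, the line voltage is treated as fixed, and the voltage drop along the wire is ignored; the 0.5 ohm line resistance is a hypothetical value chosen for illustration, not a figure from the book.

```python
def line_loss_watts(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Resistive (I^2 R) loss in a line delivering power_w at voltage_v.

    Simplified model: the line voltage is treated as fixed and the
    voltage drop along the wire is ignored.
    """
    current_a = power_w / voltage_v          # I = P / V
    return current_a ** 2 * resistance_ohm   # loss = I^2 * R


def relative_copper(v_low: float, v_high: float) -> float:
    """Copper needed at v_high, as a fraction of that needed at v_low,
    for the same delivered power, the same loss, and the same line length.

    Allowed resistance scales as V^2 (from loss = (P/V)^2 * R), and for a
    wire of fixed length R is inversely proportional to its cross-section,
    so the copper volume scales as 1 / V^2.
    """
    return (v_low / v_high) ** 2


P = 12_000.0  # watts: 12000 V x 1 A, or 120 V x 100 A, as in the text
R = 0.5       # hypothetical line resistance in ohms, for illustration only

loss_120 = line_loss_watts(P, 120.0, R)     # 100 A -> 5000.0 W lost
loss_12k = line_loss_watts(P, 12_000.0, R)  # 1 A   -> 0.5 W lost

print(f"loss at 120 V:   {loss_120:.1f} W")
print(f"loss at 12000 V: {loss_12k:.1f} W")
print(f"loss ratio:      {loss_120 / loss_12k:.0f}")
print(f"copper at 12000 V relative to 120 V: {relative_copper(120.0, 12_000.0):.6f}")
```

For the same wire, raising the voltage a hundredfold cuts the loss by a factor of 10,000, which is why, for equal loss, the copper needed falls by the same factor.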

Tesla worked only six months for Edison. After developing an arc lighting system, only to see Edison shelve it after acquiring the rights to a system developed by another company, he quit in disgust. He then continued to work on an arc light system in New Jersey, but the company to which he had licensed his patents failed, leaving him with only a worthless stock certificate. To support himself, Tesla repaired electrical equipment and even dug ditches; one of his foremen introduced him to Alfred S. Brown, who had made his career in telegraphy. Tesla showed Brown one of his patents, for a “thermomagnetic motor”, and Brown contacted Charles F. Peck, a lawyer who had made his fortune in telegraphy. Together, Peck and Brown saw the potential for the motor and other Tesla inventions and, in April 1887, founded the Tesla Electric Company, with its laboratory in Manhattan's financial district.

Tesla immediately set out to make his dream of a brushless AC motor a practical reality. By using multiple alternating currents, out of phase with one another (the polyphase system), he was able to create a magnetic field which itself rotated. The rotating magnetic field induced a current in the rotating part of the motor, which would start and turn without any need for a commutator or brushes. Tesla had invented what we now call the induction motor.
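To see why phase-shifted currents produce a rotating field, here is a minimal Python sketch assuming an idealised three-phase stator: three identical coils with axes 120 degrees apart, each carrying a unit-amplitude current 120 degrees out of phase with the next, and each contributing a field proportional to its current along its own axis. Three phases, the 60 Hz frequency, and the arbitrary field units are assumptions chosen for concreteness; the same principle works with other suitably arranged numbers of phases.

```python
import math


def stator_field(t: float, freq_hz: float = 60.0) -> tuple[float, float]:
    """Net magnetic field (arbitrary units) of an idealised three-phase stator.

    Coil k lies along direction theta_k = k * 120 degrees and carries the
    current cos(omega * t - theta_k); each coil contributes a field along its
    own axis proportional to its current.
    """
    omega = 2 * math.pi * freq_hz
    bx = by = 0.0
    for k in range(3):
        theta = 2 * math.pi * k / 3            # axis of coil k
        current = math.cos(omega * t - theta)  # phase-shifted current in coil k
        bx += current * math.cos(theta)
        by += current * math.sin(theta)
    return bx, by


# Sample the field every eighth of a cycle at 60 Hz.
for step in range(8):
    t = step / (60.0 * 8)
    bx, by = stator_field(t)
    magnitude = math.hypot(bx, by)
    angle_deg = math.degrees(math.atan2(by, bx))
    print(f"t = {t * 1000:6.3f} ms   |B| = {magnitude:.3f}   direction = {angle_deg:8.2f} deg")
```

The printed magnitude stays at 1.5 (one and a half times the peak field of a single coil) while the direction advances 45 degrees per step, one full turn per cycle of the supply (atan2 reports directions past 180 degrees as negative angles). A rotor placed in this field has currents induced in it and is dragged around with no commutator or brushes.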

He began to file patent applications for the motor and the polyphase AC transmission system in the fall of 1887, and by May of the following year had been granted a total of seven patents on various aspects of the motor and polyphase current.

One disadvantage of the polyphase system and motor was that they required multiple pairs of wires to transmit power from the generator to the motor, which increased cost and complexity. Also, existing AC lighting systems, which were beginning to come into use, primarily in Europe, used a single phase and two wires. Tesla invented the split-phase motor, which would run on a two-wire, single-phase circuit, and this was quickly patented.

Unlike Edison, who had built an industrial empire based upon his inventions, Tesla, Peck, and Brown had no interest in founding a company to manufacture Tesla's motors. Instead, they intended to shop around and license the patents to an existing enterprise with the resources required to exploit them. George Westinghouse had developed his inventions of air brakes and signalling systems for railways into a successful and growing company, and was beginning to compete with Edison in the electric light industry, installing AC systems. Westinghouse was a prime prospect to license the patents, and in July 1888 a deal was concluded for cash, notes, and a royalty for each horsepower of motors sold.

Tesla moved to Pittsburgh, where he spent a year working in the Westinghouse research lab improving the motor designs. While there, he filed an additional fifteen patent applications.

After leaving Westinghouse, Tesla took a trip to Europe, where he became fascinated with Heinrich Hertz's discovery of electromagnetic waves. Hertz's waves were produced by alternating current at frequencies much higher than those used in electrical power systems (Hertz used a spark gap to produce them), and here was a demonstration of transmission of electricity through thin air, with no wires at all. This idea was to inspire much of Tesla's work for the rest of his life.

By 1891, he had invented a resonant high-frequency transformer which we now call a Tesla coil, and before long was performing spectacular demonstrations of artificial lightning, illuminating lamps at a distance without wires, and showing new kinds of electric lights far more efficient than Edison's incandescent bulbs. Tesla's reputation as an inventor was equalled by his talent as a showman in presentations before scientific societies and the public in both the U.S. and Europe.

Oddly, for someone with Tesla's academic and practical background, there is no evidence that he mastered Maxwell's theory of electromagnetism. He believed that the phenomena he observed with the Tesla coil and other apparatus were due not to the Hertzian waves predicted by Maxwell's equations, but rather to something he called “electrostatic thrusts”. He was later to build a great edifice of mistaken theory on this crackpot idea.

By 1892, plans were progressing to harness the hydroelectric power of Niagara Falls. Transmission of this power to customers was central to the project: around one fifth of the American population lived within 400 miles of the falls. Westinghouse bid Tesla's polyphase system and, with Tesla's help in persuading the committee charged with evaluating proposals, was awarded the contract in 1893. By November of 1896, power from Niagara reached Buffalo, twenty miles away, and over the next decade it extended throughout New York. The success of the project made polyphase power transmission the technology of choice for most electrical distribution systems, and it remains so to this day.

In 1895, the New York Times wrote:

Even now, the world is more apt to think of him as a producer of weird experimental effects than as a practical and useful inventor. Not so the scientific public or the business men. By the latter classes Tesla is properly appreciated, honored, perhaps even envied. For he has given to the world a complete solution of the problem which has taxed the brains and occupied the time of the greatest electro-scientists for the last two decades—namely, the successful adaptation of electrical power transmitted over long distances.

After the Niagara project, Tesla continued to invent, demonstrate his work, and obtain patents. With the support of patrons such as John Jacob Astor and J. P. Morgan, he pursued his work on wireless transmission of power at laboratories in Colorado Springs and Wardenclyffe on Long Island. He continued to be featured in the popular press, amplifying his public image as an eccentric genius and mad scientist. Tesla lived until 1943, dying at the age of 86 of a heart attack. Over his life, he obtained around 300 patents for devices as varied as a new form of turbine, a radio-controlled boat, and a vertical takeoff and landing airplane. He speculated about wireless worldwide distribution of news to personal mobile devices and directed-energy weapons to defeat the threat of bombers. While in Colorado, he believed he had detected signals from extraterrestrial beings. In his experiments with high voltage, he accidentally detected X-rays before Röntgen announced their discovery, but he didn't understand what he had observed. None of these inventions had any practical consequences. The centrepiece of Tesla's post-Niagara work, the wireless transmission of power, was based upon a flawed theory of how electricity interacts with the Earth. Tesla believed that the Earth was filled with electricity and that if he pumped electricity into it at one point, a resonant receiver anywhere else on the Earth could extract it, just as if you pump air into a soccer ball, it can be drained out by a tap elsewhere on the ball. This is, of course, complete nonsense, as his contemporaries working in the field knew, and said, at the time. While Tesla continued to garner popular press coverage for his increasingly bizarre theories, he was ignored by those who understood they could never work.
Undeterred, Tesla proceeded to build an enormous prototype of his transmitter at Wardenclyffe, intended to span the Atlantic, without ever, for example, constructing a smaller-scale facility to verify his theories over a distance of, say, ten miles. Tesla's inventions of polyphase current distribution and the induction motor were central to the electrification of nations and continue to be used today. His subsequent work was increasingly unmoored from the growing theoretical understanding of electromagnetism, and many of his ideas could not have worked. The turbine worked, but was uncompetitive given the fabrication techniques and materials of the time. The radio-controlled boat was clever, but was far from the magic bullet to defeat the threat of the battleship he claimed it to be. The particle beam weapon (death ray) was a fantasy. In recent decades, Tesla has become a magnet for Internet-connected crackpots, who have woven elaborate fantasies around his work. Finally, in this book, written by a historian of engineering and based upon original sources, we have an authoritative and unbiased look at Tesla's life, his inventions, and their impact upon society. You will understand not only what Tesla invented, but why, and how the inventions worked. The flaky aspects of his life are here as well, but never mocked; inventors have to think ahead of accepted knowledge, and inevitably they will sometimes get things wrong.

March 2016

- Flint, Eric. 1632. Riverdale, NY: Baen Publishing, 2000. ISBN 978-0-671-31972-4.

- Nobody knows how it happened, nor remotely why. Was it a bizarre physics phenomenon, an act of God, intervention by aliens, or “just one of those things”? One day, with a flash and a bang which came to be called the Ring of Fire, the town of Grantville, West Virginia, and its environs in the present day was interchanged with an equally large area of Thuringia, in what is now Germany, in the year 1632. The residents of Grantville discover a sharp boundary where the town they know so well comes to an end and the new landscape begins. What's more, they rapidly discover they aren't in West Virginia any more, encountering brutal and hostile troops ravaging the surrounding countryside. After rescuing two travellers and others under attack by the soldiers, and using their superior firepower to bring hostilities to a close, they begin to piece together what has happened. They are not only in central Europe, but square in the middle of the Thirty Years' War: the conflict between Catholic and Protestant forces which engulfed much of the continent. Being Americans, and especially being self-sufficient West Virginians, the residents of Grantville take stock and start planning to make the most of the situation they've been dealt. They can count themselves lucky that the power plant was included within the Ring of Fire, so the electricity will stay on as long as there is fuel to run it. There are local coal mines and people with the knowledge to work them. The school and its library were within the circle, so there is access to knowledge of history and technology, as well as the school's shop and several machine shops in town. Being a rural community, the town has experienced farmers, and the land in Thuringia is not so different from West Virginia, although the climate is somewhat harsher. Supplies of fuel for transportation are limited to stocks on hand and in the tanks of vehicles, with no immediate prospect of obtaining more.
There are plenty of guns and lots of ammunition, but even with the reloading skills of those in the town, eventually the supply of primers and smokeless powder will be exhausted. Not only does the town find itself in the middle of battles between armies, but those battles have also created a multitude of refugees who press in on the town. Should Grantville put up a wall and hunker down, or welcome them, begin to assimilate them as new Americans, and put them to work to build a better society based upon the principles which kept religious wars out of the New World? And how can a small town, whatever its technological advantages and principles, deal with contending forces thousands of times larger? Form an alliance? But with whom, and on what terms? And which principles must be open to compromise and which must be inviolate? This is a thoroughly delightful story which will leave you with admiration for the ways of rural America, echoing those of the ancestors who built a free society in a wilderness. Along with the fictional characters, we encounter key historical figures of the era, who are depicted accurately. There are a number of coincidences which make things work (for example, Grantville having a power plant, and encountering English-speaking Scottish troops in the army of the King of Sweden), but without those coincidences the story would fall apart. The thought which recurred as I read the novel is what would have happened if an effete present-day American university town had been plopped down in the midst of the Thirty Years' War instead of Grantville. I'd give it forty-eight hours at most. This novel is the first in what has become a large and expanding Ring of Fire universe, including novels by the author and other writers set all over Europe and around the world, short stories, periodicals, and a role-playing game. If you loved this story, as I did, there's much more to explore. This book is a part of the Baen Free Library.
You can read the book online or download it in a wide variety of electronic book formats, all free of digital rights management, directly from the book's page at the Baen site. The Kindle edition may also be downloaded for free from Amazon.

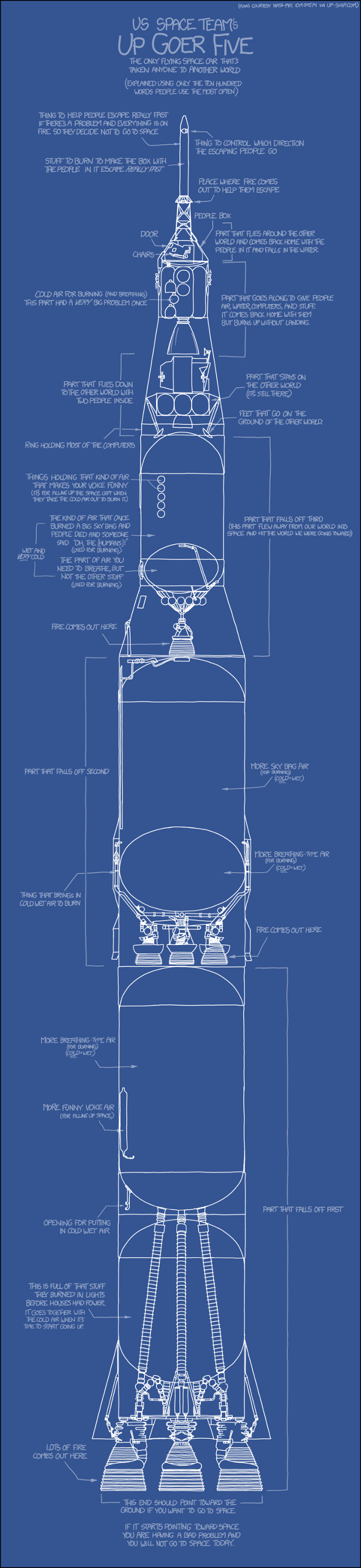

- Munroe, Randall. Thing Explainer. New York: Houghton Mifflin, 2015. ISBN 978-0-544-66825-6.

- What a great idea! The person who wrote this book explains not simple things like red world sky cars, tiny water bags we are made of, and the shared space house, with only the ten hundred words people use most.

There are many pictures with words explaining each thing. The idea came from the Up Goer Five picture he drew earlier.

Drawing by Randall Munroe / xkcd used under right to share but not to sell (CC BY-NC 2.5).

Many other things are explained here. You will learn about things in the house like food-heating radio boxes and boxes that clean food holders; living things like trees, bags of stuff inside you, and the tree of life; the Sun, Earth, sky, and other worlds; and even machines for burning cities and boats that go under the seas to throw them at other people. This is not just a great use of words, but something you can learn much from. There is art in explaining things in the most used ten hundred words, and this book is a fine work of that art. Read this book, then try explaining such things yourself. You can use this write checker to see how you did. Can you explain why time slows down when you go fast? Or why things jump around when you look at them very close-up? This book will make you want to try it. Enjoy! The same writer also created What If? (2015-11) Here, I have only written with the same ten hundred most used words as in the book.

(The words in the above picture are drawn. In the book they are set in sharp letters.)

April 2016

- Jenne, Mike. Blue Gemini. New York: Yucca Publishing, 2015. ISBN 978-1-63158-047-5.

- It is the late 1960s, and the Apollo project is racing toward the Moon. The U.S. Air Force has not abandoned its manned space flight ambitions, and is proceeding with its Manned Orbiting Laboratory program, nominally to explore the missions military astronauts can perform in an orbiting space station, but in reality a large manned reconnaissance satellite. Behind the curtain of secrecy and under the cover of the blandly named “Aerospace Support Project”, the Air Force is simultaneously proceeding with a much more provocative project: Blue Gemini. Blue Gemini would use the Titan II booster and a modified version of the two-man spacecraft from NASA's recently concluded Gemini program. Its mission was to launch on short notice, rendezvous with and inspect uncooperative targets (think Soviet military satellites), and optionally attach a package to them which, on command from the ground, could destroy the satellite, de-orbit it, or throw it out of control. All of this would have to be done covertly, without alerting the Soviets to the intrusion. Inconclusive evidence and fears that the Soviets, in response to the U.S. ballistic missile submarine capability, were preparing to place nuclear weapons in orbit, ready to rain down onto the U.S. upon command even if the Soviet missile and bomber forces were destroyed, gave Blue Gemini a high priority. With the project operating out of Wright-Patterson Air Force Base in Ohio, flight hardware for the Gemini-I interceptor spacecraft, Titan II missiles modified for man-rating, and a launching site on Johnston Island in the Pacific were all being prepared, and three flight crews were in training. Scott Ourecky had always dreamed of flying. In college, he enrolled in Air Force ROTC, underwent primary flight training, and joined the Air Force upon graduation. 
Once in uniform, his talent for engineering and mathematics caused him to advance, but his applications for flight training were repeatedly rejected, and he had resigned himself to a technical career in advanced weapon development, most recently at Eglin Air Force Base in Florida. There he is recruited to work part-time on the thorny technical problems of a hush-hush project: Blue Gemini. Ourecky settles in and undertakes the formidable challenges faced by the mission. (NASA's Gemini rendezvous targets were cooperative: they had transponders and flashing beacons which made them easier to locate, and missions could be planned so that rendezvous would be accomplished when communications with ground controllers would be available. In Blue Gemini the crew would be largely on their own, with only brief communication passes available.) Finally, after an incident brought on by the pressure and grueling pace of training, he finds himself in the right seat of the simulator, paired with hot-shot pilot Drew Carson (who views non-pilots as lesser beings, and would rather be in Vietnam adding combat missions to his service record than sitting in a simulator in Ohio on a black program which will probably never be disclosed). As the story progresses, crisis after crisis must be dealt with, all against a deadline which, if not met, will mean the almost-certain cancellation of the project. This is fiction: no Gemini interceptor program ever existed (although one of the missions for which the Space Shuttle was designed was essentially the same: a one-orbit inspection or snatch-and-return of a hostile satellite). But the remarkable thing about this novel is that, unlike many thrillers, the author gets just about everything right. 
This extends not only to the technical details of the Gemini and Titan hardware, but also to Pentagon politics, inter-service rivalry, the interaction of military projects with political forces, and the dynamics of the relations between pilots, engineers, and project administrators. It works as a thriller, as a story with characters who develop in interesting ways, and there are no jarring goofs to distract you from the narrative. (Well, hardly any: the turbine engines of a C-130 do not “cough to life”.) There are numerous subplots and characters involved in them, and when the book comes to an end, they're just left hanging in mid-air. That's because this is the first of a multi-volume work in progress. The second novel, Blue Darker than Black, picks up where the first ends. The third, Pale Blue, is scheduled to be published in August 2016.

- Goldsmith, Barbara. Obsessive Genius. New York: W. W. Norton, 2005. ISBN 978-0-393-32748-9.