
Saturday, May 28, 2016

Reading List: The Cosmic Web

- Gott, J. Richard. The Cosmic Web. Princeton: Princeton University Press, 2016. ISBN 978-0-691-15726-9.

- Some works of popular science, trying to impress the reader with the scale of the universe and the insignificance of humans on the cosmic scale, argue that there's nothing special about our place in the universe: “an ordinary planet orbiting an ordinary star, in a typical orbit within an ordinary galaxy”, or something like that. But this is wrong! Surfaces of planets make up a vanishingly small fraction of the volume of the universe, and habitable planets, where beings like ourselves are neither frozen nor fried by extremes of temperature, nor suffocated or poisoned by a toxic atmosphere, are rarer still. The Sun is far from an ordinary star: it is brighter than 85% of the stars in the galaxy, and only 7.8% of stars in the Milky Way share its spectral class. Fully 76% of stars are dim red dwarves, the heavens' own 25 watt bulbs. What does a typical place in the universe look like? What would you see if you were there? Well, first of all, you'd need a space suit and air supply, since the universe is mostly empty. And you'd see nothing. Most of the volume of the universe consists of great voids with few galaxies. If you were at a typical place in the universe, you'd be in one of these voids, probably far enough from the nearest galaxy that it wouldn't be visible to the unaided eye. There would be no stars in the sky, since stars are only formed within galaxies. There would only be darkness. Now look out the window: you are in a pretty special place after all. One of the great intellectual adventures of the last century is learning our place in the universe and coming to understand its large scale structure. This book, by an astrophysicist who has played an important role in discovering that structure, explains how we pieced together the evidence and came to learn the details of the universe we inhabit. It provides an insider's look at how astronomers tease insight out of the messy and often confusing data obtained from observation. 
It's remarkable not just how much we've learned, but how recently we've come to know it. At the start of the 20th century, most astronomers believed the solar system was part of a disc of stars which we see as the Milky Way. In 1610, Galileo's telescope revealed that the Milky Way was made up of a multitude of faint stars, and since the galaxy makes a band all around the sky, that the Sun must be within it. In 1918, by observing variable stars in globular clusters which orbit the Milky Way, Harlow Shapley was able to measure the size of the galaxy, which proved much larger than previously estimated, and determine that the Sun was about half way from the centre of the galaxy to its edge. Still, the universe was the galaxy. There remained the mystery of the “spiral nebulæ”. These faint smudges of light had been revealed by photographic time exposures through large telescopes to be discs, some with prominent spiral arms, viewed from different angles. Some astronomers believed them to be gas clouds within the galaxy, perhaps other solar systems in the process of formation, while others argued they were galaxies like the Milky Way, far distant in the universe. In 1920 a great debate pitted the two views against one another, concluding that insufficient evidence existed to decide the matter. That evidence would not be long in coming. Shortly thereafter, using the new 100 inch telescope on Mount Wilson in California, Edwin Hubble was able to photograph the Andromeda Nebula and resolve it into individual stars. Just as Galileo had done three centuries earlier for the Milky Way, Hubble's photographs proved Andromeda was not a gas cloud, but a galaxy composed of a multitude of stars. Further, Hubble was able to identify variable stars which allowed him to estimate its distance: due to details about the stars which were not understood at the time, he underestimated the distance by about a factor of two, but it was clear the galaxy was far beyond the Milky Way. 
The distances to other nearby galaxies were soon measured. In one leap, the scale of the universe had become breathtakingly larger. Instead of one galaxy comprising the universe, the Milky Way was just one of a multitude of galaxies scattered around an enormous void. When astronomers observed the spectra of these galaxies, they noticed something odd: spectral lines from stars in most galaxies were shifted toward the red end of the spectrum compared to those observed on Earth. This was interpreted as a Doppler shift due to the galaxy's moving away from the Milky Way. Between 1929 and 1931, Edwin Hubble measured the distances and redshifts of a number of galaxies and discovered there was a linear relationship between the two. A galaxy twice as distant as another would be receding at twice the velocity. The universe was expanding, and every galaxy (except those sufficiently close to be gravitationally bound) was receding from every other galaxy. The discovery of the redshift-distance relationship provided astronomers a way to chart the cosmos in three dimensions. Plotting the position of a galaxy on the sky and measuring its distance via redshift allowed building up a model of how galaxies were distributed in the universe. Were they randomly scattered, or would patterns emerge, suggesting larger-scale structure? Galaxies had been observed to cluster: the nearest cluster, in the constellation Virgo, is made up of at least 1300 galaxies, and is now known to be part of a larger supercluster of which the Milky Way is an outlying member. It was not until the 1970s and 1980s that large-scale redshift surveys allowed plotting the positions of galaxies in the universe, initially in thin slices, and eventually in three dimensions. What was seen was striking. Galaxies were not sprinkled at random through the universe, but seemed to form filaments and walls, with great voids containing few or no galaxies. How did this come to be?
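The linear redshift-distance relationship described above can be illustrated with a few lines of Python. This is only a sketch under stated assumptions: the value of the Hubble constant (70 km/s per megaparsec) is an illustrative round number not taken from the book, the function names are made up for this example, and the approximation v ≈ cz holds only for small redshifts.

```python
# Speed of light in km/s.
C_KM_S = 299_792.458
# ASSUMPTION: illustrative Hubble constant in km/s per megaparsec (not from the book).
H0_KM_S_PER_MPC = 70.0
# Approximate number of light years in one megaparsec.
LY_PER_MPC = 3.2616e6


def recession_velocity_km_s(z):
    """Low-redshift approximation v = c * z (only valid for z much less than 1)."""
    return C_KM_S * z


def distance_mpc(z, h0=H0_KM_S_PER_MPC):
    """Hubble's law, v = H0 * d, solved for the distance d in megaparsecs."""
    return recession_velocity_km_s(z) / h0


# A galaxy with twice the redshift is twice as distant under this linear law.
for z in (0.01, 0.02):
    d = distance_mpc(z)
    print(f"z = {z}: v = {recession_velocity_km_s(z):.0f} km/s, "
          f"d = {d:.1f} Mpc ({d * LY_PER_MPC / 1e6:.0f} million light years)")
```

Combining a distance computed this way with a galaxy's position on the sky gives the three coordinates needed to place it in a map of the kind the redshift surveys produced.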
In parallel with this patient observational work, theorists were working out the history of the early universe based upon increasingly precise observations of the cosmic microwave background radiation, which provides a glimpse of the universe just 380,000 years after the Big Bang. This ushered in the era of precision cosmology, where the age and scale of the universe were determined with great accuracy, and the tiny fluctuations in temperature of the early universe were mapped in detail. This led to a picture of the universe very different from that imagined by astronomers over the centuries. Ordinary matter: stars, planets, gas clouds, and you and me—everything we observe in the heavens and the Earth—makes up less than 5% of the mass-energy of the universe. Dark matter, which interacts with ordinary matter only through gravitation, makes up 26.8% of the universe. It can be detected through its gravitational effects on the motion of stars and galaxies, but at present we don't have any idea what it's composed of. (It would be more accurate to call it “transparent matter” since it does not interact with light, but “dark matter” is the name we're stuck with.) The balance of the universe, 68.3%, is dark energy, a form of energy filling empty space and causing the expansion of the universe to accelerate. We have no idea at all about the nature of dark energy. These three components: ordinary matter, dark matter, and dark energy add up to give the universe a flat geometry. It is humbling to contemplate the fact that everything we've learned in all of the sciences is about matter which makes up less than 5% of the universe: the other 95% is invisible and we don't know anything about it (although there are abundant guesses or, if you prefer, hypotheses). This may seem like a flight of fancy, or a case of theorists making up invisible things to explain away observations they can't otherwise interpret.
But in fact, dark matter and dark energy, originally inferred from astronomical observations, make predictions about the properties of the cosmic background radiation, and these predictions have been confirmed with increasingly high precision by successive space-based observations of the microwave sky. These observations are consistent with a period of cosmological inflation in which a tiny portion of the universe expanded to encompass the entire visible universe today. Inflation magnified tiny quantum fluctuations of the density of the universe to a scale where they could serve as seeds for the formation of structures in the present-day universe. Regions with greater than average density would begin to collapse inward due to the gravitational attraction of their contents, while those with less than average density would become voids as material within them fell into adjacent regions of higher density. Dark matter, being more than five times as abundant as ordinary matter, would take the lead in this process of gravitational collapse, and ordinary matter would follow, concentrating in denser regions and eventually forming stars and galaxies. The galaxies formed would associate into gravitationally bound clusters and eventually superclusters, forming structure at larger scales. But what does the universe look like at the largest scale? Are galaxies distributed at random; do they clump together like meatballs in a soup; or do voids occur within a sea of galaxies like the holes in Swiss cheese? The answer is, surprisingly, none of the above, and the author explains the research, in which he has been a key participant, that discovered the large scale structure of the universe. As increasingly more comprehensive redshift surveys of galaxies were made, what appeared was a network of filaments which connected to one another, forming extended structures. Between filaments were voids containing few galaxies. 
Some of these structures, such as the Sloan Great Wall, at 1.38 billion light years in length, are 1/10 the radius of the observable universe. Galaxies are found along filaments, and where filaments meet, rich clusters and superclusters of galaxies are observed. At this large scale, where galaxies are represented by single dots, the universe resembles a neural network like the human brain. As ever more extensive observations mapped the three-dimensional structure of the universe we inhabit, progress in computing allowed running increasingly detailed simulations of the evolution of structure in models of the universe. Although the implementation of these simulations is difficult and complicated, they are conceptually simple. You start with a region of space, populate it with particles representing ordinary and dark matter in a sea of dark energy with random positions and density variations corresponding to those observed in the cosmic background radiation, then let the simulation run, computing the gravitational attraction of each particle on the others and tracking their motion under the influence of gravity. In 2005, Volker Springel and the Virgo Consortium ran the Millennium Simulation, which started from the best estimate of the initial conditions of the universe known at the time and tracked the motion of ten billion particles of ordinary and dark matter in a cube two billion light years on a side. As the simulation clock ran, the matter contracted into filaments surrounding voids, with the filaments joined at nodes rich in galaxies. The images produced by the simulation and the statistics calculated were strikingly similar to those observed in the real universe. The behaviour of this and other simulations increases confidence in the existence of dark matter and dark energy; if you leave them out of the simulation, you get results which don't look anything like the universe we inhabit. 
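The conceptual recipe described above (scatter particles, compute the gravitational attraction of each on the others, and track their motion) can be sketched in a few dozen lines of Python. This is a toy illustration, not the method of the Millennium Simulation: it uses direct pairwise summation, arbitrary units, a softening length, and random starting positions, and it leaves out cosmic expansion, dark energy, and initial conditions drawn from the cosmic background radiation. All names and parameter values are assumptions made for this example.

```python
import math
import random

G = 1.0           # ASSUMPTION: gravitational constant in arbitrary units
SOFTENING = 0.05  # ASSUMPTION: softening length that avoids infinite forces at tiny separations


def accelerations(pos, mass):
    """Direct summation: the gravitational pull of every particle on every other."""
    n = len(pos)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d = [pos[j][k] - pos[i][k] for k in range(3)]
            r2 = d[0] ** 2 + d[1] ** 2 + d[2] ** 2 + SOFTENING ** 2
            inv_r3 = r2 ** -1.5
            for k in range(3):
                acc[i][k] += G * mass[j] * d[k] * inv_r3  # i is pulled toward j
                acc[j][k] -= G * mass[i] * d[k] * inv_r3  # j is pulled toward i
    return acc


def step(pos, vel, mass, dt):
    """Advance positions and velocities by one kick-drift-kick leapfrog step."""
    acc = accelerations(pos, mass)
    for i in range(len(pos)):
        for k in range(3):
            vel[i][k] += 0.5 * dt * acc[i][k]
            pos[i][k] += dt * vel[i][k]
    acc = accelerations(pos, mass)
    for i in range(len(pos)):
        for k in range(3):
            vel[i][k] += 0.5 * dt * acc[i][k]


def total_momentum(vel, mass):
    """Vector sum of mass times velocity; pairwise gravity should conserve it."""
    return [sum(m * v[k] for m, v in zip(mass, vel)) for k in range(3)]


def mean_radius(pos):
    """Mean distance from the centroid (the centre of mass when masses are equal)."""
    n = len(pos)
    centre = [sum(p[k] for p in pos) / n for k in range(3)]
    return sum(math.dist(p, centre) for p in pos) / n


def simulate(n=50, steps=100, dt=0.01, seed=1):
    """Start n equal-mass particles at rest at random positions and let gravity act."""
    rng = random.Random(seed)
    pos = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(n)]
    vel = [[0.0, 0.0, 0.0] for _ in range(n)]
    mass = [1.0 / n] * n
    for _ in range(steps):
        step(pos, vel, mass, dt)
    return pos, vel, mass


if __name__ == "__main__":
    pos, vel, mass = simulate()
    print("mean distance from centre after the run:", mean_radius(pos))
    print("total momentum (should stay near zero):", total_momentum(vel, mass))
```

Direct summation costs a number of operations proportional to the square of the particle count, which is one reason the implementation of a real ten-billion-particle run is, as noted above, difficult and complicated even though the idea is simple.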
At the largest scale, the universe isn't made of galaxies sprinkled at random, nor meatballs of galaxy clusters in a sea of voids, nor a sea of galaxies with Swiss-cheese-like voids. Instead, it resembles a sponge of denser filaments and knots interpenetrated by less dense voids. Both the denser and less dense regions percolate: it is possible to travel from one edge of the universe to another while staying entirely within the denser regions or, alternatively, entirely within the less dense ones. (If the universe were arranged like a honeycomb, for example, with voids surrounded by denser walls, this would not be possible.) Nobody imagined this before the observational results started coming in, and now we've discovered that given the initial conditions of the universe after the Big Bang, the emergence of such a structure is inevitable. All of the structure we observe in the universe has evolved from a remarkably uniform starting point in the 13.8 billion years since the Big Bang. What will the future hold? The final chapter explores various scenarios for the far future. Because these depend upon the properties of dark matter and dark energy, which we don't understand, they are necessarily speculative. The book is written for the general reader, but at a level substantially more difficult than many works of science popularisation. The author, a scientist involved in this research for decades, does not shy away from using equations when they illustrate an argument better than words. Readers are assumed to be comfortable with scientific notation, units like light years and parsecs, and logarithmically scaled charts. For some reason, in the Kindle edition dozens of hyphenated phrases are run together without any punctuation.

Wednesday, May 25, 2016

Reading List: The B-58 Blunder

- Holt, George, Jr. The B-58 Blunder. Randolph, VT: George Holt, 2015. ISBN 978-0-692-47881-3.

- The B-58 Hustler was a breakthrough aircraft. The first generation of U.S. Air Force jet-powered bombers—the B-47 medium and B-52 heavy bombers—were revolutionary for their time, but were becoming increasingly vulnerable to high-performance interceptor aircraft and anti-aircraft missiles on the deep penetration bombing missions within the communist bloc for which they were intended. In the 1950s, it was believed the best way to reduce the threat was to fly fast and at high altitude, with a small aircraft that would be more difficult to detect with radar. Preliminary studies of a next generation bomber began in 1949, and in 1952 Convair was selected to develop a prototype of what would become the B-58. Using a delta wing and four turbojet engines, the aircraft could cruise at up to twice the speed of sound (Mach 2, 2450 km/h) with a service ceiling of 19.3 km. With a small radar cross-section compared to the enormous B-52 (although still large compared to present-day stealth designs), the idea was that flying so fast and at high altitude, by the time an enemy radar site detected the B-58, it would be too late to scramble an interceptor to attack it. Contemporary anti-aircraft missiles lacked the capability to down targets at its altitude and speed. The first flight of a prototype was in November 1956, and after a protracted development and test program, plagued by problems due to its radical design, the bomber entered squadron service in March of 1960. Rising costs caused the number purchased to be scaled back to just 116 (by comparison, 2,032 B-47s and 744 B-52s were built), deployed in two Strategic Air Command (SAC) bomber wings. The B-58 was built to deliver nuclear bombs. Originally, it carried one B53 nine megaton weapon mounted below the fuselage. Subsequently, the ability to carry four B43 or B61 bombs on hardpoints beneath the wings was added. 
The B43 and B61 were variable yield weapons, with the B43 providing yields from 70 kilotons to 1 megaton and the B61 300 tons to 340 kilotons. The B-58 was not intended to carry conventional (non-nuclear, high explosive) bombs, and although some studies were done of conventional missions, its limited bomb load would have made it uncompetitive with other aircraft. Defensive weaponry was a single 20 mm radar-guided cannon in the tail. This was a last-ditch option: the B-58 was intended to outrun attackers, not fight them off. The crew of three consisted of a pilot, bombardier/navigator, and a defensive systems operator (responsible for electronic countermeasures [jamming] and the tail gun), each in their own cockpit with an ejection capsule. The navigation and bombing system included an inertial navigation platform with a star tracker for correction, a Doppler radar, and a search radar. The nuclear weapon pod beneath the fuselage could be replaced with a pod for photo reconnaissance. Other pods were considered, but never developed. The B-58 was not easy to fly. Its delta wing required high takeoff and landing speeds, and a steep angle of attack (nose-up attitude), but if the pilot allowed the nose to rise too high, the aircraft would pitch up and spin. Loss of an engine, particularly one of the outboard engines, was, as they say, a very dynamic event, requiring instant response to counter the resulting yaw. During its operational history, a total of 26 B-58s were lost in accidents: 22.4% of the fleet. During its ten years in service, no operational bomber equalled or surpassed the performance of the B-58. It set nineteen speed records, some of which still stand today, and won prestigious awards for its achievements. It was a breakthrough, but ultimately a dead end: no subsequent operational bomber has exceeded its performance in speed and altitude, but that's because speed and altitude were judged insufficient to accomplish the mission.
With the introduction of supersonic interceptors and high-performance anti-aircraft missiles by the Soviet Union, the B-58 was determined to be vulnerable in its original supersonic, high-altitude mission profile. Crews were retrained to fly penetration missions at near-supersonic speeds and very low altitude, making it difficult for enemy radar to acquire and track the bomber. Although it was not equipped with terrain-following radar like the B-52, an accurate radar altimeter allowed crews to perform these missions. The large, rigid delta wing made the B-58 relatively immune to turbulence at low altitudes. Still, abandoning the supersonic attack profile meant that many of the capabilities which made the B-58 so complicated and expensive to operate and maintain were wasted. This book is the story of the decision to retire the B-58, told by a crew member and Pentagon staffer who strongly dissented and argues that the B-58 should have remained in service much longer. George “Sonny” Holt, Jr. served for thirty-one years in the U.S. Air Force, retiring with the rank of colonel. For three years he was a bombardier/navigator on a B-58 crew and later, in the Plans Division at the Pentagon, observed the process which led to the retirement of the bomber close-up, doing his best to prevent it. He would disagree with many of the comments about the disadvantages of the aircraft mentioned in previous paragraphs, and addresses them in detail. In his view, the retirement of the B-58 in 1970, when it had been originally envisioned as remaining in the fleet until the mid-1970s, was part of a deal by SAC, which offered the retirement of all of the B-58s in return for retaining four B-52 wings which were slated for retirement. He argues that SAC never really wanted to operate the B-58, and that they did not understand its unique capabilities. 
With such a small fleet, it did not figure large in their view of the bomber force (although with its large nuclear weapon load, it actually represented about half the yield of the bomber leg of the strategic triad). He provides an insider's perspective on Pentagon politics, and how decisions are made at high levels, often without input from those actually operating the weapon systems. He disputes many of the claimed disadvantages of the B-58 and, in particular, argues that it performed superbly in the low-level penetration mission, something for which it was not designed. What is not discussed is the competition posed to manned bombers of all kinds in the nuclear mission by the Minuteman missile, which began to be deployed in 1962. By June 1965, 800 missiles were on alert, each with a 1.2 megaton W56 warhead. Solid-fueled missiles like the Minuteman require little maintenance and are ready to launch immediately at any time. Unlike bombers, where one worries about the development of interceptor aircraft and surface to air missiles, no defense against a mass missile attack existed or was expected to be developed in the foreseeable future. A missile in a silo required only a small crew of launch and maintenance personnel, as opposed to the bomber which had flight crews, mechanics, a spare parts logistics infrastructure, and had to be supported by refueling tankers with their own overhead. From the standpoint of cost-effectiveness, a word very much in use in the 1960s Pentagon, the missiles, which were already deployed, were dramatically better than any bomber, and especially the most expensive one in the inventory. The bomber generals in SAC were able to save the B-52, and were willing to sacrifice the B-58 in order to do so. The book is self-published by the author and is sorely in need of the attention of a copy editor. There are numerous spelling and grammatical errors, and nouns are capitalised in the middle of sentences for no apparent reason. 
There are abundant black and white illustrations from Air Force files.

Monday, May 23, 2016

Reading List: Arkwright

- Steele, Allen. Arkwright. New York: Tor, 2016. ISBN 978-0-7653-8215-3.

- Nathan Arkwright was one of the “Big Four” science fiction writers of the twentieth century, along with Isaac Asimov, Arthur C. Clarke, and Robert A. Heinlein. Launching his career in the Golden Age of science fiction, he created the Galaxy Patrol space adventures, with 17 novels from 1950 to 1988, a radio drama, television series, and three movies. The royalties from his work made him a wealthy man. He lived quietly in his home in rural Massachusetts, dying in 2006.

Arkwright was estranged from his daughter and granddaughter, Kate Morressy, a freelance science journalist. Kate attends the funeral and meets Nathan's long-term literary agent, Margaret (Maggie) Krough, science fiction writer Harry Skinner, and George Hallahan, a research scientist long involved with military and aerospace projects. After the funeral, the three meet with Kate, and Maggie explains that Arkwright's will bequeaths all of his assets including future royalties from his work to the non-profit Arkwright Foundation, which Kate is asked to join as a director representing the family. She asks what the mission of the foundation is, and Maggie responds by saying it's a long and complicated story which is best explained by her reading the manuscript of Arkwright's unfinished autobiography, My Life in the Future.

It is some time before Kate gets around to reading the manuscript. When she does, she finds herself immersed in the Golden Age of science fiction, as her grandfather recounts attending the first World's Science Fiction Convention in New York in 1939. An avid science fiction fan and aspiring writer, Arkwright rubs elbows with figures he'd known only as names in magazines such as Fred Pohl, Don Wollheim, Cyril Kornbluth, Forrest Ackerman, and Isaac Asimov. Quickly learning that at a science fiction convention it isn't just elbows that rub but also egos, he runs afoul of one of the clique wars that are incomprehensible to those outside of fandom and finds himself ejected from the convention, sitting down for a snack at the Automat across the street with fellow banished fans Maggie, Harry, and George. The four discuss their views of the state of science fiction and their ambitions, and pledge to stay in touch. Any group within fandom needs a proper name, and after a brief discussion “The Legion of Tomorrow” was born. It would endure for decades.

The manuscript comes to an end, leaving Kate still in 1939. She then meets in turn with the other three surviving members of the Legion, who carry the story through Arkwright's long life, and describe the events which shaped his view of the future and the foundation he created. Finally, Kate is ready to hear the mission of the foundation—to make the future Arkwright wrote about during his career a reality—to move humanity off the planet and enter the era of space colonisation, and not just the planets but, in time, the stars. And the foundation will be going it alone. As Harry explains (p. 104), “It won't be made public, and there won't be government involvement either. We don't want this to become another NASA project that gets scuttled because Congress can't get off its dead ass and give it decent funding.”

The strategy is to bet on the future: invest in the technologies which will be needed for and will profit from humanity's expansion from the home planet, and then reinvest the proceeds in research and development and new generations of technology and enterprises as space development proceeds. Nobody expects this to be a short-term endeavour: decades or generations may be required before the first interstellar craft is launched, but the structure of the foundation is designed to persist for however long it takes. Kate signs on, “Forward the Legion.”

So begins a grand, multi-generation saga chronicling humanity's leap to the stars. Unlike many tales of interstellar flight, no arm-waving about faster than light warp drives or other technologies requiring new physics is invoked. Based upon information presented at the DARPA/NASA 100 Year Starship Symposium in 2011 and the 2013 Starship Century conference, the author uses only technologies based upon well-understood physics which, if economic growth continues on the trajectory of the last century, are plausible for the time in the future at which the story takes place. And lest interstellar travel and colonisation be dismissed as wasteful, no public resources are spent on it: coercive governments have neither the imagination nor the attention span to achieve such grand and long-term goals. And you never know how important the technological spin-offs from such a project may prove in the future.

As noted, the author is scrupulous in using only technologies consistent with our understanding of physics and biology and plausible extrapolations of present capabilities. There are a few goofs, which I'll place behind the curtain since some are plot spoilers.

Spoiler warning: Plot and/or ending details follow.

On p. 61, a C-53 transport plane is called a Dakota. The C-53 is a troop transport variant of the C-47, referred to as the Skytrooper. But since the planes were externally almost identical, the observer may have confused them. “Dakota” was the RAF designation for the C-47; the U.S. Army Air Forces called it the Skytrain.

On the same page, planes arrive from “Kirtland Air Force Base in Texas”. At the time, the facility would have been called “Kirtland Field”, part of the Albuquerque Army Air Base, which is located in New Mexico, not Texas. It was not renamed Kirtland Air Force Base until 1947.

In the description of the launch of Apollo 17 on p. 71, after the long delay, the count is recycled to T−30 seconds. That isn't how it happened. After the cutoff in the original countdown at thirty seconds, the count was recycled to the T−22 minute mark, and after the problem was resolved, resumed from there. There would have been plenty of time for people who had given up and gone to bed to be awakened when the countdown was resumed and observe the launch.

On p. 214, we're told the Doppler effect of the ship's velocity “caused the stars around and in front of the Galactique to redshift”. In fact, the stars in front of the ship would be blueshifted, while those behind it would be redshifted.

On p. 230, the ship, en route, is struck by a particle of interstellar dust which is described as “not much larger than a piece of gravel”, which knocks out communications with the Earth. Let's assume it wasn't the size of a piece of gravel, but only that of a grain of sand, which is around 20 milligrams. The energy released in the collision with the grain of sand is 278 gigajoules, or 66 tons of TNT. The damage to the ship would have been catastrophic, not something readily repaired.

On the same page, “By the ship's internal chronometer, the repair job probably only took a few days, but time dilation made it seem much longer to observers back on Earth.” Nope—at half the speed of light, time dilation is only 15%. Three days' ship's time would be less than three and a half days on Earth.

On p. 265, “the DNA of its organic molecules was left-handed, which was crucial to the future habitability…”. What's important isn't the handedness of DNA, but rather the chirality of the organic molecules used in cells. The chirality of DNA is many levels above this fundamental property of biochemistry and, in fact, the DNA helix of terrestrial organisms is right-handed. (The chirality of DNA actually depends upon the nucleotide sequence, and there is a form, called Z-DNA, in which the helix is left-handed.)

Spoilers end here.

This is an inspiring and very human story, with realistic and flawed characters, venal politicians, unanticipated adversities, and a future very different than envisioned by many tales of the great human expansion, even those by the legendary Nathan Arkwright. It is an optimistic tale of the human future, grounded in the achievements of individuals who build it, step by step, in the unbounded vision of the Golden Age of science fiction. It is ours to make reality. Here is a podcast interview with the author by James Pethokoukis.
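The two relativistic figures quoted in the spoiler section (the energy of the dust-grain impact and the 15% time dilation) can be checked with a short calculation. The sketch below assumes, as the review's own numbers imply, a ship speed of half the speed of light and a 20 milligram grain. It uses the standard relativistic kinetic energy, (γ − 1)mc², and the conventional 4.184 gigajoules per ton of TNT; the function and variable names are made up for this example.

```python
import math

C = 299_792_458.0    # speed of light, m/s
TNT_TON_J = 4.184e9  # joules per ton of TNT (conventional definition)


def lorentz_factor(beta):
    """Gamma for a speed expressed as a fraction beta of the speed of light."""
    return 1.0 / math.sqrt(1.0 - beta * beta)


def kinetic_energy_j(mass_kg, beta):
    """Relativistic kinetic energy, (gamma - 1) * m * c^2, in joules."""
    return (lorentz_factor(beta) - 1.0) * mass_kg * C * C


beta = 0.5          # ship speed as a fraction of c, per the review
grain_kg = 20e-6    # 20 milligram grain of sand, per the review

gamma = lorentz_factor(beta)
energy = kinetic_energy_j(grain_kg, beta)
earth_days = 3.0 * gamma  # Earth-frame duration of three days of ship time

print(f"gamma            = {gamma:.4f}")
print(f"impact energy    = {energy / 1e9:.0f} GJ")
print(f"TNT equivalent   = {energy / TNT_TON_J:.0f} tons")
print(f"3 ship days      = {earth_days:.2f} Earth days")
```

With these inputs γ comes out near 1.15, which is where the 15% figure comes from, and the energy agrees with the 278 gigajoules and 66 tons of TNT given above.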

Saturday, May 21, 2016

Reading List: Cuckservative

- Red Eagle, John and Vox Day [Theodore Beale]. Cuckservative. Kouvola, Finland: Castalia House, 2015. ASIN B018ZHHA52.

- Yes, I have read it. So read me out of the polite genteel “conservative” movement. But then I am not a conservative. Further, I enjoyed it. The authors say things forthrightly that many people think and maybe express in confidence to their like-minded friends, but reflexively cringe upon even hearing in public. Even more damning, I found it enlightening on a number of topics, and I believe that anybody who reads it dispassionately is likely to find it the same. And finally, I am reviewing it. I have reviewed (or noted) every book I have read since January of 2001. Should I exclude this one because it makes some people uncomfortable? I exist to make people uncomfortable. And so, onward…. The authors have been called “racists”, which is rather odd since both are of Native American ancestry and Vox Day also has Mexican ancestors. Those who believe ancestry determines all will have to come to terms with the fact that these authors defend the values which largely English settlers brought to America, and were the foundation of American culture until it all began to come apart in the 1960s. In the view of the authors, as explained in chapter 4, the modern conservative movement in the U.S. dates from the 1950s. Before that time both the Democrat and Republican parties contained politicians and espoused policies which were both conservative and progressive (with the latter word used in the modern sense), often with regional differences. Starting with the progressive era early in the 20th century and dramatically accelerating during the New Deal, the consensus in both parties was centre-left liberalism (with “liberal” defined in the corrupt way it is used in the U.S.): a belief in a strong central government, social welfare programs, and active intervention in the economy. This view was largely shared by Democrat and Republican leaders, many of whom came from the same patrician class in the Northeast. 
At its outset, the new conservative movement, with intellectual leaders such as Russell Kirk and advocates like William F. Buckley, Jr., was outside the mainstream of both parties, but more closely aligned with the Republicans due to their wariness of big government. (But note that the Eisenhower administration made no attempt to roll back the New Deal, and thus effectively ratified it.) They argue that since the new conservative movement was a coalition of disparate groups such as libertarians, isolationists, southern agrarians, as well as ex-Trotskyites and former Communists, it was an uneasy alliance, and in forging it Buckley and others believed it was essential that the movement be seen as socially respectable. This led to a pattern of conservatives ostracising those who they feared might call down the scorn of the mainstream press upon them. In 1957, a devastating review of Atlas Shrugged by Whittaker Chambers marked the break with Ayn Rand's Objectivists, and in 1962 Buckley denounced the John Birch Society and read it out of the conservative movement. This established a pattern which continues to the present day: when an individual or group is seen as sufficiently radical that they might damage the image of conservatism as defined by the New York and Washington magazines and think tanks, they are unceremoniously purged and forced to find a new home in institutions viewed with disdain by the cultured intelligentsia. As the authors note, this is the exact opposite of the behaviour of the Left, which fiercely defends its most radical extremists. Today's Libertarian Party largely exists because its founders were purged from conservatism in the 1970s. The search for respectability and the patient construction of conservative institutions were successful in aligning the Republican party with the new conservatism. This first manifested itself in the nomination of Barry Goldwater in 1964. 
Following his disastrous defeat, conservatives continued their work, culminating in the election of Ronald Reagan in 1980. But even then, and in the years that followed, including congressional triumphs in 1994, 2010, and 2014, Republicans continued to behave as a minority party: acting only to slow the rate of growth of the Left's agenda rather than roll it back and enact their own. In the words of the authors, they are “calling for the same thing as the left, but less of it and twenty years later”. The authors call these Republicans “cuckservative” or “cuck” for short. The word is a portmanteau of “cuckold” and “conservative”. “Cuckold” dates back to A.D. 1250, and means the husband of an unfaithful wife, or a weak and ineffectual man. Voters who elect these so-called conservatives are cuckolded by them, as through their fecklessness and willingness to go along with the Left, they bring into being and support the collectivist agenda which they were elected to halt and roll back. I find nothing offensive in the definition of this word, but I don't like how it sounds—in part because it rhymes with an obscenity which has become an all-purpose word in the vocabulary of the Left and, increasingly, the young. Using the word induces a blind rage in some of those to whom it is applied, which may be its principal merit. But this book, despite bearing it as a title, is not about the word: only three pages are devoted to defining it. 
The bulk of the text is devoted to what the authors believe are the central issues facing the U.S. at present and an examination of how those calling themselves conservatives have ignored, compromised away, or sold out the interests of their constituents on each of these issues, including immigration and the consequences of a change in demographics toward those with no experience of the rule of law, the consequences of mass immigration on workers in domestic industries, globalisation and the flight of industries toward low-wage countries, how immigration has caused other societies in history to lose their countries, and how mainstream Christianity has been subverted by the social justice agenda and become an ally of the Left at the same time its pews are emptying in favour of evangelical denominations. There is extensive background information about the history of immigration in the United States, the bizarre “Magic Dirt” theory (that, for example, transplanting a Mexican community across the border will, simply by changing its location, transform its residents, in time, into Americans or, conversely, that “blighted neighbourhoods” are so because there's something about the dirt [or buildings] rather than the behaviour of those who inhabit them), and the overwhelming and growing scientific evidence for human biodiversity and the coming crack-up of the “blank slate” dogma. If the Left continues to tighten its grip upon the academy, we can expect to see research in this area be attacked as dissent from the party line on climate science is today. This is an excellent book: well written, argued, and documented. For those who have been following these issues over the years and observed the evolution of the conservative movement over the decades, there may not be much here that's new, but it's all tied up into one coherent package. For the less engaged who've just assumed that by voting for Republicans they were advancing the conservative cause, this may prove a revelation. 
If you're looking to find racism, white supremacy, fascism, authoritarianism, or any of the other epithets hurled against the dissident right, you won't find them here unless, as the Left does, you define the citation of well-documented facts as those things. What you will find is two authors who love America and believe that American policy should put the interests of Americans before those of others, and that politicians elected by Americans should be expected to act in their interest. If politicians call themselves “conservatives”, they should act to conserve what is great about America, not compromise it away in an attempt to, at best, delay the date their constituents are delivered into penury and serfdom. You may have to read this book being careful nobody looks over your shoulder to see what you're reading. You may have to never admit you've read it. You may have to hold your peace when somebody goes on a rant about the “alt-right”. But read it, and judge for yourself. If you believe the facts cited are wrong, do the research, refute them with evidence, and publish a response (under a pseudonym, if you must). But before you reject it based upon what you've heard, read it—it's only five bucks—and make up your own mind. That's what free citizens do. As I have come to expect in publications from Castalia House, the production values are superb. There are only a few (I found just three) copy editing errors. At present the book is available only in Kindle and Audible audiobook editions.

Thursday, May 19, 2016

Reading List: The Relic Master

- Buckley, Christopher. The Relic Master. New York: Simon & Schuster, 2015. ISBN 978-1-5011-2575-1.

- The year is 1517. The Holy Roman Empire sprawls across central Europe, from the Mediterranean in the south to the North Sea and Baltic in the north, from the Kingdom of France in the west to the Kingdoms of Poland and Hungary in the east. In reality the structure of the empire is so loose and complicated it defies easy description: independent kings, nobility, and prelates all have their domains of authority, and occasionally go to war against one another. Although the Reformation is about to burst upon the scene, the Roman Catholic Church is supreme, and religion is big business. In particular, the business of relics and indulgences. Commit a particularly heinous sin? If you're sufficiently well-heeled, you can obtain an indulgence through prayer, good works, or making a pilgrimage to a holy site. Over time, “good works” increasingly meant, for the prosperous, making a contribution to the treasury of the local prince or prelate, a percentage of which was kicked up to higher-ranking clergy, all the way to Rome. Or, an enterprising noble or churchman could collect relics such as the toe bone of a saint, a splinter from the True Cross, or a lock of hair from one of the camels the Magi rode to Bethlehem. Pilgrims would pay a fee to see, touch, have their sins erased, and be healed by these holy trophies. In short, the indulgence and relic business was selling “get out of purgatory for a price”. The very best businesses are those in which the product is delivered only after death—you have no problems with dissatisfied customers. To flourish in this trade, you'll need a collection of relics, all traceable to trustworthy sources. Relics were in great demand, and demand summons supply into being. All the relics of the True Cross, taken together, would have required the wood from a medium-sized forest, and even the most sacred and unique of relics, the burial shroud of Christ, was on display in several different locations. 
It's the “trustworthy” part that's difficult, and that's where Dismas comes in. A former Swiss mercenary, his resourcefulness in obtaining relics had led to his appointment as Relic Master to His Grace Albrecht, Archbishop of Brandenburg and Mainz, and also to Frederick the Wise, Elector of Saxony. These two customers were rivals in the relic business, allowing Dismas to play one against the other to his advantage. After visiting the Basel Relic Fair and obtaining some choice merchandise, he visits his patrons to exchange it for gold. While visiting Frederick, he hears that a monk has nailed ninety-five denunciations of the Church, including the sale of indulgences, to the door of the castle church. This is interesting, but potentially bad for business. Dismas meets his friend, Albrecht Dürer, whom he calls “Nars” due to Dürer's narcissism: among other things, including his own visage in most of his paintings. After months in the south hunting relics, he returns to visit Dürer and learns that the Swiss banker with whom he's deposited his fortune has been found to be a 16th century Bernie Madoff and that he has only the money on his person. Destitute, Dismas and Dürer devise a scheme to get back into the game. This launches them into a romp across central Europe visiting the castles, cities, taverns, dark forbidding forests, dungeons, and courts of nobility. We encounter historical figures including Philippus Aureolus Theophrastus Bombastus von Hohenheim (Paracelsus), who lends his scientific insight to the effort. All of this is recounted with the mix of wry and broad humour which Christopher Buckley uses so effectively in all of his novels. There is a tableau of the Last Supper, identity theft, and bombs. An appendix gives background on the historical figures who appear in the novel. This is a pure delight and illustrates how versatile is the talent of the author. Prepare yourself for a treat; this novel delivers. Here is an interview with the author.

Tuesday, May 17, 2016

Reading List: Abandoned in Place

- Miller, Roland. Abandoned in Place. Albuquerque: University of New Mexico Press, 2016. ISBN 978-0-8263-5625-3.

- Between 1945 and 1970 humanity expanded from the surface of Earth into the surrounding void, culminating in 1969 with the first landing on the Moon. Centuries from now, when humans and their descendants populate the solar system and exploit resources dwarfing those of the thin skin and atmosphere of the home planet, these first steps may be remembered as the most significant event of our age, with all of the trivialities that occupy our quotidian attention forgotten. Not only were great achievements made, but grand structures built on Earth to support them; these may be looked upon in the future as we regard the pyramids or the great cathedrals. Or maybe not. The launch pads, gantry towers, assembly buildings, test facilities, blockhouses, bunkers, and control centres were not built as monuments for the ages, but rather to accomplish time-sensitive goals under tight budgets, by the lowest bidder, and at the behest of a government famous for neglecting infrastructure. Once the job was done, the mission accomplished, the program concluded, the facilities that supported it were simply left at the mercy of the elements which, in locations like coastal Florida, immediately began to reclaim them. Indeed, half of the facilities pictured here no longer exist. For more than two decades, author and photographer Roland Miller has been documenting this heritage before it succumbs to rust, crumbling concrete, and invasive vegetation. With unparalleled access to the sites, he has assembled this gallery of these artefacts of a great age of exploration. In a few decades, this may be all we'll have to remember them. Although there is rudimentary background information from a variety of authors, this is a book of photography, not a history of the facilities. In some cases, unless you know from other sources what you're looking at, you might interpret some of the images as abstract. 
The hardcover edition is a “coffee table book”: large format and beautifully printed, with a corresponding price. The Kindle edition is, well, a Kindle book, and grossly overpriced for 193 pages with screen-resolution images and a useless index consisting solely of search terms. A selection of images from the book may be viewed on the Abandoned in Place Web site.

Sunday, May 15, 2016

Reading List: The Circle

- Eggers, Dave. The Circle. New York: Alfred A. Knopf, 2013. ISBN 978-0-345-80729-8.

- There have been a number of novels, many in recent years, which explore the possibility of human society being taken over by intelligent machines. Some depict the struggle between humans and machines, others envision a dystopian future in which the machines have triumphed, and a few explore the possibility that machines might create a “new operating system” for humanity which works better than the dysfunctional social and political systems extant today. This novel goes off in a different direction: what might happen, without artificial intelligence, but in an era of exponentially growing computer power and data storage capacity, if an industry leading company with tendrils extending into every aspect of personal interaction and commerce worldwide, decided, with all the best intentions, “What the heck? Let's be evil!”

Mae Holland had done everything society had told her to do. One of only twelve of the 81 graduates of her central California high school to go on to college, she'd been accepted by a prestigious college and graduated with a degree in psychology and massive student loans she had no prospect of paying off. She'd ended up moving back in with her parents and taking a menial cubicle job at the local utility company, working for a creepy boss. In frustration and desperation, Mae reaches out to her former college roommate, Annie, who has risen to an exalted position at the hottest technology company on the globe: The Circle. The Circle had started by creating the Unified Operating System, which combined all aspects of users' interactions—social media, mail, payments, user names—into a unique and verified identity called TruYou. (Wonder where they got that idea?)

Before long, anonymity on the Internet was a thing of the past as merchants and others recognised the value of knowing their customers and of information collected across their activity on all sites. The Circle and its associated businesses supplanted existing sites such as Google, Facebook, and Twitter, and with the tight integration provided by TruYou, created new kinds of interconnection and interaction not possible when information was Balkanised among separate sites. With the end of anonymity, spam and fraudulent schemes evaporated, and with all posters personally accountable, discussions became civil and trolls slunk back under the bridge.

With an effective monopoly on electronic communication and commercial transactions (if everybody uses TruYou to pay, what option does a merchant have but to accept it and pay The Circle's fees?), The Circle was assured a large, recurring, and growing revenue stream. With the established businesses generating so much cash, The Circle invested heavily in research and development of new technologies: everything from sustainable housing, access to DNA databases, crime prevention, to space applications.

Mae's initial job was far more mundane. In Customer Experience, she was more or less working in a call centre, except her communications with customers were over The Circle's message services. The work was nothing like that at the utility company, however. Her work was monitored in real time, with a satisfaction score computed from follow-up surveys by clients. To advance, a score near 100 was required, and Mae had to follow up on any scores less than that to satisfy the customer and obtain a perfect score. On a second screen, internal “zing” messages informed her of activity on the campus, and she was expected to respond and contribute.

As she advances within the organisation, Mae begins to comprehend the scope of The Circle's ambitions. One of the founders unveils a plan to make always-on cameras and microphones available at very low cost, which people can install around the world. All the feeds will be accessible in real time and archived forever. A new slogan is unveiled:

“All that happens must be known.”

At a party, Mae meets a mysterious character, Kalden, who appears to have access to parts of The Circle's campus unknown to her associates and yet doesn't show up in the company's exhaustive employee social networks. Her encounters and interactions with him become increasingly mysterious.

Mae moves up, and is chosen to participate to a greater extent in the social networks, and to rate products and ideas. All of this activity contributes to her participation rank, computed and displayed in real time. She swallows a sensor which will track her health and vital signs in real time, display them on a wrist bracelet, and upload them for analysis and early warning diagnosis.

Eventually, she volunteers to “go transparent”: wear a body camera and microphone every waking moment, and act as a window into The Circle for the general public. The company had pushed transparency for politicians, and now was ready to deploy it much more widely.

Secrets Are Lies

To Mae's family and few remaining friends outside The Circle, this all seems increasingly bizarre: as if the fastest growing and most prestigious high technology company in the world has become a kind of grotesque cult which consumes the lives of its followers and aspires to become universal. Mae loves her sense of being connected, the interaction with a worldwide public, and thinks it is just wonderful. The Circle internally tests and begins to roll out a system of direct participatory democracy to replace existing political institutions. Mae is there to report it. A plan to put an end to most crime is unveiled: Mae is there. The Circle is closing. Mae is contacted by her mysterious acquaintance, and presented with a moral dilemma: she has become a central actor on the stage of a world which is on the verge of changing, forever. This is a superbly written story which I found both realistic and chilling. You don't need artificial intelligence or malevolent machines to create an eternal totalitarian nightmare. All it takes is a few years' growth and wider deployment of technologies which exist today, combined with good intentions, boundless ambition, and fuzzy thinking. And the latter three commodities are abundant among today's technology powerhouses. Lest you think the technologies which underlie this novel are fantasy or far in the future, they were discussed in detail in David Brin's 1999 The Transparent Society and my 1994 “Unicard” and 2003 “The Digital Imprimatur”. All that has changed is that the massive computing, communication, and data storage infrastructure envisioned in those works now exists or will within a few years. What should you fear most? Probably the millennials who will read this and think, “Wow! This will be great.” “Democracy is mandatory here!”

Sharing Is Caring

Privacy Is Theft

Monday, May 9, 2016

Transit of Mercury

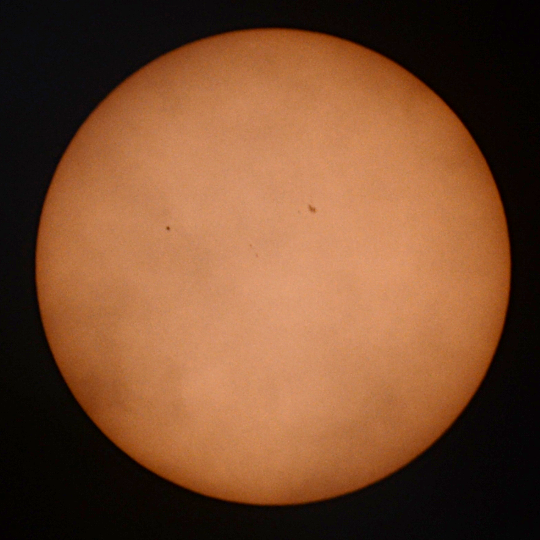

I was clouded out for most of today's transit of Mercury, but in mid-afternoon the skies cleared briefly and I was able to observe the transit visually and capture the following picture through thin clouds. Mercury is the dark black dot at the left, along the 10 o'clock direction from the centre of the Sun. The shading on the Sun's surface is due to the thin clouds through which I took this picture. Note how much darker Mercury's disc is than the sunspot group (Active Region 12542).

The photo was taken at 13:43 UTC from the Fourmilab driveway with a Nikon D600 camera. Exposure was 1/1250 second at the fixed f/8 aperture of the Nikon 500 mm catadioptric "mirror lens" with ISO 400 sensitivity. A full aperture Orion solar filter was mounted on the front of the lens. This image is cropped from the full frame and scaled down to fit on the page. Minor contrast stretching and sharpening were done with GIMP.

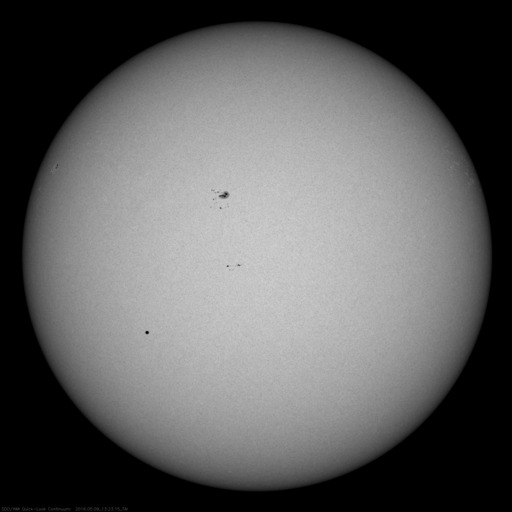

For comparison, below is an image of the transit from space, captured by the Solar Dynamics Observatory's (SDO) Helioseismic and Magnetic Imager.

The image appears rotated with respect to mine because solar north is up in the SDO image, while mine shows the Sun as it appears from my location at 47° north latitude.

Saturday, May 7, 2016

Reading List: Black Hole Blues

- Levin, Janna. Black Hole Blues. New York: Alfred A. Knopf, 2016. ISBN 978-0-307-95819-8.

- In Albert Einstein's 1915 general theory of relativity, gravitation does not propagate instantaneously as it did in Newton's theory, but at the speed of light. According to relativity, nothing can propagate faster than light. This has a consequence which was not originally appreciated when the theory was published: if you move an object here, its gravitational influence upon an object there cannot arrive any faster than a pulse of light travelling between the two objects. But how is that change in the gravitational field transmitted? For light, it is via the electromagnetic field, which is described by Maxwell's equations and implies the existence of excitations of the field which, according to their wavelength, we call radio, light, and gamma rays. Are there, then, equivalent excitations of the gravitational field (which, according to general relativity, can be thought of as curvature of spacetime), which transmit the changes due to motion of objects to distant objects affected by their gravity and, if so, can we detect them? By analogy to electromagnetism, where we speak of electromagnetic waves or electromagnetic radiation, these would be gravitational waves or gravitational radiation.

Einstein first predicted the existence of gravitational waves in a 1916 paper, but he made a mathematical error in the nature of sources and the magnitude of the effect. This was corrected in a paper he published in 1918 which describes gravitational radiation as we understand it today. According to Einstein's calculations, gravitational waves were real, but interacted so weakly that any practical experiment would never be able to detect them. If gravitation is thought of as the bending of spacetime, the equations tell us that spacetime is extraordinarily stiff: when you encounter an equation with the speed of light, c, raised to the fourth power in the denominator, you know you're in trouble trying to detect the effect.

That's where the matter rested for almost forty years. Some theorists believed that gravitational waves existed but, given the potential sources we knew about (planets orbiting stars, double and multiple star systems), the energy emitted was so small (the Earth orbiting the Sun emits a grand total of 200 watts of energy in gravitational waves, which is absolutely impossible to detect with any plausible apparatus), we would never be able to detect it. Other physicists doubted the effect was real, and that gravitational waves actually carried energy which could, even in principle, produce effects which could be detected. This dispute was settled to the satisfaction of most theorists by the sticky bead argument, proposed in 1957 by Richard Feynman and Hermann Bondi. Although a few dissenters remained, most of the small community interested in general relativity agreed that gravitational waves existed and could carry energy, but continued to believe we'd probably never detect them.
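
As a rough check on the 200 watt figure quoted above, the following Python sketch evaluates the textbook quadrupole formula for the gravitational wave power radiated by two point masses in a circular orbit. The formula, the function name, and the rounded constants are assumptions made for illustration; none of them is taken from the book.

```python
# Gravitational wave power radiated by two point masses in a circular orbit,
# using the textbook quadrupole formula (an assumption of this sketch):
#   P = (32/5) * G^4 / c^5 * (m1*m2)^2 * (m1 + m2) / r^5
# All constants are rounded reference values.

G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8          # speed of light, m/s
M_SUN = 1.989e30     # mass of the Sun, kg
M_EARTH = 5.972e24   # mass of the Earth, kg
AU = 1.496e11        # mean Earth-Sun distance, m


def gw_power_circular(m1: float, m2: float, r: float) -> float:
    """Return radiated power in watts for masses m1, m2 (kg) separated by r (m)."""
    return (32.0 / 5.0) * G**4 / C**5 * (m1 * m2) ** 2 * (m1 + m2) / r**5


if __name__ == "__main__":
    power = gw_power_circular(M_SUN, M_EARTH, AU)
    print(f"Earth-Sun gravitational wave power: about {power:.0f} W")
```

With these inputs the result comes out at roughly 200 watts. The steep dependence on the separation (the fifth power of r in the denominator) is why only very compact, massive systems are plausible sources.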

This outlook changed in the 1960s. Radio astronomers, along with optical astronomers, began to discover objects in the sky which seemed to indicate the universe was a much more violent and dynamic place than had been previously imagined. Words like “quasar”, “neutron star”, “pulsar”, and “black hole” entered the vocabulary, and suggested there were objects in the universe where gravity might be so strong and motion so fast that gravitational waves could be produced which might be detected by instruments on Earth.

Joseph Weber, an experimental physicist at the University of Maryland, was the first to attempt to detect gravitational radiation. He used large bars, now called Weber bars, of aluminium, usually cylinders two metres long and one metre in diameter, instrumented with piezoelectric sensors. The bars were, based upon their material and dimensions, resonant at a particular frequency, and could detect a change in length of the cylinder of around 10⁻¹⁶ metres. Weber was a pioneer in reducing noise of his detectors, and operated two detectors at different locations so that signals would only be considered valid if observed nearly simultaneously by both.
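
To give a feel for the numbers, this Python sketch estimates the fundamental longitudinal resonance of a two metre aluminium bar and the fractional length change (strain) that a 10⁻¹⁶ metre displacement represents. It assumes an idealised thin free-free rod and rounded handbook values for aluminium, so treat the output as an order-of-magnitude illustration and not as a description of Weber's actual apparatus.

```python
import math

# Rounded handbook values for aluminium (assumptions of this sketch).
YOUNGS_MODULUS = 69e9   # Pa
DENSITY = 2700.0        # kg/m^3


def bar_fundamental_hz(length_m: float) -> float:
    """Fundamental longitudinal mode of a thin free-free rod: f = v / (2 L)."""
    sound_speed = math.sqrt(YOUNGS_MODULUS / DENSITY)  # roughly 5 km/s
    return sound_speed / (2.0 * length_m)


def strain(delta_length_m: float, length_m: float) -> float:
    """Fractional change in length."""
    return delta_length_m / length_m


if __name__ == "__main__":
    bar_length = 2.0  # metres, from the text
    print(f"resonance near {bar_fundamental_hz(bar_length):.0f} Hz")
    print(f"strain for 1e-16 m: {strain(1e-16, bar_length):.1e}")
```

Under these assumptions the bar rings at a little over a kilohertz, which illustrates why such a detector is sensitive only in a narrow band around its resonance.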

What nobody knew was how “noisy” the sky was in gravitational radiation: how many sources there were and how strong they might be. Theorists could offer little guidance: ultimately, you just had to listen. Weber listened, and reported signals he believed consistent with gravitational waves. But others who built comparable apparatus found nothing but noise and theorists objected that if objects in the universe emitted as much gravitational radiation as Weber's detections implied, it would convert all of its mass into gravitational radiation in just fifty million years. Weber's claims of having detected gravitational radiation are now considered to have been discredited, but there are those who dispute this assessment. Still, he was the first to try, and made breakthroughs which informed subsequent work. Might there be a better way, which could detect even smaller signals than Weber's bars, and over a wider frequency range? (Since the frequency range of potential sources was unknown, casting the net as widely as possible made more potential candidate sources accessible to the experiment.) Independently, groups at MIT, the University of Glasgow in Scotland, and the Max Planck Institute in Germany began to investigate interferometers as a means of detecting gravitational waves. An interferometer had already played a part in confirming Einstein's special theory of relativity: could it also provide evidence for an elusive prediction of the general theory? An interferometer is essentially an absurdly precise ruler where the markings on the scale are waves of light. You send beams of light down two paths, and adjust them so that the light waves cancel (interfere) when they're combined after bouncing back from mirrors at the end of the two paths. If there's any change in the lengths of the two paths, the light won't interfere precisely, and its intensity will increase depending upon the difference. But when a gravitational wave passes, that's precisely what happens! 
Lengths in one direction will be squeezed while those orthogonal (at a right angle) will be stretched. In principle, an interferometer can be an exquisitely sensitive detector of gravitational waves. The gap between principle and practice required decades of diligent toil and hundreds of millions of dollars to bridge. From the beginning, it was clear it would not be easy. The field of general relativity (gravitation) had been called “a theorist's dream, an experimenter's nightmare”, and almost everybody working in the area were theorists: all they needed were blackboards, paper, pencils, and lots of erasers. This was “little science”. As the pioneers began to explore interferometric gravitational wave detectors, it became clear what was needed was “big science”: on the order of large particle accelerators or space missions, with budgets, schedules, staffing, and management comparable to such projects. This was a culture shock to the general relativity community as violent as the astrophysical sources they sought to detect. Between 1971 and 1989, theorists and experimentalists explored detector technologies and built prototypes to demonstrate feasibility. In 1989, a proposal was submitted to the National Science Foundation to build two interferometers, widely separated geographically, with an initial implementation to prove the concept and a subsequent upgrade intended to permit detection of gravitational radiation from anticipated sources. After political battles, in 1995 construction of LIGO, the Laser Interferometer Gravitational-Wave Observatory, began at the two sites located in Livingston, Louisiana and Hanford, Washington, and in 2001, commissioning of the initial detectors was begun; this would take four years. Between 2005 and 2007 science runs were made with the initial detectors; much was learned about sources of noise and the behaviour of the instrument, but no gravitational waves were detected. 
Starting in 2007, based upon what had been learned so far, construction of the advanced interferometer began. This took three years. Between 2010 and 2012, the advanced components were installed, and another three years were spent commissioning them: discovering their quirks, fixing problems, and increasing sensitivity. Finally, in 2015, observations with the advanced detectors began. The sensitivity which had been achieved was astonishing: the interferometers could detect a change in the length of their four kilometre arms which was one ten-thousandth the diameter of a proton (the nucleus of a hydrogen atom). In order to accomplish this, they had to overcome noise which ranged from distant earthquakes, traffic on nearby highways, tides raised in the Earth by the Sun and Moon, and a multitude of other sources, via a tower of technology which made the machine, so simple in concept, forbiddingly complex. September 14, 2015, 09:51 UTC: Chirp! A hundred years after the theory that predicted it, 44 years after physicists imagined such an instrument, 26 years after it was formally proposed, 20 years after it was initially funded, a gravitational wave had been detected, and it was right out of the textbook: the merger of two black holes with masses around 29 and 36 times that of the Sun, at a distance of 1.3 billion light years. A total of three solar masses were converted into gravitational radiation: at the moment of the merger, the gravitational radiation emitted was 50 times greater than the light from all of the stars in the universe combined. Despite the stupendous energy released by the source, when it arrived at Earth it could only have been detected by the advanced interferometer which had just been put into service: it would have been missed by the initial instrument and was orders of magnitude below the noise floor of Weber's bar detectors. 
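
Two of the figures above can be turned into quick estimates: the strain corresponding to a length change of one ten-thousandth of a proton diameter over a four kilometre arm, and the energy equivalent of three solar masses via E = mc². The proton diameter and the physical constants in this Python sketch are assumed round values, not numbers taken from the book.

```python
C = 2.998e8                # speed of light, m/s
M_SUN = 1.989e30           # mass of the Sun, kg
PROTON_DIAMETER = 1.7e-15  # m, assumed approximate value
ARM_LENGTH = 4000.0        # m, from the text


def arm_strain(delta_length_m: float, arm_length_m: float = ARM_LENGTH) -> float:
    """Fractional length change of an interferometer arm."""
    return delta_length_m / arm_length_m


def mass_energy_joules(solar_masses: float) -> float:
    """Energy equivalent of a mass given in solar masses, E = m c^2."""
    return solar_masses * M_SUN * C**2


if __name__ == "__main__":
    delta = PROTON_DIAMETER / 1e4  # one ten-thousandth of a proton diameter
    print(f"length change: {delta:.1e} m")
    print(f"strain:        {arm_strain(delta):.1e}")
    print(f"energy of three solar masses: {mass_energy_joules(3):.2e} J")
```

The strain works out to a few parts in 10²³ and the energy to around 5 × 10⁴⁷ joules, which puts the contrast between the violence of the source and the faintness of the signal at Earth into concrete terms.
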
For only the third time since proto-humans turned their eyes to the sky a new channel of information about the universe we inhabit was opened. Most of what we know comes from electromagnetic radiation: light, radio, microwaves, gamma rays, etc. In the 20th century, a second channel opened: particles. Cosmic rays and neutrinos allow exploring energetic processes we cannot observe in any other way. In a real sense, neutrinos let us look inside the Sun and into the heart of supernovæ and see what's happening there. And just last year the third channel opened: gravitational radiation. The universe is almost entirely transparent to gravitational waves: that's why they're so difficult to detect. But that means they allow us to explore the universe at its most violent: collisions and mergers of neutron stars and black holes—objects where gravity dominates the forces of the placid universe we observe through telescopes. What will we see? What will we learn? Who knows? If experience is any guide, we'll see things we never imagined and learn things even the theorists didn't anticipate. The game is afoot! It will be a fine adventure. Black Hole Blues is the story of gravitational wave detection, largely focusing upon LIGO and told through the eyes of Rainer Weiss and Kip Thorne, two of the principals in its conception and development. It is an account of the transition of a field of research from a theorist's toy to Big Science, and the cultural, management, and political problems that involves. There are few examples in experimental science where so long an interval has elapsed, and so much funding expended, between the start of a project and its detecting the phenomenon it was built to observe. The road was bumpy, and that is documented here. I found the author's tone off-putting. 
She, a theoretical cosmologist at Barnard College, dismisses scientists with achievements which dwarf her own and ideas which differ from hers in the way one expects from Social Justice Warriors in the squishier disciplines at the Seven Sisters: “the notorious Edward Teller”, “Although Kip [Thorne] outgrew the tedious moralizing, the sexism, and the religiosity of his Mormon roots”, (about Joseph Weber) “an insane, doomed, impossible bar detector designed by the old mad guy, crude laboratory-scale slabs of metal that inspired and encouraged his anguished claims of discovery”, “[Stephen] Hawking made his oddest wager about killer aliens or robots or something, which will not likely ever be resolved, so that might turn out to be his best bet yet”, (about Richard Garwin) “He played a role in halting the Star Wars insanity as well as potentially disastrous industrial escalations, like the plans for supersonic airplanes…”, and “[John Archibald] Wheeler also was not entirely against the House Un-American Activities Committee. He was not entirely against the anticommunist fervor that purged academics from their ivory-tower ranks for crimes of silence, either.” … “I remember seeing him at the notorious Princeton lunches, where visitors are expected to present their research to the table. Wheeler was royalty, in his eighties by then, straining to hear with the help of an ear trumpet. (Did I imagine the ear trumpet?)”. There are also a number of factual errors (for example, a breach in the LIGO beam tube sucking out all of the air from its enclosure and suffocating anybody inside), which a moment's calculation would have shown was absurd. The book was clearly written with the intention of being published before the first detection of a gravitational wave by LIGO. The entire story of the detection, its validation, and public announcement is jammed into a seven page epilogue tacked onto the end. This epochal discovery deserves being treated at much greater length.